Experts say that deepfake technology, which is barely four years old, may be at a decisive point

Microsoft, December 9, 2021

The newly published AI security risk assessment framework is another step towards empowering organizations to reliably audit, monitor, and improve the security of their artificial intelligence systems.

Protecting smart systems from attackers is a pressing issue. Various organizations are actively downloading and studying Counterfit to protect their artificial intelligence systems. In addition, the previously announced Machine Learning Evasion Challenge drew a record number of entrants compared with the previous year. All of this points to growing interest in, and commitment to, securing artificial intelligence systems. However, turning that interest into actions that actually raise the security level of AI systems is not as easy or obvious as it seems.

The new framework from Microsoft therefore has the following characteristics:

- It provides a comprehensive perspective on the security of artificial intelligence systems;

- It outlines the threats to machine learning systems and gives recommendations for mitigating them;

- It makes it easier for organizations to conduct risk assessments.

Experts believe that a comprehensive assessment of the security risks of an AI system has to cover the entire life cycle of system development and deployment. To fully protect an AI model, we need to consider the security of the entire supply chain and the management of the AI systems.

Quanta Magazine, December 7, 2021

Some artificial noise can trick neural networks, which is why researchers are looking for a solution to the problem using neuroscience.

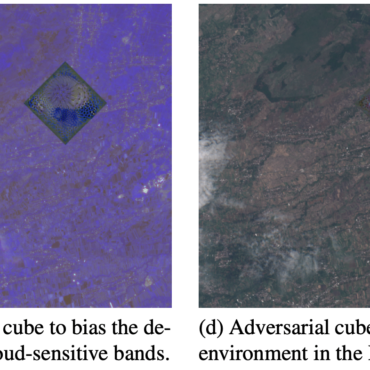

Artificial intelligence sees things that the human eye often does not notice. Despite the progress in artificial intelligence, smart systems can still be easily fooled. Sometimes it is enough to add a small amount of noise that is imperceptible to the human eye but capable of deceiving the model, for example to change the classification result.
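The effect can be illustrated with a deliberately tiny example. The sketch below is not taken from the article: the linear classifier, its weights, the input values, and the `epsilon` budget are all made-up assumptions, chosen only to show how a small, bounded change to every feature can push an input across the decision boundary.

```python
# Toy linear classifier: predicts class 1 when the weighted sum is positive.
# WEIGHTS and the input below are hypothetical values, not from a real model.
WEIGHTS = [0.5, -0.3, 0.8, -0.6]


def score(x):
    """Weighted sum of the input features."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x))


def predict(x):
    """Return 1 if the score is positive, otherwise 0."""
    return 1 if score(x) > 0 else 0


def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def perturb(x, epsilon):
    """Shift each feature by at most epsilon in the direction that lowers the score."""
    return [xi - epsilon * sign(w) for w, xi in zip(WEIGHTS, x)]


original = [0.2, 0.1, 0.1, 0.2]
adversarial = perturb(original, epsilon=0.05)

print(predict(original), predict(adversarial))
```

No feature moves by more than `epsilon`, yet the predicted class changes. Real attacks on image classifiers follow the same idea at a much larger scale, using the model's gradients instead of fixed weights.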

Nicolas Papernot of the University of Toronto and his colleagues have found a way to trick machine learning models that process language by tampering with the input text in a way that is invisible to humans. Even tiny additions, such as individual characters, can damage the model's understanding of the text, and this has consequences for human users, who end up interacting with the result of a computation that has been imperceptibly influenced.
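A minimal sketch of the general idea follows. It is an illustration under stated assumptions, not the researchers' method: the keyword-based `classify` function, the `NEGATIVE_WORDS` list, and the choice of a zero-width space (U+200B) as the invisible character are all hypothetical stand-ins for a real language model and a real attack.

```python
# A zero-width space renders as nothing, so the tampered text looks unchanged.
ZERO_WIDTH_SPACE = "\u200b"

# Hypothetical keyword list standing in for a real text classifier.
NEGATIVE_WORDS = {"terrible", "awful", "bad"}


def classify(text):
    """Label text as 'negative' if any word matches the keyword list."""
    words = (w.strip(".,!?") for w in text.lower().split())
    return "negative" if any(w in NEGATIVE_WORDS for w in words) else "neutral"


def inject_invisible(text, word):
    """Insert a zero-width space after the first character of `word`."""
    tampered_word = word[0] + ZERO_WIDTH_SPACE + word[1:]
    return text.replace(word, tampered_word)


clean = "The service was terrible."
tampered = inject_invisible(clean, "terrible")

print(classify(clean), classify(tampered))
```

The two strings differ by a single character that is not displayed, yet the toy classifier no longer recognizes the keyword. One common mitigation, under the same assumptions, is to strip or normalize such characters before the text reaches the model.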

A major problem is precisely that the noise needed to deceive the machine is completely invisible to humans. Researchers have already demonstrated a number of innovations in their attempts to find defenses that draw on biological research. While there has been significant progress in this area lately, much remains to be done before such methods are accepted as proven solutions, and not everyone is convinced that biology is the place to look.

WHBL, December 13, 2021

Experts say that deepfake technology, which is barely four years old, may be at a decisive point.

One online store offered shoppers the ability to create synthetic, AI-generated multimedia, also known as deepfakes, while the same app was advertised differently on dozens of adult sites. Today, ordinary viewers find it difficult to distinguish many fake videos from reality. In addition, deepfakes have become as accessible as possible: almost anyone with a smartphone can create them, and no special knowledge is required.

“When the entry point gets so low that it doesn’t require any effort, and an inexperienced person can create a very sophisticated non-consensual deepfake pornographic video, this is the inflection point,” commented Adam Dodge, a lawyer and the founder of the online safety company EndTab.

It is becoming obvious that such universal accessibility and popularity of technology can lead to rather big problems, and regulation of this issue is a priority task at the moment.

Marietje Schaake, director of international policy at the Stanford University Cyber Policy Center and a former member of the European Parliament, explained that current digital laws, including the proposed Artificial Intelligence Act in the US and the GDPR in Europe, may govern elements of deepfake technology, but that they currently have a number of gaps that will need to be closed.