AI Red Teaming Talk at ML Conference Munich 2021

ML Conference is the go-to event for Machine Learning enthusiasts, the world’s leading machine learning experts and innovators as they share their ideas and experience. The founder of Adversa Alex ...

Adversarial ML · admin · December 9, 2021

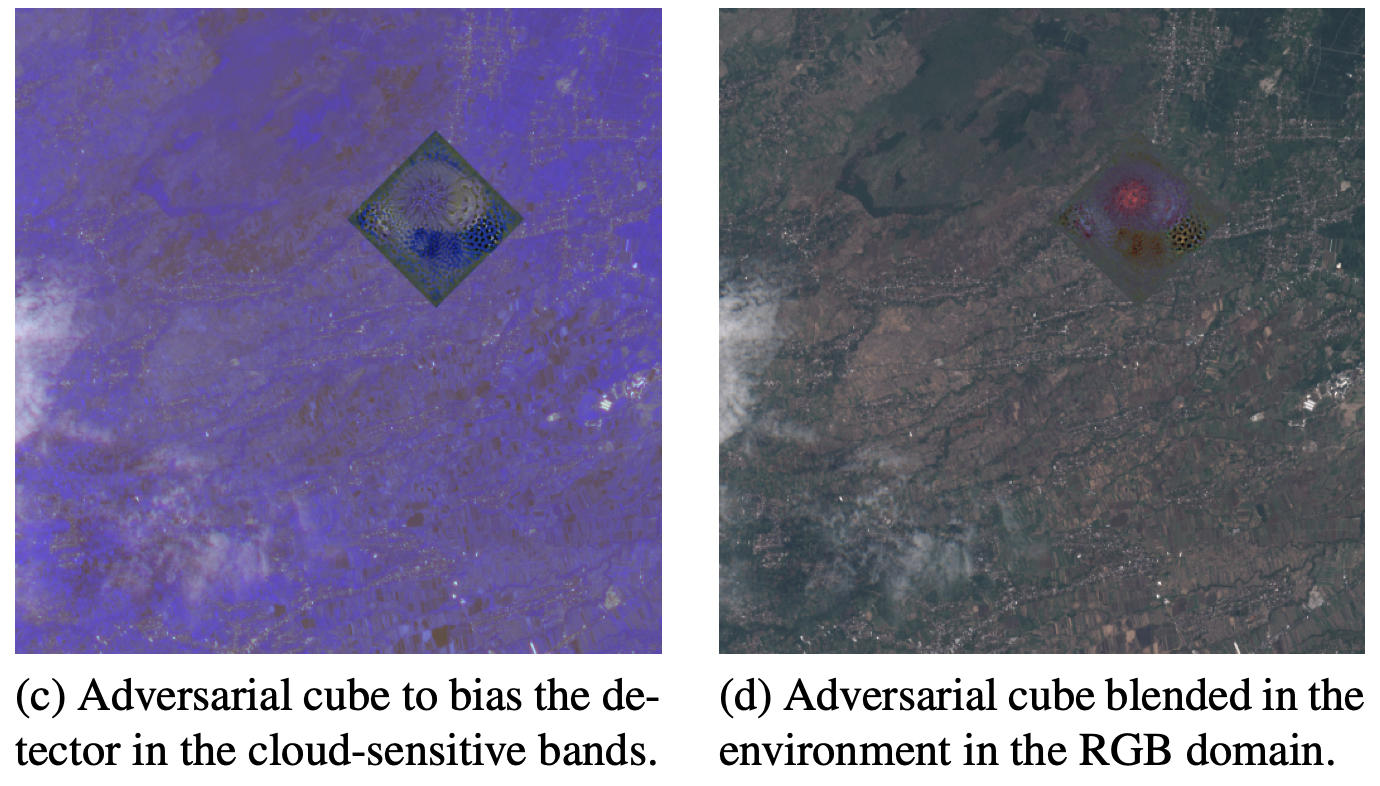

Data collected by Earth observation (EO) satellites is affected by cloud cover. Cloud detection, often done with deep learning, is therefore critical to running EO applications, because only clear-sky data is transmitted on the downlink to save precious bandwidth. In this study, Andrew Du, Yee Wei Law, Michele Sasdelli, Bo Chen, Ken Clarke, Michael Brown, and Tat-Jun Chin investigate how vulnerable deep learning cloud detectors are to adversarial attacks. By optimizing an adversarial pattern and superimposing it on a cloud-free background, they cause the neural network to detect clouds that are not there. The attacks were generated in the multispectral domain.
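A minimal sketch of the pattern-optimization idea, assuming a toy per-pixel logistic-regression cloud detector in place of the deep network used in the study, and a hypothetical rectangular patch. The names `cloud_prob` and `optimize_pattern`, the band weights, and the step size are illustrative assumptions, not the authors' code. Gradient ascent on log P(cloud) inside the patch pushes clear-sky pixels toward the spectral signature the detector associates with clouds, while clipping keeps reflectances in [0, 1].

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def cloud_prob(image, w, b):
    """Toy per-pixel cloud detector (hypothetical): logistic regression over bands.

    image: (H, W, C) reflectances in [0, 1]; returns an (H, W) map of P(cloud)."""
    return sigmoid(image @ w + b)


def optimize_pattern(background, w, b, patch, steps=200, lr=0.05):
    """Gradient ascent on the sum of log P(cloud) over a rectangular patch.

    patch = (row0, row1, col0, col1); the optimized pattern replaces those
    pixels of the cloud-free background, everything else is left untouched."""
    r0, r1, c0, c1 = patch
    pattern = background[r0:r1, c0:c1].copy()  # start from the clear-sky pixels
    for _ in range(steps):
        p = cloud_prob(pattern, w, b)
        # d/dx log sigmoid(w . x + b) = (1 - p) * w for every pixel
        grad = (1.0 - p)[..., None] * w
        pattern = np.clip(pattern + lr * grad, 0.0, 1.0)  # keep valid reflectances
    attacked = background.copy()
    attacked[r0:r1, c0:c1] = pattern
    return attacked


w = np.array([2.0, 2.0, 3.0, -1.0])  # hypothetical detector weights (4 bands)
b = -4.0
clear_sky = np.full((16, 16, 4), 0.1)  # synthetic cloud-free scene
attacked = optimize_pattern(clear_sky, w, b, patch=(4, 12, 4, 12))
print(cloud_prob(clear_sky, w, b).max(), cloud_prob(attacked, w, b)[4:12, 4:12].mean())
```

With a real deep detector, the hand-derived gradient `(1 - p) * w` would be replaced by automatic differentiation; the loop structure stays the same.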

Because the detector's input is multispectral, this opens up multi-objective attacks, for example adversarial biasing in the cloud-sensitive bands combined with visual camouflage in the visible bands. The authors also investigated defense strategies against such adversarial attacks.
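The multi-objective idea can be sketched by adding a camouflage penalty to a detector-biasing objective. This is an illustration under stated assumptions, not the study's formulation: the 5-band layout (bands 0 to 2 visible, bands 3 and 4 cloud-sensitive), the linear detector, the penalty weight `lam`, and the function name `multi_objective_pattern` are all hypothetical. Here the whole scene is perturbed rather than a patch.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def multi_objective_pattern(background, w, b, visible, lam=5.0, steps=300, lr=0.05):
    """Gradient ascent on sum[log P(cloud) - lam * ||x_vis - background_vis||^2].

    Term 1 biases the (hypothetical, linear) detector toward "cloud".
    Term 2 keeps the visible bands close to the original clear-sky scene
    (visual camouflage). This plain update is stable only if 2 * lr * lam < 2."""
    x = background.copy()
    target = background[..., visible]
    for _ in range(steps):
        p = sigmoid(x @ w + b)
        grad = (1.0 - p)[..., None] * w                               # biasing term
        grad[..., visible] -= 2.0 * lam * (x[..., visible] - target)  # camouflage term
        x = np.clip(x + lr * grad, 0.0, 1.0)
    return x


# Hypothetical sensor: bands 0-2 visible (RGB), bands 3-4 cloud-sensitive.
w = np.array([0.5, 0.5, 0.5, 4.0, 4.0])
b = -5.0
visible = [0, 1, 2]
clear_sky = np.full((8, 8, 5), 0.1)
attacked = multi_objective_pattern(clear_sky, w, b, visible)
print(sigmoid(attacked @ w + b).mean(),
      np.abs(attacked[..., visible] - clear_sky[..., visible]).max())
```

Raising `lam` trades detector bias for better camouflage; the weighted sum is just one simple way to combine the two objectives.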

Automatic speech recognition (ASR) systems are widely used, for example in voice navigation and voice control of household appliances. The computational core of an ASR system is a deep neural network (DNN), which attackers can easily target.

To help validate ASR systems, Xiaoliang Wu and Ajitha Rajan propose techniques that automatically generate black-box, untargeted adversarial attacks that are portable across ASRs. Most work on adversarial testing of ASR focuses on targeted attacks, that is, generating audio samples that produce a given output text. Such methods cannot be carried over to other systems, because they are tailored to the structure of the DNN (white-box) inside a specific ASR.

In contrast, the presented method attacks the signal processing stage of the ASR pipeline, which most ASRs share. The generated adversarial audio samples are indistinguishable to human hearing, because the acoustic signal is manipulated with a psychoacoustic model that keeps the changes below the thresholds of human perception. The portability and effectiveness of the methods were assessed on three popular ASRs and three input audio datasets using three metrics: the word error rate (WER) of the output text, similarity to the original audio, and the attack success rate on different ASRs. The techniques proved portable across ASRs, with the adversarial audio samples achieving high success rates, high WERs, and high similarity to the original audio.
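The three evaluation metrics can be sketched in a few lines. WER below is the standard word-level edit distance (substitutions + deletions + insertions) divided by the number of reference words. Two parts are assumptions, since the study's exact definitions are not given here: an untargeted attack is counted as successful when the adversarial transcript differs from the clean one, and cosine similarity between waveforms stands in for the audio similarity measure.

```python
import math


def word_error_rate(reference, hypothesis):
    """WER = word-level Levenshtein distance / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    # d[i][j] = edit distance between ref[:i] and hyp[:j]
    d = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        d[i][0] = i
    for j in range(len(hyp) + 1):
        d[0][j] = j
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            d[i][j] = min(d[i - 1][j] + 1,         # deletion
                          d[i][j - 1] + 1,         # insertion
                          d[i - 1][j - 1] + cost)  # substitution or match
    return d[len(ref)][len(hyp)] / max(len(ref), 1)


def cosine_similarity(a, b):
    """Assumed similarity measure: cosine of the angle between two waveforms."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def success_rate(transcript_pairs):
    """Assumption: an untargeted attack succeeds when the ASR output changes."""
    changed = sum(1 for clean, adv in transcript_pairs
                  if word_error_rate(clean, adv) > 0)
    return changed / len(transcript_pairs)


print(word_error_rate("turn on the lights", "turn off the lights"))  # → 0.25
```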

Deep learning (DL) malware detectors are used for early detection of malicious behavior in cybersecurity, but their sensitivity to adversarial malware variants poses serious security risks. Generating such adversarial variants is therefore crucial for making DL-based malware detectors more resistant to them. This need has given rise to a growing line of research, Adversarial Malware example Generation (AMG), whose goal is to create evasive adversarial malware variants that retain the malicious functionality of the original malware.

In this study, James Lee Hu, Mohammadreza Ebrahimi, and Hsinchun Chen demonstrate that a new DL-based causal language model enables one-shot evasion (a single query to the malware detector) by treating the contents of a malware executable as a byte sequence and training a Generative Pre-Trained Transformer (GPT) on such sequences. Their proposed method, MalGPT, achieved an evasion rate of over 24.51% on a real-world malware dataset sourced from VirusTotal.
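The core idea (a causal model over raw bytes that generates content to make a malware sample evade a detector in one query) can be illustrated at toy scale. This is not MalGPT: a byte bigram model stands in for the GPT, appending generated bytes after the original content is assumed to be the functionality-preserving modification, and `toy_detector` is a hypothetical detector that flags files dominated by high byte values. All function names are illustrative.

```python
import random
from collections import defaultdict


def train_byte_bigram(corpus):
    """Count next-byte frequencies: a tiny causal (left-to-right) byte model.
    Hypothetical stand-in for a GPT, which conditions on far longer contexts."""
    counts = defaultdict(lambda: defaultdict(int))
    for seq in corpus:
        for prev, nxt in zip(seq, seq[1:]):
            counts[prev][nxt] += 1
    return counts


def sample_bytes(model, start, length, rng):
    """Autoregressively sample `length` bytes, each conditioned on the previous one."""
    out = [start]
    while len(out) < length:
        successors = model.get(out[-1])
        if not successors:  # unseen context: fall back to the start byte
            out.append(start)
            continue
        symbols, weights = zip(*successors.items())
        out.append(rng.choices(symbols, weights=weights)[0])
    return bytes(out)


def toy_detector(data, threshold=0.5):
    """Hypothetical detector: flags a file if more than half its bytes are >= 0x80."""
    return sum(1 for x in data if x >= 0x80) / len(data) > threshold


def single_shot_evasion_rate(malware, model, start, payload_len, detector, seed=0):
    """Generate one variant per sample and query the detector exactly once."""
    rng = random.Random(seed)
    evaded = 0
    for sample in malware:
        # Assumption: appending after the original bytes preserves behavior.
        variant = sample + sample_bytes(model, start, payload_len, rng)
        if not detector(variant):
            evaded += 1
    return evaded / len(malware)


benign = [b"This is a harmless text file. " * 20]   # synthetic "benign" corpus
model = train_byte_bigram(benign)
malware = [bytes(range(128, 256)) * 2, bytes(range(255, 127, -1)) * 2]  # synthetic
print(single_shot_evasion_rate(malware, model, ord("T"), 512, toy_detector))  # → 1.0
```

The evasion rate here is simply the fraction of generated variants the detector fails to flag on its single query; a short payload (for example 64 bytes) does not dilute the samples enough and yields 0.0.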

Written by: admin


Adversa AI, Trustworthy AI Research & Advisory