Enhancing the safety of autonomous vehicles in critical scenarios

Tech Xplore, July 26, 2022

Ingrid Fadelli

A framework developed by researchers at the University of Ulm in Germany is designed to detect potential threats around self-driving vehicles in real time. It could help make them safer in urban and highly dynamic environments.

The team's paper builds on their earlier research, which aimed to give autonomous vehicles situational awareness of their environment so that they can respond better in dynamic, unknown environments.

According to one of the researchers, Matti Henning, the main idea of the work is that, instead of covering a full 360-degree perception field, resources are allocated only to the perception regions that are relevant to the current situation. This saves computing resources and makes automated vehicles more efficient. But if the field of perception is limited, does the safety of automated vehicles not decrease?

This is where our threat region identification approach comes into play: regions that might correspond to potential threats are marked as relevant in an early stage of the perception so that objects within these regions can be reliably perceived and assessed with their actual collision or threat risk. Consequently, our work aimed to design a method solely based on online information, i.e., without a-priori information, e.g., in the form of a map, to identify regions that potentially correspond to threats, so they can be forwarded as a requirement to be perceived.

– Henning explained

Researchers develop technique to bypass AI intrusion detection

Computing, 26 July 2022

John Leonard

Researchers have demonstrated an attack technique that can fool a machine learning assisted network intrusion detection system (NIDS) into allowing malicious DDoS messages to pass as legitimate traffic.

The latest network intrusion detection systems use machine learning models to identify attacks, helping incident response teams minimize security risks.

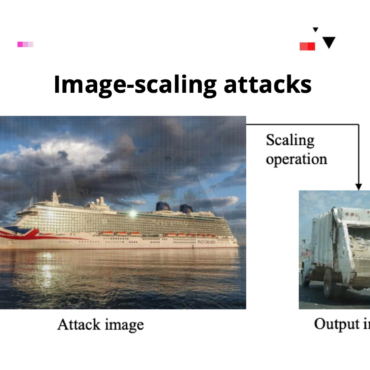

In their study, the researchers fooled a deep learning model trained for intrusion detection into miscategorising malicious DDoS traffic as normal traffic. The attack relies on adversarial perturbations: small, deliberate modifications to an input that change how a model classifies it. For instance, changing a few pixels can cause a picture of a dog to be classified as a picture of a cat. The technique is widely used against image recognition and NLP models.
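To make the idea of an adversarial perturbation concrete, here is a minimal sketch on a toy linear classifier. It is not the attack used in the study: the weights, bias, feature values, and function names are all hypothetical, and a real NIDS model would be far more complex. The sketch only shows that a small, targeted change to each input feature can flip a model's label.

```python
# Toy illustration of an adversarial perturbation (hypothetical model and data).

def score(weights, bias, features):
    """Linear decision score: positive means the sample looks malicious."""
    return sum(w * x for w, x in zip(weights, features)) + bias


def classify(weights, bias, features):
    """Map the decision score to a label."""
    return "malicious" if score(weights, bias, features) > 0 else "benign"


def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def perturb(weights, features, epsilon):
    """Shift every feature by at most epsilon in the direction that lowers the score.

    For a linear model the gradient of the score with respect to the input
    is the weight vector, so stepping against sign(weight) lowers the score.
    """
    return [x - epsilon * sign(w) for w, x in zip(weights, features)]


# Hypothetical weights and a hypothetical traffic sample (assumed values).
WEIGHTS = [0.8, -0.5, 0.3]
BIAS = -0.2
sample = [0.6, 0.2, 0.4]

adversarial = perturb(WEIGHTS, sample, epsilon=0.25)

print(classify(WEIGHTS, BIAS, sample))
print(classify(WEIGHTS, BIAS, adversarial))
```

With these assumed values, the original sample is labeled malicious and the perturbed one benign, even though no feature moved by more than 0.25.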

The authors of the study adapted two existing perturbation attack algorithms, Elastic-Net Attack on Deep Neural Networks and TextAttack, and turned them on their own intrusion detection model.

The attack was successful, generating large volumes of false positives and false negatives.

Results show that it is possible and relatively easy to deceive a machine learning NIDS, reaffirming the notion that these deep learning algorithms are quite susceptible to adversarial learning.

– Researchers say

Although the researchers have not tested the attack on commercial systems, specialized adversarial attacks could pose a danger to ML-based detection systems.

Read the full overview following the link in the title.

How ML Model Explainability Accelerates the AI Adoption Journey for Financial Services

KDnuggets, July 29, 2022

Yuktesh Kashyap

Financial companies use AI to improve operational and business-related tasks, including identifying fraud. AI automates human operations and makes them more effective, reducing manual work. However, security issues arise.

According to Gartner, by 2025, 30% of government and large enterprise contracts for the purchase of AI solutions will require the use of explainable and ethical AI.

AI in the finance industry will become more advanced, but it may leave stakeholders with no understanding of why a model failed or made a particular decision. This is the so-called black box problem: the AI provides neither explainability nor transparency. Financial organizations are exploring explainable AI to solve this problem.

Explainable AI is appealing, but explainable models become harder to build as they grow more complex. There are also concerns about competitors reverse-engineering proprietary ML models. In addition, attackers can carry out adversarial attacks that result in crashes. Adversarial examples are part of the explainability concern. That is why some financial companies employ state-of-the-art algorithms while maintaining explainability.

Implementing AI and ML model explainability will therefore pave the way forward. Financial organizations need AI partners with solid expertise to ensure explainability and transparency while meeting compliance requirements.

To learn about the use cases where AI is used extensively in financial services, and why explainable models are crucial there, read the full article by following the link in the title.
