Carrying out vulnerabilities in machine learning models as part of the study is necessary for further successful work on potential attacks and defenses. And here is a selection of the most interesting studies for June 2022. This time the topic of various Backdoors in AI is getting more attention, as we predicted in our report. The next huge area for research after evasion attacks is backdoors. Now we see an increasing more variations of such attacks including backdoors for federated learning and the ones hidden in the model architecture. Enjoy!

Federated learning (FL) is a paradigm for distributed machine learning. Now large companies including Google and Apple deploy FL that serve billions of requests daily. Its paradigm allows training models across consumer devices without aggregating data. So it is vital to ensure FL is robust.

Nonetheless, deployed FL systems have an inherent vulnerability during their training and are prone to backdoor attacks.

The previous research papers have demonstrated the possibility of adding backdoors to federated learning models, but the backdoors are not durable. This means that if the process of uploading poisoned updates are terminated, they don’t stay in the model afterwards, and an inserted backdoor may die until deployment.

In this work, the authors Zhengming Zhang, Ashwinee Panda, Linyue Song, and others find that the durability of state of the art backdoors can be doubled. They introduce Neurotoxin, a new model poisoning attack and refers to a simple modification to existing backdoor attacks. It is built to insert more durable backdoors into FL systems.

At a high level, Neurotoxin increases the robustness of the inserted backdoor to retraining. It succeeds by attacking underrepresented parameters.

You can read the research by following the links to the title.

The community is watching increasing threats to machine learning. The risks are connected with backdoored neural networks and adversarial manipulation. An adversary can modify models in the supply chain. With a specific secret trigger, which is present in the model’s input, it behaves as it is not intended.

In this paper, researchers Mikel Bober-Irizar, Ilia Shumailov, Yiren Zhao, Robert Mullins, and Nicolas Papernot propose a novel type of backdoor attacks lurking in model architectures. The attacks are real and can survive a full retraining from the ground up.

Current backdoors are straightforward since others can unknowingly reuse open-source code published for a backdoored model architecture. The researchers evaluate the attacks on computer vision and show that the vulnerability is pervasive in a variety of training settings. In case an adversary changes the architecture using typical components, he or she may launch backdoors that don’t die during re-training on a new dataset, and the model becomes sensitive to a certain trigger inserted into an image. The authors dub these backdoors “weights- and dataset-agnostic”.

Therefore, with this research, a new class of backdoor attacks is demonstrated and carried out against neural networks. The authors demonstrate how to design architectural backdoors for 3 threat models and deliver the requirements for their successful operation. Read the full research paper by following the link in the title.

Deep learning (DL) technologies have exceeded human opportunities. Due to its wide demand, it has become the core of computer vision development, natural language processing, and other vital areas like medical diagnosis. Deep neural networks (DNNs) are essential in deep learning. They are now preferable for complicated tasks and come in handy in various vision applications. However, their reliability is put at risk. With further explorations, deep neural networks have been found vulnerable to specifically built adversarial attacks that damage prediction results or lead to data leakage.

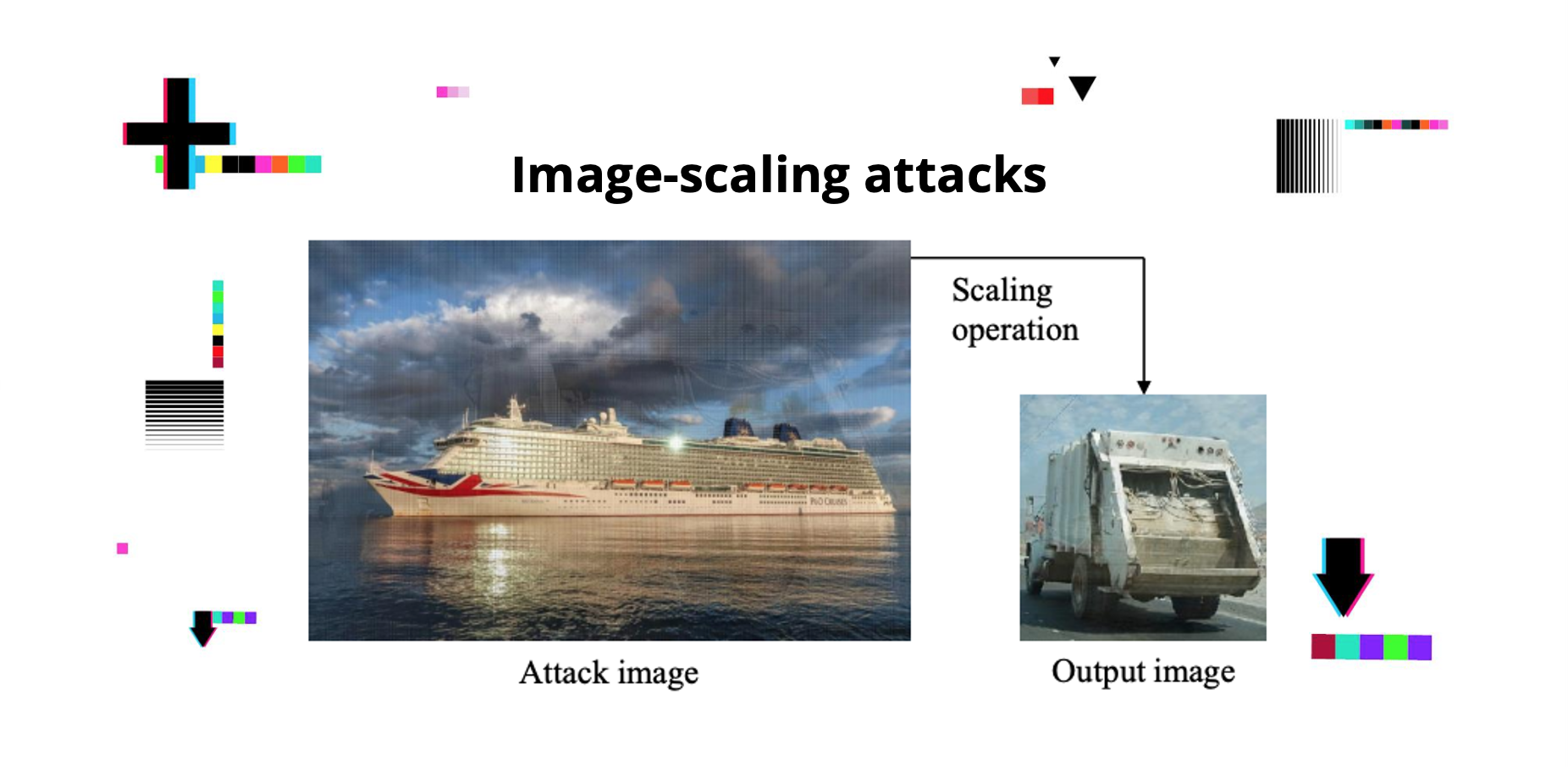

This work relates to the image signal processing (ISP) pipeline. Numerous vision devices use it as it helps make RAW-to-RGB transformations and is implemented for image processing. Researchers Junjian Li and Honglong Chen create a so-called image-scaling attack with a target on an ISP pipeline. A certain adversarial RAW can turn into an attack image of a different appearance if scaled.

The experiments demonstrate that the adversarial attacks can develop adversarial RAW data against the target ISP pipelines with high attack rates.

The researchers create an image-scaling attack targeting the ISP pipeline. As a matter of fact, the impacts of both the ISP pipeline and data preprocessing are considered in vision applications.They experiment and assess their effectiveness.

Subscribe for updates

Stay up to date with what is happening! Get a first look at news, noteworthy research and worst attacks on AI delivered right in your inbox.