AI errors can lead to a variety of incidents, which is why it is so important to monitor the safety and ethics of smart systems.

Biometric Update, March 18, 2022

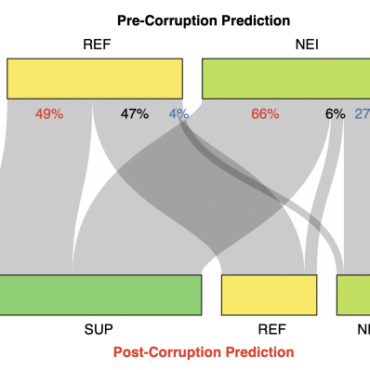

Researchers have examined whether digital photographs, especially those widely shared on the Internet, could be altered enough to poison facial recognition models trained on them.

Poisoning is considered successful if the model misidentifies the scraped images. The alterations must be crafted so finely that those harvesting biometric data cannot detect or undo them; if a better algorithm appears that can, the protection is considered broken. Most of these images were taken from social networks that prohibit scraping, a practice that has provoked a strong public reaction.

CSET, March 2022

Vulnerabilities are a pervasive problem across artificial intelligence, and if exploited they can lead to sabotage or the unauthorized disclosure of information.

At the same time, sharing information about vulnerabilities can itself help both defenders and attackers. Because machine learning models are built from data, their vulnerabilities can be hard to identify yet often easy for attackers to exploit. Attacks on ML may involve modifying input data to cause an error in an otherwise correctly functioning system, tampering with the underlying model or its training data, or stealing the model or its training data. Attackers could also exploit bugs in ML software to gain access to the computers running it.
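To make the first attack type (modifying inputs so a working model errs) a little more concrete, here is a minimal sketch. It assumes a hypothetical toy linear classifier, not any real face recognition or production system; the names `predict` and `evasion_perturb`, the weights, and the budget `eps` are all illustrative. For a linear score w·x + b, the gradient with respect to x is simply w, so nudging every feature by `eps` in the direction of sign(w) is the fast gradient sign idea in its simplest form.

```python
# Hypothetical toy linear classifier: score = w . x + b, label 1 if score > 0.
def predict(w, b, x):
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if score > 0 else 0


def sign(v):
    # Returns 1, 0 or -1 depending on the sign of v.
    return (v > 0) - (v < 0)


def evasion_perturb(w, x, eps, target):
    # Shift each feature by at most eps, in the direction that pushes the
    # linear score toward the target class (sign of the gradient, which is w).
    direction = 1 if target == 1 else -1
    return [xi + direction * eps * sign(wi) for wi, xi in zip(w, x)]


# Illustrative, made-up parameters and input.
w = [0.8, -0.5, 0.3]
b = -0.1
x = [0.5, 0.2, 0.1]

adv = evasion_perturb(w, x, eps=0.2, target=0)
print(predict(w, b, x), predict(w, b, adv))
```

Here the clean input scores about 0.23 (class 1), while each feature of the perturbed input moves by only 0.2, lowering the score by eps times the sum of |w| (0.32) and flipping the prediction to class 0. Real attacks on deep networks follow the same logic but use the model's actual gradients.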

A new review by Andrew Lohn and Wyatt Hoffman discusses several key differences between vulnerabilities in traditional software and in machine learning systems. The authors also ask how these differences should shape the processes for disclosing and remediating vulnerabilities.

Citizens express fraud concerns over deepfake technology

Digital Journal, March 18, 2022

As smart technologies have become deeply embedded in our lives, we have become highly dependent on identity verification.

This becomes a problem when risks arise, particularly those involving the confidentiality of personal information. Yet, according to some data, more than half of the US population today does not know what deepfakes are. We have already written quite a lot about deepfakes themselves: the term refers to a type of AI used to create fake media that is as realistic as possible. While some of these images or videos may be created for legitimate purposes, such as replacing a deceased actor in a film, some (and even many!) deepfakes can also be used for criminal activities.

Even so, many people have already voiced concerns about the cybersecurity risks associated with this technology. According to the survey, respondents see social networks as the area of greatest risk for deepfakes; many in particular fear that a scammer will create social media accounts in their name and defraud people online.