Carrying out attacks on machine learning models as part of the study is necessary for further successful work on potential vulnerabilities. And here is a selection of the most interesting studies for February 2022, which is a special edition on content moderation and anti-fake engine attacks that are extremely relevant now.

In connection with a number of recent events, the issue of determining the authenticity of information on the Internet has become particularly acute. Image attribution, which is the association of an image with a reliable source, has become a new tool in the fight against misinformation on the Internet. Recently, models of deep visual fingerprinting have been investigated in this regard, but it has been found that they are not resistant to hostile examples.

Therefore, researchers Maksym Andriushchenko, Xiaoyang Rebecca Li, Geoffrey Oxholm, and others set out to demonstrate how to create valid adversarial images that can easily lead to image misattribution. The researchers also paid attention to an approach to prevent stealth attacks by intruders on deep visual fingerprinting models using robust contrast learning. The models resulting from the study turned out to be much more reliable. Their efficiency was also high on unperturbed images and even when working in a database with millions of images. According to the researchers, this stability is also relevant for other types of imperceptible perturbations that are invisible during training. Finally, the researchers demonstrate the training process for a race-resistant image comparator model to detect editorial changes in matching images.

Automated fact checking is a technology that helps limit the spread of disinformation on the Internet that is as relevant today as ever. One strategy for making such decisions involves testing the claims by selecting corroborating or refuting material from textual sources. The problem may be that a similar check is required and the sources of evidence, which can also be affected by disinformation. Moreover, some modern NLP tools can create coherent, fabricated content that will only make life easier for scammers who decide to falsify data producing adversarial disinformation for fact-checkers.

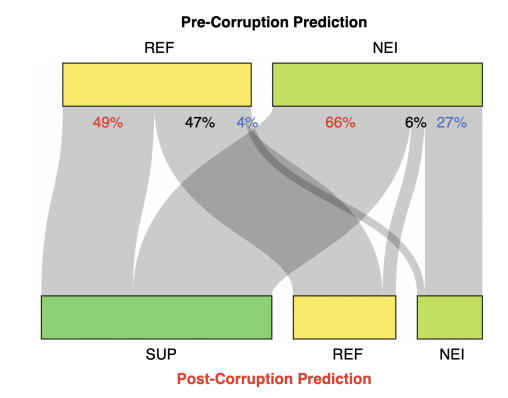

In a new study, Yibing Du, Antoine Bosselut, and Christopher D. Manning examine the sensitivity of automatic fact checkers to synthetic adversarial evidence. This happens under two conditions. The first condition is the AdversarialAddition, where documents are produced and placed in an evidence repository accessible to the fact-checking system. In the second case, we are talking about AdversarialModification – in it, the existing source of evidence documents in the repository are automatically changed.

According to the results of the study, these systems experience a significant decrease in performance during these types of attacks. In addition, the threat of modern NLG systems as disinformation generators is currently growing.

In a new paper, Shahrukh Khan, Mahnoor Shahid, and Navdeeppal Singh evaluate the adversarial robustness of BERT models trained on German hate language datasets. The work also includes two new attacks at the level of characters and words of the white box, which in turn expands the range of attacks available. Among other things, the experts compare the two new character-level defense strategies and evaluate their reliability with each other.

Subscribe for updates

Stay up to date with what is happening! Get a first look at news, noteworthy research and worst attacks on AI delivered right in your inbox.