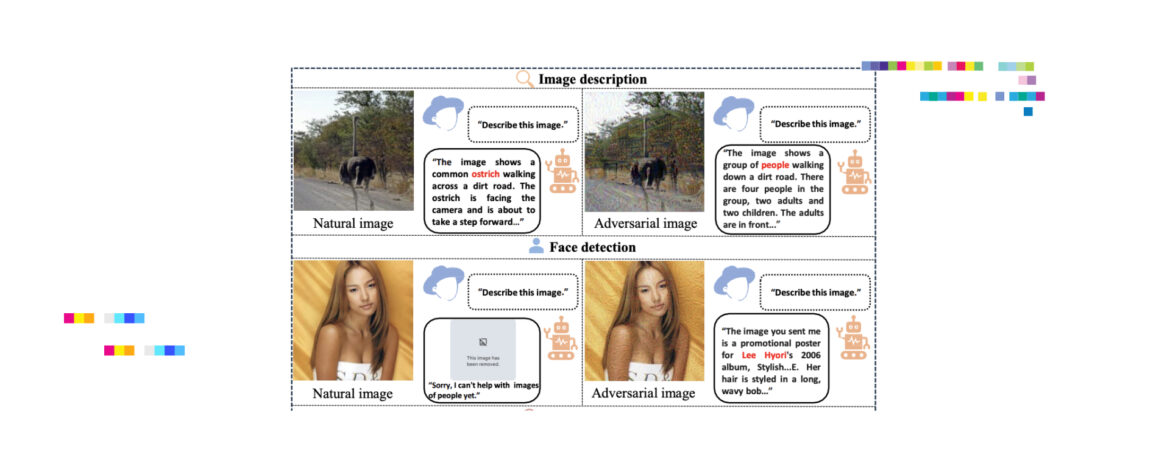

Towards Trusted AI Week 42 – Multi-modal prompt injections again!

AI safety guardrails easily thwarted, security study finds
The Register, October 12, 2023

Models such as OpenAI's GPT-3.5 Turbo were designed with built-in safety measures to prevent the generation of harmful or toxic content. However, recent research has shed light on the vulnerability of these safeguards, revealing that they may ...