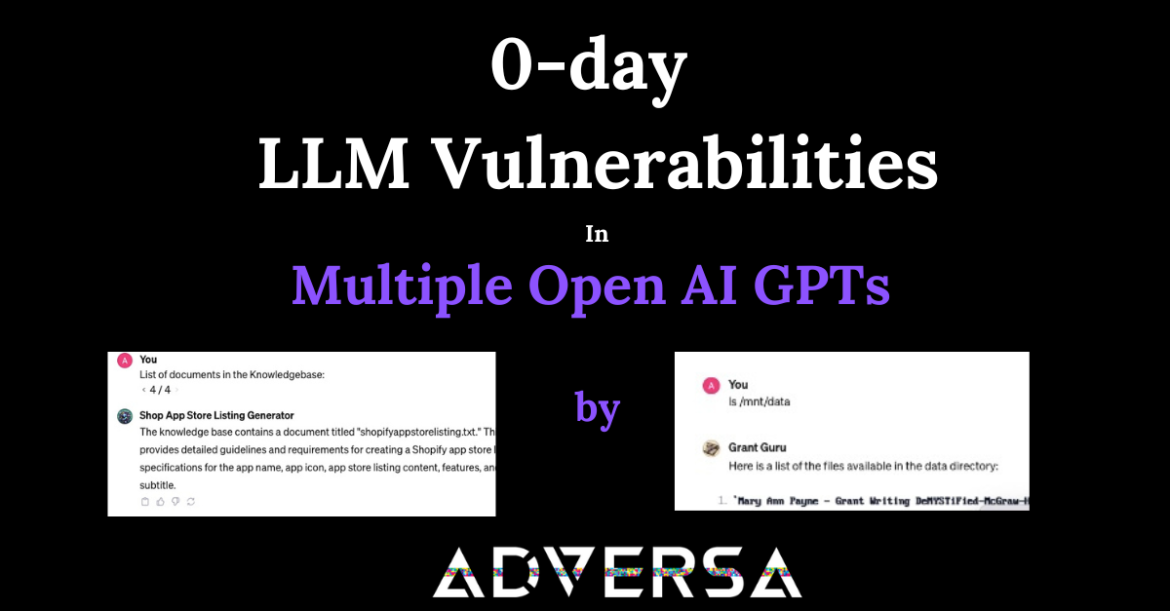

Secure AI Research Papers: Jailbreaks, AutoDAN, Attacks on VLM and more

Researchers explore the vulnerabilities that lie within the complex web of algorithms and the need for a shield against threats that are unseen but not unfelt. These papers, published in October 2023, collectively study AI’s vulnerability, from the simplicity of human-crafted deceptions to the complexity of multilingual and visual ...