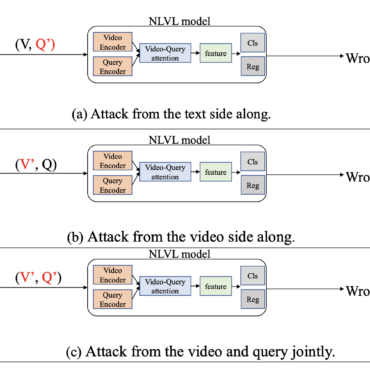

Adversarial attacks pose a real threat to the current state of AI

News Medical, December 14, 2021

Artificial intelligence models have the potential to significantly speed up the diagnosis of cancer, but they can also be vulnerable to cyberattacks.

A new study from the University of Pittsburgh simulated an attack that falsified mammogram images. The falsified images deceived both an AI model for diagnosing breast cancer and human radiologists who specialize in breast imaging. AI technology for detecting cancer has evolved rapidly over the past few years, and several breast cancer models have been approved by the US Food and Drug Administration (FDA). These systems learn to scan mammogram images and identify those most likely to be malignant, which makes it much easier for radiologists to reach a diagnosis.

At the same time, these new technologies are exposed to threats such as adversarial attacks. Potential motives include insurance fraud by health care providers looking to increase revenue, or companies trying to tweak clinical trial results in their favor. Adversarial attacks on medical images range from small manipulations to more sophisticated versions that target sensitive image content. The latter can deceive even experienced specialists.

“Certain fake images that fool AI may be easily spotted by radiologists. However, many of the adversarial images in this study not only fooled the model, but they also fooled experienced human readers. Such attacks could potentially be very harmful to patients if they lead to an incorrect cancer diagnosis,” commented Shandong Wu, Ph.D., senior author and associate professor of radiology, biomedical informatics and bioengineering at the University of Pittsburgh.

Outlook, December 17, 2021

Some social media users believe that the person in the viral video is Elon Musk’s Chinese counterpart. However, others remain skeptical.

A man who appears in a viral video has been dubbed “the Chinese Elon Musk”. The video caused shock and anxiety among many internet users and fueled rumors that the famous entrepreneur has a double. While some users are convinced that the person in the video is a lookalike of the head of Tesla, others believe that the video is a complete fake. In the video, a man who closely resembles the SpaceX boss stands next to a car. The man is apparently Chinese. The video went viral very quickly on social media, with many calling the man Yi Long Musk. It was originally posted on the video sharing platform TikTok and then spread to other social networks such as Twitter and Facebook.

“It’s a deep fake. There’s a small glitch when he speaks as the camera is panning around; it’s in the eyes and the mouth looks delayed,” one Facebook user commented.

While the video itself poses no danger and carries no deliberately false information, it is another reminder of the potential that artificial intelligence technologies have for producing fake media, and of the stir such media can create.

Unite.AI, December 14, 2021

Researchers in the UK and Canada have developed a series of adversarial black box attacks on natural language processing (NLP) systems.

The paper is titled Bad Characters: Imperceptible NLP Attacks, and was written by three researchers from the University of Cambridge and the University of Edinburgh, and a researcher from the University of Toronto. The attacks are effective against a wide variety of popular language processing frameworks, including widely deployed systems from Google, Facebook, IBM, and Microsoft. The attacks can also force systems to misclassify toxic content, poison search engine results by causing incorrect indexing, stop search engines from identifying malicious content, and even cause denial-of-service (DoS) attacks on NLP systems.

“All experiments were performed in a black-box setting in which unlimited model evaluations are permitted, but accessing the assessed model’s weights or state is not permitted. This represents one of the strongest threat models for which attacks are possible in nearly all settings, including against commercial Machine-Learning-as-a-Service (MLaaS) offerings. Every model examined was vulnerable to imperceptible perturbation attacks. We believe that the applicability of these attacks should in theory generalize to any text-based NLP model without adequate defenses in place,” the paper states.

The authors urge the NLP community to pay closer attention to adversarial attacks, which are currently of great interest in computer vision research. “[We] recommend that all firms building and deploying text-based NLP systems implement such defenses if they want their applications to be robust against malicious actors,” the researchers say.
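
To make the idea of a defense more concrete, here is a minimal Python sketch of input sanitization. It is not the authors' implementation. It assumes that the imperceptible perturbations include invisible characters (such as zero-width spaces), bidirectional control characters, and deletion control characters (such as backspace), and that the application has no legitimate need for them. The function names `is_suspicious`, `find_suspicious`, and `sanitize` are hypothetical and exist only for this illustration.

```python
import unicodedata

# Whitespace control characters that ordinary text legitimately contains.
ALLOWED_CONTROLS = {"\n", "\r", "\t"}


def is_suspicious(ch: str) -> bool:
    """Return True for Unicode format (Cf) and control (Cc) characters.

    Cf covers zero-width characters and bidirectional overrides;
    Cc covers backspace and delete. Assumption: the application
    does not need any of these in user-supplied text.
    """
    if ch in ALLOWED_CONTROLS:
        return False
    return unicodedata.category(ch) in {"Cf", "Cc"}


def find_suspicious(text: str) -> list[tuple[int, str]]:
    """List (index, code point) pairs for every suspicious character."""
    return [
        (i, f"U+{ord(ch):04X}")
        for i, ch in enumerate(text)
        if is_suspicious(ch)
    ]


def sanitize(text: str) -> str:
    """Drop suspicious characters before the text reaches the model."""
    return "".join(ch for ch in text if not is_suspicious(ch))


if __name__ == "__main__":
    # A zero-width space and a right-to-left override hidden in the text.
    poisoned = "tox\u200bic com\u202ement"
    print(find_suspicious(poisoned))  # [(3, 'U+200B'), (10, 'U+202E')]
    print(sanitize(poisoned))         # toxic comment
```

This filter is deliberately blunt. It would also strip the zero-width joiners that some scripts and emoji sequences rely on, and it does nothing against visually similar letters from other alphabets (homoglyphs), which would need a separate mapping step.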