Deepfakes appeared long ago, but their spread is only just beginning

The New York Times, October 28, 2021

Clearview AI has collected more than 10 billion photos from the public internet to build a facial recognition tool, intended for sale to law enforcement agencies to help them identify unknown people.

The product has drawn heavy criticism, including accusations that collecting the photos was illegal and unethical and that the tool's accuracy had never been verified. Two years after law enforcement started using the app, Clearview's algorithm went through third-party testing for the first time, and the results were surprisingly good: Clearview ranked in the top ten for accuracy, alongside Russia's NTechLab, China's SenseTime and other better-known companies.

However, the test measured how accurately the algorithm matches two different photographs of the same person, not how accurately it can find an unknown face among 10 billion photographs.
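A rough back-of-the-envelope calculation shows why a good score on matching two photos does not settle how well a search across 10 billion photos would work. The sketch below assumes a hypothetical false match rate (FMR) for a single comparison and treats every comparison against the photo collection as independent, which real systems do not satisfy exactly; the rate and the function names are illustrative assumptions, not figures or methods from Clearview or the test.

```python
import math


def expected_false_matches(fmr: float, gallery_size: int) -> float:
    """Expected number of impostor matches when one probe face is compared
    against every face in the gallery (simplifying assumption: independence)."""
    return fmr * gallery_size


def prob_any_false_match(fmr: float, gallery_size: int) -> float:
    """Probability that at least one of gallery_size independent impostor
    comparisons is a false match, i.e. 1 - (1 - fmr) ** gallery_size,
    computed with log1p/expm1 to stay accurate for tiny fmr values."""
    return -math.expm1(gallery_size * math.log1p(-fmr))


if __name__ == "__main__":
    fmr = 1e-6  # hypothetical one-to-one false match rate, not a published figure
    for n in (1, 1_000, 1_000_000, 10_000_000_000):
        print(
            f"gallery={n:>14,}  "
            f"expected false matches={expected_false_matches(fmr, n):>10.3f}  "
            f"P(at least one)={prob_any_false_match(fmr, n):.4f}"
        )
```

Under these assumptions, a single comparison almost never produces a false match, yet a search across a 10-billion-photo collection would be expected to return on the order of 10,000 impostor matches per query, which is why identification accuracy has to be measured separately from one-to-one verification.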

The National Institute of Standards and Technology, a federal agency, tests facial recognition vendors' algorithms every few months. Clearview submitted its algorithm to the verification test, which covers the kind of facial recognition someone might use to unlock a smartphone.

Clearview AI CEO Hoan Ton-That called the results an unmistakable validation of his company's product.

Clearview AI has also run into legal trouble: it has been sued in state and federal courts in Illinois and Vermont for collecting people's photographs without their permission and searching them with facial recognition. Other vendors have criticized the company, expressing concern that the controversy around Clearview AI could cause problems for the facial recognition industry as a whole.

Live Science, October 30, 2021

The infamous 1938 "War of the Worlds" radio broadcast may have been an early forerunner of today's deepfakes.

Orson Welles's reworking of the classic science-fiction tale is considered by some to be a very early example of a deepfake, a term that refers to synthetic media. The Oct. 30, 1938, broadcast recast H.G. Wells's novel as a real-time Martian attack on the United States. It aired on the eve of World War II and allegedly played on listeners' anxiety about the state of the world at the time.

The episode reportedly caused widespread panic, with listeners believing a real attack was under way, even though the broadcast stated several times that the story was fictional. Other sources, however, say the audience was too small to cause genuine mass panic; either way, the episode went down in history. Whatever the true scale of the panic, a new podcast argues that the manipulation of information in the broadcast still teaches lessons that are relevant today. Media literacy is a complex and rapidly evolving field, and the University of Michigan has described the differences between fake news, misinformation and disinformation, categories of manipulation that have been widely discussed in recent years.

"We don't always know who created something, why they made it, and whether it's credible," notes CommonSense.org, a nonprofit that teaches media literacy skills to children.

Channel Asia, October 27, 2021

Criminals are increasingly targeting authentication technologies and refining their methods for defeating them.

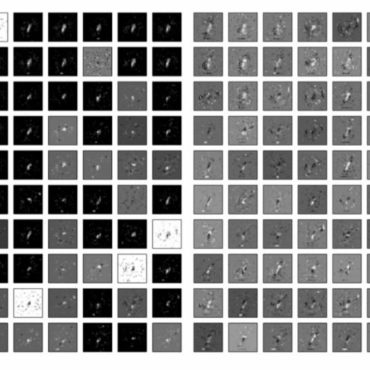

Although deepfake attacks are not yet as widespread as some other types of attack, they are expected to become more popular in the near future, and this is already being discussed on the darknet. There, one can already find complete, publicly available tutorials on how to carry out such attacks, covering both visual and audio deepfakes.

“It’s often been said that pornography drives technology adoption and that was certainly true of deepfakes when they first appeared. Now the technology is catching on in other less salacious circles – notably with organised cyber crime groups,” commented Mark Ward, senior research analyst at the Information Security Forum.

In particular, interest in deepfakes has been seen among malicious groups that specialize in sophisticated social engineering attacks. These groups typically compromise business email accounts and trick company employees into fraudulently handing over confidential financial information belonging to the organization. The potential of deepfakes in such attacks is enormous: with their help, attackers will be able to carry out even more complex operations, causing large financial and reputational losses. Another likely area for deepfake attacks is bypassing biometric identification. If attackers succeed in mimicking people's voices and faces well enough to gain access to critical information and funds, companies could likewise suffer huge losses.