Knowledge about artificial intelligence and its security needs to be constantly improved

Forbes, October 14, 2021

AI-assisted voice cloning is reportedly being used in large-scale robberies in the UAE, amid warnings about cybercriminals' use of the new technology.

In 2020, fraudsters carried out a carefully planned scheme using “deep voice” technology: a bank manager in the United Arab Emirates received a call from a man whose voice he recognized as that of a company director he had spoken with before. At least $400,000 of the stolen funds was transferred to accounts in the United States. The elaborate scheme is believed to have involved at least 17 people, who sent the stolen money to bank accounts around the world.

Although this is only the second known case of fraud using artificial voice manipulation, it was far more successful than the first. In that earlier case, a year before, in 2019, scammers tried to steal $240,000 from the director of a British company.

“Audio and visual deep fakes represent the fascinating development of 21st century technology yet they are also potentially incredibly dangerous posing a huge threat to data, money and businesses,” says Jake Moore, a former police officer with the Dorset Police Department in the U.K. and a current cybersecurity expert at security company ESET.

Technology Review, October 12, 2021

Researchers are increasingly doubtful that deep learning models are “black boxes” whose inner workings are impossible to understand.

The website This Person Does Not Exist produces human faces that look hyper-realistic but are completely fake, and it can generate an endless supply of them. The faces are created by a generative adversarial network (GAN), a type of AI that learns to produce realistic but fake examples of the data it is trained on. Such faces are already beginning to appear in films and advertisements. However, a paper titled This Person (Probably) Exists shows that these artificial faces are not as unique as they might seem at first glance: some bear a strong resemblance to real people whose photos were used to train the system. The work is one of a series of studies challenging the popular idea that neural networks are black boxes that reveal nothing about what goes on inside them.

Ryan Webster and colleagues at the University of Caen Normandy in France used a membership attack to expose the training data. Such attacks exploit subtle differences between the way a model processes data it was trained on, and has therefore encountered thousands of times, and data it has never seen.
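To make the idea concrete, here is a minimal Python sketch of a generic loss-threshold membership attack. It is not the Caen team's method for GAN-generated faces; the toy 1-nearest-neighbour "model", the synthetic data and the threshold value are all hypothetical assumptions chosen for illustration. It shows the core intuition: a model that has memorised its training set has near-zero loss on those examples, so an attacker who can measure the loss can tell members from non-members.

```python
import math
import random


def make_data(n, rng):
    """Synthetic 1-D regression data: y = sin(x) + Gaussian noise (illustrative only)."""
    xs = [rng.uniform(0.0, 10.0) for _ in range(n)]
    return [(x, math.sin(x) + rng.gauss(0.0, 0.3)) for x in xs]


def train_memorising_model(train):
    """Hypothetical toy 'model': a 1-nearest-neighbour regressor.

    It reproduces every training label exactly, an extreme form of the
    overfitting that membership attacks exploit.
    """
    def predict(x):
        nearest = min(train, key=lambda point: abs(point[0] - x))
        return nearest[1]
    return predict


def loss(model, x, y):
    """Squared error of the model on a single labelled example."""
    return (model(x) - y) ** 2


def loss_threshold_attack(model, samples, threshold):
    """Guess 'was in the training set' whenever the loss is below the threshold."""
    return [loss(model, x, y) < threshold for x, y in samples]


if __name__ == "__main__":
    rng = random.Random(0)
    members = make_data(50, rng)      # data the model was trained on
    non_members = make_data(50, rng)  # data the model has never seen
    model = train_memorising_model(members)

    threshold = 1e-6  # assumed value; a real attacker would have to calibrate it
    tpr = sum(loss_threshold_attack(model, members, threshold)) / len(members)
    fpr = sum(loss_threshold_attack(model, non_members, threshold)) / len(non_members)
    print(f"true-positive rate {tpr:.2f}, false-positive rate {fpr:.2f}")
```

Because this toy model reproduces its training labels exactly, every member has zero loss and is flagged, while unseen points almost never fall below the threshold. Real models overfit far less dramatically, so practical attacks depend on much subtler differences in loss or confidence.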

The results stunned the researchers: in many cases, they found photographs of real people in the training data that appeared to match the fake faces generated by the GAN, revealing the identities of the people on whom the AI had been trained.

AZoQuantum, October 13, 2021

Over the past decade, artificial intelligence has made great progress, including in predicting protein structures, and AI has also advanced significantly in combination with quantum computing, in the form of quantum machine learning.

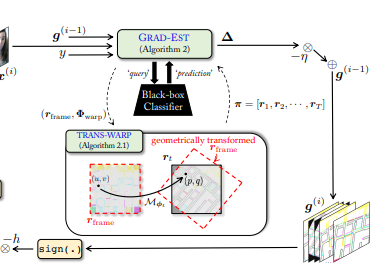

The latter field, however, is still at an early stage of development. In conventional machine learning, the vulnerability of deep neural network classifiers to adversarial examples has been actively studied since 2004. These classifiers can be highly vulnerable: adding a carefully crafted perturbation to an original, legitimate sample can mislead the classifier into making an incorrect prediction. Recent studies, using both theoretical analysis and numerical simulations, have shown that quantum classifiers are vulnerable in a similar way.
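The mechanism is easiest to see in the classical setting. The following Python sketch is a minimal illustration under stated assumptions, not code from the studies mentioned. It applies the fast gradient sign method (FGSM), a well-known classical attack, to a hypothetical linear classifier whose weights are made up. For a linear model, a step of size eps against the sign of the weights changes the score by eps times the L1 norm of the weights, so a small per-feature change can be enough to cross the decision boundary.

```python
def sign(v):
    """Return -1, 0 or +1 according to the sign of v."""
    return (v > 0) - (v < 0)


def score(w, b, x):
    """Linear decision function w . x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict(w, b, x):
    """Class +1 if the score is non-negative, otherwise class -1."""
    return 1 if score(w, b, x) >= 0 else -1


def fgsm_perturb(w, x, y, eps):
    """One fast-gradient-sign step against a linear classifier.

    With logistic loss log(1 + exp(-y * (w . x + b))), the gradient with
    respect to x is a positive multiple of -y * w, so its sign is
    -y * sign(w). Each feature moves by at most eps (an L-infinity budget).
    """
    return [xi - eps * y * sign(wi) for xi, wi in zip(x, w)]


def min_flip_budget(w, b, x, y):
    """Budget at which the step above drives the score to exactly zero.

    The step changes the score by -y * eps * ||w||_1; any larger budget
    pushes the sample across the decision boundary.
    """
    return y * score(w, b, x) / sum(abs(wi) for wi in w)


if __name__ == "__main__":
    w, b = [0.5, -1.0, 2.0], 0.1  # hypothetical trained weights
    x, y = [1.0, 0.2, 0.3], 1     # a legitimate, correctly classified sample
    print("budget needed:", min_flip_budget(w, b, x, y))
    for eps in (0.2, 0.3):
        x_adv = fgsm_perturb(w, x, y, eps)
        print(eps, x_adv, predict(w, b, x_adv))
```

With these example weights the sample's score is 1.0 and the L1 norm of the weights is 3.5, so a budget of about 0.29 is needed: a step of 0.2 leaves the label at +1, while a step of 0.3 flips it to -1.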

In particular, in a new research article published in the Beijing-based journal National Science Review, researchers from the Institute for Interdisciplinary Information Sciences (IIIS) at Tsinghua University, China, study the universality properties of adversarial examples and perturbations for quantum classifiers for the first time. The work addresses two questions: whether there are universal adversarial examples that can fool different quantum classifiers, and whether there are universal adversarial perturbations that, when added to different legitimate input samples, turn them into adversarial examples for a given quantum classifier.
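As a classical analogue of the second question, the following sketch constructs a single universal perturbation: one vector that, added to every input in a batch, pushes all of them into the wrong class. It is only an illustration, using a hypothetical linear classifier with made-up weights in Python; it is not the quantum classifiers or the method studied by the IIIS team, and the `margin` parameter is an assumed safety buffer. For a linear model the construction is simple, because moving against the sign of the weights lowers every input's score by the same amount.

```python
def sign(v):
    """Return -1, 0 or +1 according to the sign of v."""
    return (v > 0) - (v < 0)


def score(w, b, x):
    """Linear decision function w . x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict(w, b, x):
    """Class +1 if the score is non-negative, otherwise class -1."""
    return 1 if score(w, b, x) >= 0 else -1


def universal_perturbation(w, b, inputs, margin=1e-3):
    """One shared perturbation that pushes every input into class -1.

    Adding -eps * sign(w) lowers every score by eps * ||w||_1, so choosing
    eps just above (largest positive score) / ||w||_1 flips all inputs at
    once. Assumes w is not all zeros.
    """
    l1 = sum(abs(wi) for wi in w)
    positive_scores = [score(w, b, x) for x in inputs if predict(w, b, x) == 1]
    if not positive_scores:
        return [0.0] * len(w)  # nothing needs flipping
    eps = max(positive_scores) / l1 + margin
    return [-eps * sign(wi) for wi in w]


def add_perturbation(x, delta):
    """Element-wise x + delta."""
    return [xi + di for xi, di in zip(x, delta)]


if __name__ == "__main__":
    w, b = [0.5, -1.0, 2.0], 0.1  # hypothetical trained weights
    inputs = [[1.0, 0.2, 0.3], [0.0, 0.0, 1.0], [2.0, 1.0, 0.5], [-1.0, 1.0, 0.0]]
    delta = universal_perturbation(w, b, inputs)
    print("perturbation:", delta)
    print("before:", [predict(w, b, x) for x in inputs])
    print("after: ", [predict(w, b, add_perturbation(x, delta)) for x in inputs])
```

With the example weights, the first three inputs start in class +1, and after the same perturbation is added to each of them, all four inputs are classified as -1.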

Overall, the results reveal a crucial aspect of the universality of adversarial attacks on quantum machine learning systems. This may serve as a valuable guide for future practical applications of both near-term and future quantum technologies in machine learning or, more broadly, artificial intelligence.