Unite.AI, September 17, 2021

Researchers from China have introduced a new copyright protection method for image datasets used to train computer vision models.

The method first “watermarks” the images; the “clean” images are then decrypted through a cloud platform for authorized users only. The researchers demonstrated that training a machine learning model on the protected images leads to a catastrophic drop in the model's accuracy. In tests on two popular open source image datasets, the system significantly reduced the accuracy of models trained on the protected data.

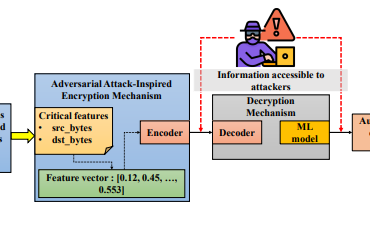

The work is authored by researchers at the Nanjing University of Aeronautics and Astronautics, and covers the use of a Dataset Management Cloud Platform (DMCP), a remote authentication framework that provides telemetry-based pre-launch validation. The protected image is created using feature perturbations, an adversarial attack technique developed at Duke University in North Carolina in 2019. The unmodified image is embedded in the distorted image through block pairing and block transformation. The sequence containing information about the paired blocks is then inserted into a temporary intermediate image using AES encryption; the key is later retrieved from the DMCP during authentication. The least significant bit (LSB) steganographic algorithm is then used to embed the key. This process is called modified reversible image transformation (mRIT). During decryption the mRIT procedure is essentially reversed, and the “clean” image is restored for use in training sessions.
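
To make the least significant bit step more concrete, the sketch below hides a short payload (for example, a 16-byte key) in the lowest bit of successive pixel bytes and then recovers it. This is a generic illustration of LSB steganography, not the authors' mRIT implementation: the function names `embed_lsb` and `extract_lsb`, the flat byte layout of the image, and the sample payload are all assumptions made for the example.

```python
def embed_lsb(pixels: bytes, payload: bytes) -> bytes:
    """Hide payload bits in the least significant bit of successive pixel bytes.

    Hypothetical helper for illustration; `pixels` is assumed to be a flat
    sequence of 8-bit channel values.
    """
    # Unpack the payload into individual bits, most significant bit first.
    bits = [(byte >> shift) & 1 for byte in payload for shift in range(7, -1, -1)]
    if len(bits) > len(pixels):
        raise ValueError("payload too large for cover image")
    out = bytearray(pixels)
    for i, bit in enumerate(bits):
        # Clear the lowest bit of the pixel byte, then write the payload bit.
        out[i] = (out[i] & 0xFE) | bit
    return bytes(out)


def extract_lsb(pixels: bytes, n_bytes: int) -> bytes:
    """Recover n_bytes of payload from the lowest bit of each pixel byte."""
    out = bytearray()
    for i in range(n_bytes):
        value = 0
        for pixel in pixels[i * 8:(i + 1) * 8]:
            value = (value << 1) | (pixel & 1)
        out.append(value)
    return bytes(out)


# Usage: a 256-byte stand-in "image" and a 16-byte stand-in key (both made up).
cover = bytes(range(256))
secret = b"0123456789abcdef"
stego = embed_lsb(cover, secret)
recovered = extract_lsb(stego, len(secret))
```

Because only the lowest bit of each byte changes, no pixel value moves by more than 1, which is why this kind of embedding is hard to see in the resulting image.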

The Register, September 16, 2021

Some makeup applied to your face can help you avoid recognition by artificial intelligence systems, the researchers concluded.

The new method is a kind of adversarial attack that fine-tunes the input data to trick machine learning algorithms into incorrectly identifying objects in that data. The experiment, conducted by researchers at Ben-Gurion University of the Negev in Israel and Japanese IT giant NEC, is still at the laboratory level. The project targets an artificial intelligence system that is treated as a black box: it scans faces for banned people and sends notifications when suspicions arise.

“In this paper, we propose a dodging adversarial attack that is black-box, untargeted, and based on a perturbation that is implemented using natural makeup,” the researchers wrote. “Since natural makeup is physically inconspicuous, its use will not raise suspicion. Our method finds a natural-looking makeup, which, when added to an attacker’s face, hides his/her identity from a face recognition system.”

San Diego Union Tribune, September 15, 2021

Truepic, a San Diego-based company that combats “deep fakes” and “cheap fakes”, has developed a software platform that can authenticate digital photos and videos on the Internet. Founded in 2015, the company is one of a group of firms battling hard-to-detect AI-based technologies that manipulate digital photos and videos.

“As the tools to generate entirely synthetic photos and videos continue to become widely available, this distrust will continue to grow, ultimately casting doubt around the authenticity of all digital media,” commented Jeffrey McGregor, chief executive of Truepic.

The company's approach is unusual: Truepic uses cryptography, transparency, and other techniques to help identify minor edits and distinguish them from major manipulations.

“From the instant that light hits the camera sensor, we can secure the capture operation, so we know that the pixels, date, time and location are authentic,” explained Craig Stack, founder and president of Truepic. “Then we seal that information into the file and can verify that what came out of the camera hasn’t been modified.”
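
The “seal and verify” idea in the quote can be illustrated with a small sketch. Truepic's actual design is not described in the article, so everything here is an assumption: the function names `seal_capture` and `verify_capture`, the metadata fields, and the use of an HMAC with a shared secret key as a stand-in for whatever cryptographic scheme the company really uses (a production system would more likely rely on public-key signatures).

```python
import hashlib
import hmac
import json


def seal_capture(pixels: bytes, metadata: dict, key: bytes) -> dict:
    """Bind pixel data and capture metadata together under a keyed tag.

    Hypothetical helper: hashes the pixels, then authenticates the hash plus
    metadata with HMAC-SHA256.
    """
    record = {
        "pixel_sha256": hashlib.sha256(pixels).hexdigest(),
        "metadata": metadata,
    }
    # Serialize with sorted keys so the same record always yields the same bytes.
    message = json.dumps(record, sort_keys=True).encode("utf-8")
    tag = hmac.new(key, message, hashlib.sha256).hexdigest()
    return {"record": record, "tag": tag}


def verify_capture(pixels: bytes, sealed: dict, key: bytes) -> bool:
    """Return True only if neither the pixels nor the sealed record changed."""
    record = sealed["record"]
    message = json.dumps(record, sort_keys=True).encode("utf-8")
    expected = hmac.new(key, message, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, sealed["tag"]):
        return False  # the metadata or the stored hash was altered
    actual = hashlib.sha256(pixels).hexdigest()
    return hmac.compare_digest(actual, record["pixel_sha256"])


# Usage with made-up values: seal at capture time, verify later.
device_key = b"example-device-key"
image = b"\x10\x20\x30\x40"
info = {"date": "2021-09-15", "time": "12:00:00", "location": "San Diego"}
sealed = seal_capture(image, info, device_key)
is_authentic = verify_capture(image, sealed, device_key)
```

In this sketch, changing a single pixel byte or any metadata field after sealing makes verification fail, which mirrors the claim that the file can be checked for modification after it leaves the camera.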