AI does not serve only good ends. Adversaries can use it to advance their attacks

Facebook apologized for an error made by its AI-based software

Facebook representatives apologized for the company’s artificial intelligence software after the following incident: the system began offering “videos about primates” to users who had previously watched videos featuring Black men.

After the incident, Facebook turned off the topic recommendation function and said it was investigating the cause of the error. A Facebook spokesperson called the automated recommendation an “unacceptable error” and apologized to the users who encountered it.

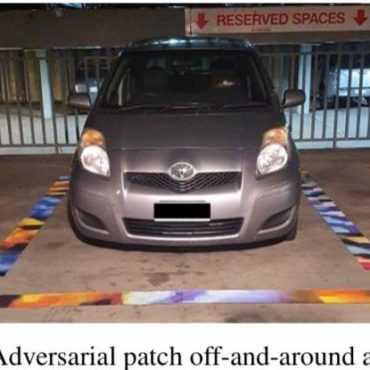

Because machine learning models are widely used in many critical applications, serious questions arise about their security. Adversarial machine learning has recently become an especially active topic that is drawing attention from many researchers.

Researchers at Carnegie Mellon University and the KAIST Cybersecurity Research Center recently introduced a new technique that uses unsupervised learning to improve on existing methods of detecting adversarial attacks. The technique draws on ML explainability methods to detect input data that may have undergone adversarial perturbation. The method was presented at the Adversarial Machine Learning Workshop of the ACM Conference on Knowledge Discovery and Data Mining 2021.
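The article does not describe the researchers' method in detail, so the following is only a toy illustration of the general idea, not their implementation. It assumes a hypothetical linear model (`WEIGHTS`), uses the per-feature contribution `w_i * x_i` as a stand-in explanation, and learns what "normal" explanations look like from unlabeled benign inputs alone. All names and the sample data are made up for the sketch.

```python
import math

# Hypothetical linear model; a real system would explain a trained network.
WEIGHTS = [2.0, -1.0, 0.5]

def explain(x):
    """Per-feature contribution to the model score (exact for a linear model)."""
    return [w * xi for w, xi in zip(WEIGHTS, x)]

def fit_profile(benign_inputs):
    """Learn, without labels, the typical explanation of benign inputs."""
    expl = [explain(x) for x in benign_inputs]
    n, dims = len(expl), len(expl[0])
    means = [sum(e[i] for e in expl) / n for i in range(dims)]
    stds = [
        math.sqrt(sum((e[i] - means[i]) ** 2 for e in expl) / n) or 1.0
        for i in range(dims)
    ]
    profile = {"means": means, "stds": stds, "threshold": 0.0}
    # Flag anything whose explanation is more unusual than every benign sample.
    profile["threshold"] = max(anomaly_score(x, profile) for x in benign_inputs)
    return profile

def anomaly_score(x, profile):
    """Root-mean-square z-score of the explanation against the benign profile."""
    z = [
        (a - m) / s
        for a, m, s in zip(explain(x), profile["means"], profile["stds"])
    ]
    return math.sqrt(sum(v * v for v in z) / len(z))

def is_suspicious(x, profile):
    return anomaly_score(x, profile) > profile["threshold"]

# Made-up benign samples and one input with an unusual third feature.
BENIGN = [
    [1.0, 2.0, 0.5],
    [1.1, 1.9, 0.4],
    [0.9, 2.1, 0.6],
    [1.0, 2.2, 0.5],
    [1.2, 1.8, 0.5],
]
PROFILE = fit_profile(BENIGN)

if __name__ == "__main__":
    print(is_suspicious([1.0, 2.0, 0.5], PROFILE))  # a benign training sample
    print(is_suspicious([1.0, 2.0, 3.0], PROFILE))  # an unusual input
```

A practical detector would likely replace the mean and standard deviation profile with a richer unsupervised model and the linear contributions with an explainability method suited to deep networks. The structure stays the same: explain, model the benign explanations, then flag outliers.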

According to specialists from Duke University, there is a new way to protect artificial intelligence systems from adversarial image-modification attacks. Computer vision systems are at the root of a number of brilliant new automated technologies, and for this reason they are increasingly targeted by attackers. Some of these attacks can be really dangerous.

According to the researchers, the problem often lies in how the algorithms are trained, and adding a few imaginary numbers to the mix could fix it. The research focuses on gradient regularisation, a training technique designed to reduce the “steepness” of the learning terrain.

“This reduces the number of solutions that it could arrive at, which also tends to decrease how well the algorithm actually arrives at the correct answer. That’s where complex values can help. Given the same parameters and math operations, using complex values is more capable of resisting this decrease in performance,” comments Eric Yeats, a doctoral student at Duke University.
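To make gradient regularisation more concrete, here is a minimal sketch under simplifying assumptions. It uses a tiny real-valued logistic model rather than the complex-valued networks from the Duke research, and it penalises the squared gradient of the loss with respect to the input, which is one common form of the technique. The dataset, the function names, and the weight `lam` are all invented for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss_and_input_grad(params, x, y):
    """Cross-entropy loss of a logistic model and its gradient w.r.t. the input x."""
    w, b = params[:-1], params[-1]
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    loss = -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    grad_x = [(p - y) * wi for wi in w]  # d(loss)/d(x) for this model
    return loss, grad_x

def mean_sq_input_grad(params, data):
    """Average squared input-gradient norm: the 'steepness' being penalised."""
    total = 0.0
    for x, y in data:
        _, grad_x = loss_and_input_grad(params, x, y)
        total += sum(g * g for g in grad_x)
    return total / len(data)

def objective(params, data, lam):
    """Mean loss plus lam times the steepness penalty."""
    mean_loss = sum(loss_and_input_grad(params, x, y)[0] for x, y in data) / len(data)
    return mean_loss + lam * mean_sq_input_grad(params, data)

def train(data, lam, steps=300, lr=0.5, h=1e-5):
    """Plain gradient descent; parameter gradients via central differences."""
    params = [0.0] * (len(data[0][0]) + 1)  # weights followed by bias
    for _ in range(steps):
        grads = []
        for i in range(len(params)):
            up, down = params[:], params[:]
            up[i] += h
            down[i] -= h
            grads.append(
                (objective(up, data, lam) - objective(down, data, lam)) / (2 * h)
            )
        params = [p - lr * g for p, g in zip(params, grads)]
    return params

# Made-up one-dimensional dataset with overlapping classes.
DATA = [([-2.0], 0), ([-1.0], 0), ([0.5], 0), ([-0.5], 1), ([1.0], 1), ([2.0], 1)]

if __name__ == "__main__":
    for lam in (0.0, 1.0):
        params = train(DATA, lam)
        print(lam, mean_sq_input_grad(params, DATA))
```

On this toy data the regularised run ends with a flatter loss surface around the inputs, at the price of a smaller weight and a less confident fit. That trade-off is the performance decrease the quote above refers to.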