Machine learning has come a long way, but it needs to meet safety criteria

Synced, August 10, 2021

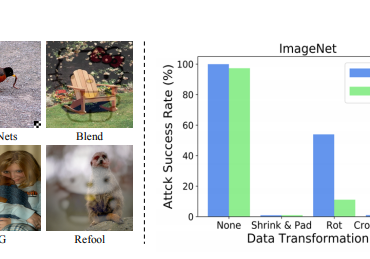

Researchers have proposed the Feature Importance-Aware Attacks able so significantly enhance the transferability of adversarial examples.

Deep neural networks are increasingly used in real-world applications, so their security and resistance to external threats is becoming a burning issue. In particular, the issue is especially acute in critical areas for example in autonomous transport, when health and even human life can be threatened.

For example, the so-called transfer-based attacks, which refer to the black-box method, are of particular concern as they are more flexible and practical than other attacks.

Researchers from Zhejiang University, Wuhan University and Adobe Research demonstrated the Feature Importance-Aware Attacks (FIA) to keep this question up-to-date. This greatly enhances the transferability of adversarial examples, surpassing the efficiency of modern available transferable attack methods.

Science, August 13, 2021

Machine learning has become widespread today, but before you can safely apply ML technology, you need to make sure that smart systems are truly trustworthy.

Machine learning has advanced significantly over the past decade, and it continues to evolve. In addition to simple tasks, machine learning is increasingly being posed with tasks related to rather critical issues, such as areas, mistakes in which can directly affect the life and health of citizens. Therefore, before deploying machine learning in critical areas such as health or autonomous transportation, you need to make sure that smart systems meet the necessary criteria for safety and trustworthiness.

Even at the stage of developing machine learning models, it is necessary to provide protection against several types of attacks at once, for example, from Poisonong or from Adversarial manipulation.

The article examines the main modern threats to machine learning, and also touches on the questions of what criteria the ML model must meet before their use can be called safe and trustworthy.

Penn State News, July 28, 2021

Although online fake news detectors and spam filters are becoming more sophisticated, scammers manage to trick them into inventing new techniques, including the “universal trigger” technique.

This method is based on learning and consists in tricking an indefinite amount of input data with a specific phrase or set of words. As a result of a successful attack, there will be more fake news in your feed and more spam in your inbox.

In contrast, researchers at the Penn State College of Information Sciences and Technology have come up with a ML framework that can effectively defend against similar types of attacks in natural language while processing applications 99% of the time.

The model is called DARCY and is based on a cybersecurity concept of a «honeypot» that catches potential attacks on natural language processing applications, including fake news detectors and spam filters.

“Attackers try to find these universal attack phrases, so we try to make it very attractive for them to find the phrases that we already set,” commented Thai Le, doctoral student of information sciences and technology. “We try to make the attacking job very easy for them, and then they fall into a trap.”