The face recognition system opens up a number of possibilities for us, while being extremely vulnerable

Daily News, July 15, 2021

It’s no secret that face recognition systems still raise a number of questions in society. Even so, they can be of significant help to law enforcement officials. That benefit, however, does not account for a number of serious security problems in these systems that can threaten public safety.

At the heart of the problem is the basic insecurity of the biometric data used for identification in facial recognition systems: as soon as a leak occurs, the data can be compromised.

And while you can easily open a new bank account after a financial data leak, acquiring a new face is no easy task. If your face authentication credentials are stolen, an attacker can use them in a wide variety of ways, from blackmail and fraud to tracking your location via a mobile device.

We can all do at least a little today to protect ourselves: for example, use multi-step authentication and avoid relying on facial recognition to protect critical data.

Dark Reading, July 12, 2021

Artificial intelligence is used today in many fields of human activity – from trade to healthcare. AI and cybersecurity are very often mentioned in the same context, and according to the author, the two areas are worth considering together from three points of view: AI used by defenders, AI used by attackers, and adversarial AI.

On the defensive side, machine learning and deep learning are very often used to develop new security systems. For example, smart systems make it possible to play out potential malicious scenarios in a laboratory environment, which helps prevent them from occurring in real life.

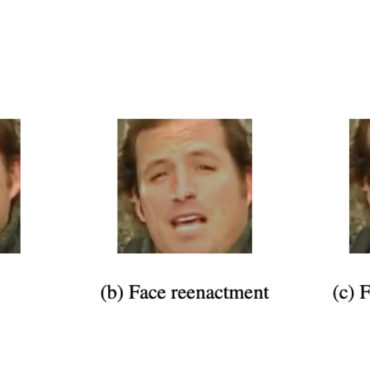

Artificial intelligence is also very often used by cybercriminals, because smart systems benefit them too, for instance economically: they greatly reduce the cost of attacks. Deepfake social engineering attacks powered by AI-based technologies are also expected in the future. In reality, though, AI can be used at almost every stage of an attack.

Finally, speaking about adversarial AI, it is worth saying that AI can be deceived quite easily – sometimes it only takes a few changed pixels for the system to produce an incorrect result.
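To make the "few changed pixels" idea concrete, here is a minimal sketch using a toy linear classifier over a 64-pixel image. It is not a real face recognition model, and every name in it (`score`, `predict`, `few_pixel_attack`, the weights and the bias) is a hypothetical chosen for illustration. The sketch assumes the attacker knows the model's weights and simply pushes the most influential pixels to their extreme values until the predicted label flips.

```python
# Toy illustration only: a linear classifier over a flattened 8x8 grayscale
# image with pixel values in [0, 1]. All names and numbers are hypothetical.

def score(weights, bias, pixels):
    """Weighted sum of the pixels plus a bias term."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias

def predict(weights, bias, pixels):
    """Label 1 if the score is positive, otherwise label 0."""
    return 1 if score(weights, bias, pixels) > 0 else 0

def few_pixel_attack(weights, bias, pixels, max_changes):
    """Greedily set the most influential pixels to 0 or 1 until the label flips.

    Returns (adversarial_pixels, changed_indices), or (None, changed_indices)
    if the label did not flip within max_changes pixel edits.
    """
    original = predict(weights, bias, pixels)
    direction = -1 if original == 1 else 1  # lower or raise the score
    adv = list(pixels)

    def best_move(i):
        # Extreme value that moves the score in the wanted direction, and
        # how far the score moves if pixel i is set to that value.
        target = 1.0 if direction * weights[i] > 0 else 0.0
        return direction * weights[i] * (target - adv[i]), target

    order = sorted(range(len(adv)), key=lambda i: best_move(i)[0], reverse=True)
    changed = []
    for i in order[:max_changes]:
        gain, target = best_move(i)
        if gain <= 0:
            break  # no remaining pixel can move the score any further
        adv[i] = target
        changed.append(i)
        if predict(weights, bias, adv) != original:
            return adv, changed
    return None, changed

# Hypothetical model: most pixels matter little, a few matter a lot.
WEIGHTS = [0.05] * 64
WEIGHTS[10], WEIGHTS[20], WEIGHTS[30] = 2.0, -1.5, 1.0
BIAS = -1.0
IMAGE = [0.5] * 64  # uniform mid-gray input

ADV_IMAGE, CHANGED = few_pixel_attack(WEIGHTS, BIAS, IMAGE, max_changes=5)
print("original label:", predict(WEIGHTS, BIAS, IMAGE))
print("pixels changed:", CHANGED)
if ADV_IMAGE is not None:
    print("label after attack:", predict(WEIGHTS, BIAS, ADV_IMAGE))
```

In this toy setup, editing two of the 64 pixels is enough to flip the label. Real attacks on deep networks are more involved, but they rest on the same observation: a small number of high-influence inputs can dominate the decision.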

The worst part is that AI-based protection turns out to be vulnerable as well: everything that can be used against any other AI-based system can also be applied against AI-based defense systems.

Wired, July 16, 2021

It was recently revealed that the Microsoft Windows Hello facial-recognition system can be bypassed with only a little hardware tampering, which should not be possible. The attack works because Hello facial recognition supports various third-party webcams, but only those that have an infrared sensor in addition to the usual RGB sensor. It turned out, however, that the system does not look at the RGB data: with one straight-on infrared image of a target’s face and one black frame, a device protected by this system can be unlocked.

“We tried to find the weakest point in facial recognition and what would be the most interesting from the attacker’s perspective, the most approachable option,” commented Omer Tsarfati, a researcher at the security firm CyberArk. “We created a full map of the Windows Hello facial-recognition flow and saw that the most convenient for an attacker would be to pretend to be the camera, because the whole system is relying on this input.”