Dark Reading, July 1, 2021

A collaboration between GitHub and OpenAI has produced an artificial intelligence system called Copilot. The system was created to offer developers code suggestions: with the new tool, developers are expected to generate blocks of code from semantic hints faster, thereby saving time.

However, the developers themselves note that any code the system suggests should be tested for errors and vulnerabilities.

“There’s a lot of public code in the world with insecure coding patterns, bugs, or references to outdated APIs or idioms,” GitHub commented. “When GitHub Copilot synthesizes code suggestions based on this data, it can also synthesize code that contains these undesirable patterns.”

Mirage, July 1, 2021

Attacks on control systems in smart cars can have truly disastrous consequences, so safety is an especially acute issue in this area. Although human error is generally believed to be a frequent cause of accidents involving autonomous vehicles, smart cars are not as invulnerable as they might seem at first glance.

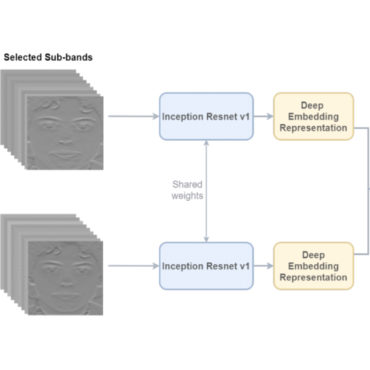

However, a recent study by researchers from Macquarie University’s Department of Computing sheds light on how drivers can detect and prevent such attacks in time.

“There are many different kinds of attacks that can occur on self-driving or autonomous vehicles, which can make them pretty unsafe,” says Yao Deng, a PhD candidate in Macquarie University’s Department of Computing.

According to the study, the smart systems in autonomous vehicles can be subject to a number of attacks, and it is extremely important to eliminate vulnerabilities in a critical part of the computer vision systems used to analyze images.

ITV, July 1, 2021

Deepfakes have long been a stumbling block where artificial intelligence meets ethics. The ethical application of smart technologies is in such urgent need of improvement that some critical situations cannot be unambiguously resolved under the law.

Recently, Helen Mort, from Sheffield, became another victim of so-called ‘deep fake porn’. The situation was complicated by the fact that, having discovered a faked image of her face used in internet pornography, she realized that existing laws gave her no way to do anything about it.

“This is a significant and growing global problem, we know that more and more deep-fakes are being made we know the technology is getting easier, we know there are more and more victims so unless we take steps to prevent this kind of abuse, to take steps against perpetrators it’s only going to grow,” commented Professor Clare McGlynn, a legal expert.