Learning about AI security and leveling up face recognition system defenses

Dark Reading, April 27, 2021

Now everyone can learn about trusted AI from Adversa’s extensive report. The paper explains why AI security deserves attention, how adversaries can attack AI, and what companies should do to defend themselves against such risks. The report draws on a review of more than 2,000 academic papers, real-world AI incidents and the most threatening attacks along with their impact on economies and businesses, as well as an overview of the steps different countries are taking toward trusted AI, government initiatives and the opinions of industry experts.

“It’s only a question of time when we see an explosion of new attacks against real-world AI systems and they will become as common as spam or ransomware,” commented Eugene Neelou, co-founder and chief technology officer of Adversa.

Raising awareness among everyone who uses AI-based applications, developing defenses and preparing for attacks should, in turn, lead to more reliable AI systems and better organizational performance.

Analytics Insight, April 28, 2021

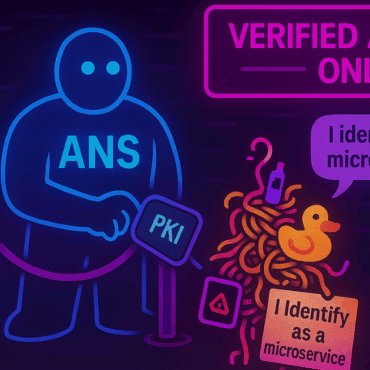

Face recognition systems (FRS) have become popular, mostly in security applications. Because FRS rely on machine learning and neural networks, they are vulnerable to a range of attacks, the most widespread being presentation attacks and digital attacks.

Presentation attacks use physical, real-world perturbations, such as glasses or a face mask, to confuse the neural network. A human would still easily recognize the person, but the AI can be fooled.

Digital attacks are also common and appear to be harder to deal with. A digital attack usually adds noise to the image, changing its pixels by 1% or less. Such changes are typically invisible to humans, who can still tell with the naked eye what the picture shows. An FRS, by contrast, may misidentify the person as some other object.
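To illustrate why such tiny changes can matter, here is a toy sketch in the spirit of the fast gradient sign method. It assumes a hypothetical linear "match" scorer over 1,000 pixels in the range [0, 1] and reads "1% or less" as a per-pixel change of at most 0.01; the names `linear_score` and `perturb`, the weights and the image are all made up for illustration, and a real FRS would be a deep network rather than a linear model.

```python
# Toy illustration of a digital (adversarial) perturbation on a linear scorer.
# All names and numbers are hypothetical; real FRS models are deep networks.

def sign(v):
    # Returns +1, -1 or 0
    return (v > 0) - (v < 0)

def linear_score(w, x, b):
    # Positive score -> "match", negative score -> "no match" (toy convention)
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def perturb(x, w, eps):
    # Fast-gradient-sign-style step: for a linear model the gradient of the score
    # with respect to the input is w, so moving each pixel by -eps * sign(w_i)
    # lowers the score as much as possible while no pixel changes by more than eps.
    # Pixels are clipped to the valid range [0, 1].
    return [min(1.0, max(0.0, xi - eps * sign(wi))) for xi, wi in zip(x, w)]

n = 1000                                             # number of pixels
w = [0.1 if i % 2 == 0 else -0.1 for i in range(n)]  # hypothetical weights
x = [0.5] * n                                        # mid-grey "image"
b = 0.5
eps = 0.01                                           # 1% of the [0, 1] pixel range

x_adv = perturb(x, w, eps)
print("clean score:      ", linear_score(w, x, b))
print("adversarial score:", linear_score(w, x_adv, b))
print("max pixel change: ", max(abs(a - c) for a, c in zip(x, x_adv)))
```

The clean score is 0.5 and the perturbed score is roughly -0.5, so the toy decision flips. Each pixel moves by only 0.01, but across 1,000 pixels the small changes add up to 0.01 times the sum of |w_i|, here 1.0, which is one intuition for why imperceptible noise can change a model's output.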

One sensible countermeasure is to include adversarial examples in FRS training (adversarial training), so that such attacks are better detected and prevented in the future.
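A minimal sketch of adversarial training follows, assuming a tiny logistic-regression classifier on made-up 2D points as a stand-in for an FRS. At every step it generates a fast-gradient-sign adversarial copy of the training example and updates the model on both the clean and the perturbed copy. The function names, the dataset and the hyperparameters (`eps`, `lr`, `epochs`) are hypothetical choices for illustration, not any particular vendor's method.

```python
import math

def sigmoid(z):
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def sign(v):
    return (v > 0) - (v < 0)

def predict(w, b, x):
    # Probability of class 1 under the logistic model
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def fgsm(w, b, x, y, eps):
    # Gradient of the logistic loss w.r.t. the input is (p - y) * w;
    # step each feature by eps in the sign of that gradient to increase the loss.
    p = predict(w, b, x)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]

def adversarial_train(data, eps=0.1, lr=0.1, epochs=200):
    w = [0.0] * len(data[0][0])
    b = 0.0
    for _ in range(epochs):
        for x, y in data:
            x_adv = fgsm(w, b, x, y, eps)  # attack the current model
            # Take one half-size gradient step on the clean copy
            # and one on the adversarial copy.
            for xs in (x, x_adv):
                err = y - predict(w, b, xs)
                w = [wi + 0.5 * lr * err * xi for wi, xi in zip(w, xs)]
                b += 0.5 * lr * err
    return w, b

# Hypothetical toy data: label 1 = "match", label 0 = "no match"
data = [((1, 1), 1), ((2, 1), 1), ((1, 2), 1),
        ((-1, -1), 0), ((-2, -1), 0), ((-1, -2), 0)]
w, b = adversarial_train(data)
for x, y in data:
    x_adv = fgsm(w, b, x, y, 0.1)
    print(x, y, round(predict(w, b, x), 3), round(predict(w, b, x_adv), 3))
```

Training on the perturbed copies pushes the model to keep a margin larger than the attack budget `eps`, so small adversarial shifts no longer change its decisions on these points. For a real FRS the same loop would use a deep network and a stronger attack generator, at a noticeably higher training cost.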

The Verge, April 27, 2021

If you thought deepfakes only apply to people, here’s a surprise: scientists have learned how to make geographical deepfakes, which can be used in several ways and, as you might guess, not always for legitimate purposes. Geographical deepfakes could be used to fabricate news of wildfires or floods, or to mislead geography researchers.

Worst of all, geographical deepfakes could be used against military planners, showing non-existent objects such as lakes or bridges to mislead military forces. Conversely, real objects could be hidden.

Bo Zhao, an assistant professor of geography at the University of Washington, and his colleagues have published a paper on “deep fake geography”, the objective of which, according to Zhao, “is to demystify the function of absolute reliability of satellite images and to raise public awareness of the potential influence of deep fake geography.”