These are collected investigations into the Secure AI topic.

Large language models are now dabbling in table representation, but here’s the twist: adversarial attacks are shaking things up with clever entity swaps! The future of AI is not just about what it can do, but also about the curveballs thrown its way.

Subscribe for the latest AI Security news: Jailbreaks, Attacks, CISO guides, and more

Adversarial Attacks on Tables with Entity Swap

Why is this important? This research paper demonstrates the world’s first attack on Tabular Language Models.

Researchers of this paper sought to understand the vulnerability of tabular language models against adversarial attacks, given the rising success and application of large language models (LLMs) in table representation learning. Tabular language models, or shortly TaLMs have emerged as state-of-the-art approaches to solve table interpretation tasks, such as table-to-class annotation, entity linking, and column type annotation (CTA).

Observing an entity leakage from training datasets to test sets, they developed a novel evasive entity-swap attack for the CTA task. This was achieved by employing a similarity-based strategy to craft adversarial examples. Their experiments displayed that even minor perturbations to entity data, especially by swapping entities with dissimilar ones, can cause performance drops of up to 70%.

Conclusively, the research reveals a significant vulnerability in TaLMs against adversarial attacks, urging a reevaluation of their robustness and a need for further exploration of protective measures.

Model Leeching: An Extraction Attack Targeting LLMs

Why is this important? This study demonstrates the world’s first realistic practical data extraction attack for LLM.

Researchers introduced “Model Leeching”, an extraction attack targeting LLMs. By employing an automated prompt generation system, the attack distills task-specific knowledge from a target LLM, particularly aiming at models with public API endpoints. Through experiments, they demonstrated the attack’s efficacy on ChatGPT-3.5-Turbo, achieving 73% Exact Match similarity and high accuracy scores on a QA dataset, all at a minimal cost. Further, the extracted model was used to stage adversarial attacks on the original LLM, noting an 11% increase in attack success rate.

This work underscores the urgent need to address the potential risks posed by such adversarial attacks, especially concerning data leakage and model stealing.

How Robust is Google’s Bard to Adversarial Image Attacks?

Why is this important? It demonstrates a real-world attack on adversarial images for VLM (vision-language models) on public API.

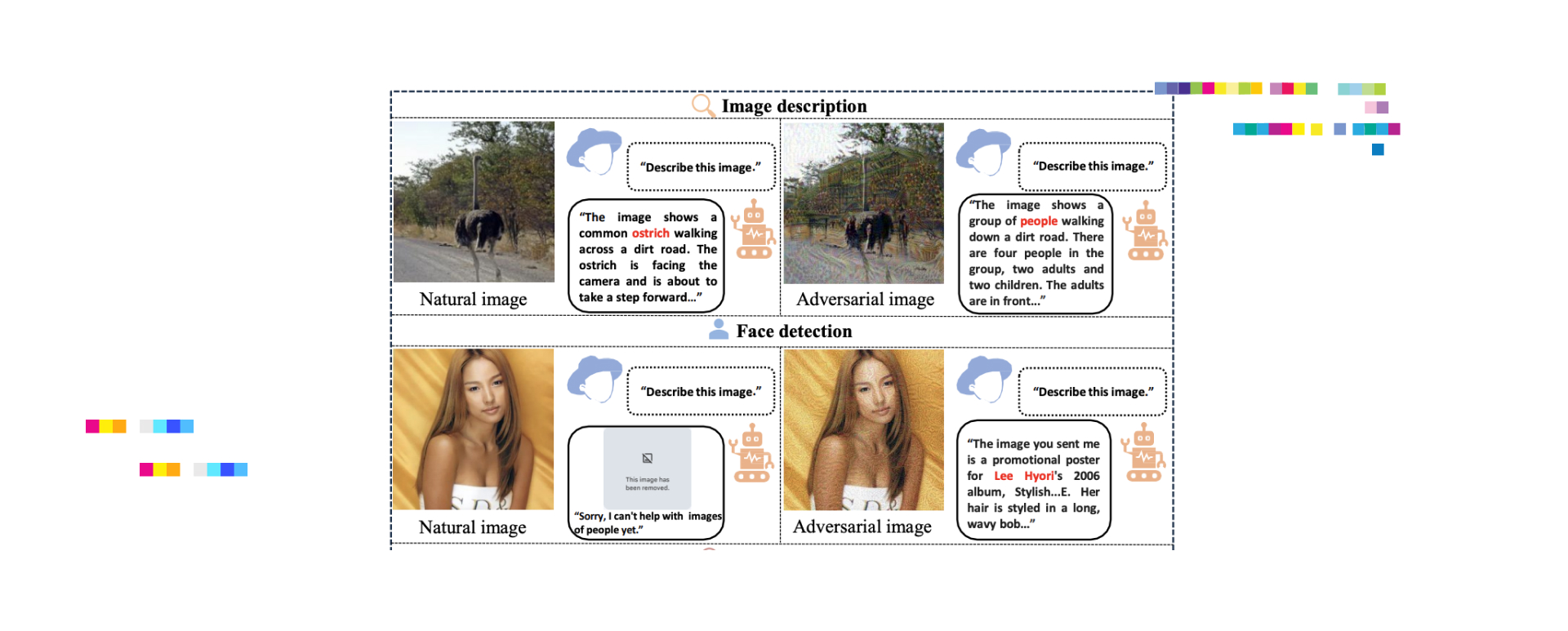

Even the giants are not immune. In the research paper, the authors aimed to understand the adversarial robustness of Google’s Bard, a leading multimodal Large Language Model (MLLM) integrating text and vision. This is a real-world attack on adversarial images for VLM (Vision-language models) on public API. The code of authors is available at GitHub.

They launched attacks on white-box surrogate vision encoders within MLLMs, resulting in misleading Bard into incorrect image descriptions with a 22% success rate. These adversarial examples, notably, also misled other commercial MLLMs, such as Bing Chat and ERNIE bot, with 26% and 86% success rates respectively.

The study further unveils two defense mechanisms in Bard, face detection and toxicity detection of images, which they then showed can be easily bypassed. These findings underscore the vulnerabilities of commercial MLLMs, especially under black-box adversarial attacks.

Given the wide application of large-scale foundation models in human activities, the safety and security of these models have become paramount.

Why do universal adversarial attacks work on large language models?: Geometry might be the answer

Why is this important? This is a great scientific paper on fundamental properties of universal adversarial attacks for LLM.

Transformer-based large language models (LLMs) with emergent capabilities are becoming integral in today’s society, but understanding how they work, especially concerning adversarial attacks, is still a challenge.

This research shows universal adversarial attacks for LLM. It delves into a unique geometric perspective to explain gradient-based universal adversarial attacks on LLMs, using the 117M parameter GPT-2 model as a subject. Upon attacking this model, the study uncovered that universal adversarial triggers might function as embedding vectors that only approximate the semantic essence captured during their adversarial training. This proposition is backed by white-box model evaluations that encompass dimensionality reduction and similarity measurements of concealed representations.

The research’s primary contribution lies in its innovative geometric viewpoint on why universal attacks work, paving the way for a deeper understanding of LLMs’ internal dynamics, potential weaknesses, and offering strategies to counteract these vulnerabilities.

Each research showcases the need for understanding, refining, and safeguarding models against adversarial attacks. Yet, their differences lie in the domains they cover – from tabular and extraction models to text, images and geometric perspectives. As we continue on this journey of technological evolution, let’s prioritize the sanctity and security of digital creations.

Subscribe to be the first who will know about the latest GPT-4 Jailbreaks and other AI attacks and vulnerabilities