Towards Trusted AI Week 24 – AI Red Teaming discussed at RSA

Knowledge about artificial intelligence and its security needs to be constantly improved How Do We Attack AI? Learn More at Our RSA Panel! Cloud Security Podcast by Google, June 6, ...

Trusted AI Blog + Adversarial ML | admin | June 15, 2022

Attacking machine learning models in a research setting is a necessary step toward understanding and fixing their potential vulnerabilities. Here is a selection of the most interesting studies for June 2022. Enjoy!

Deep Neural Networks (DNNs) are a type of machine learning model that mimics the way the brain learns. They are used in applications across many fields, including object recognition, autonomous systems, and video, image, and signal processing, where they obtain great results. However, experiments have shown that DNNs are prone to adversarial attacks, which can compromise security and undermine predictions.

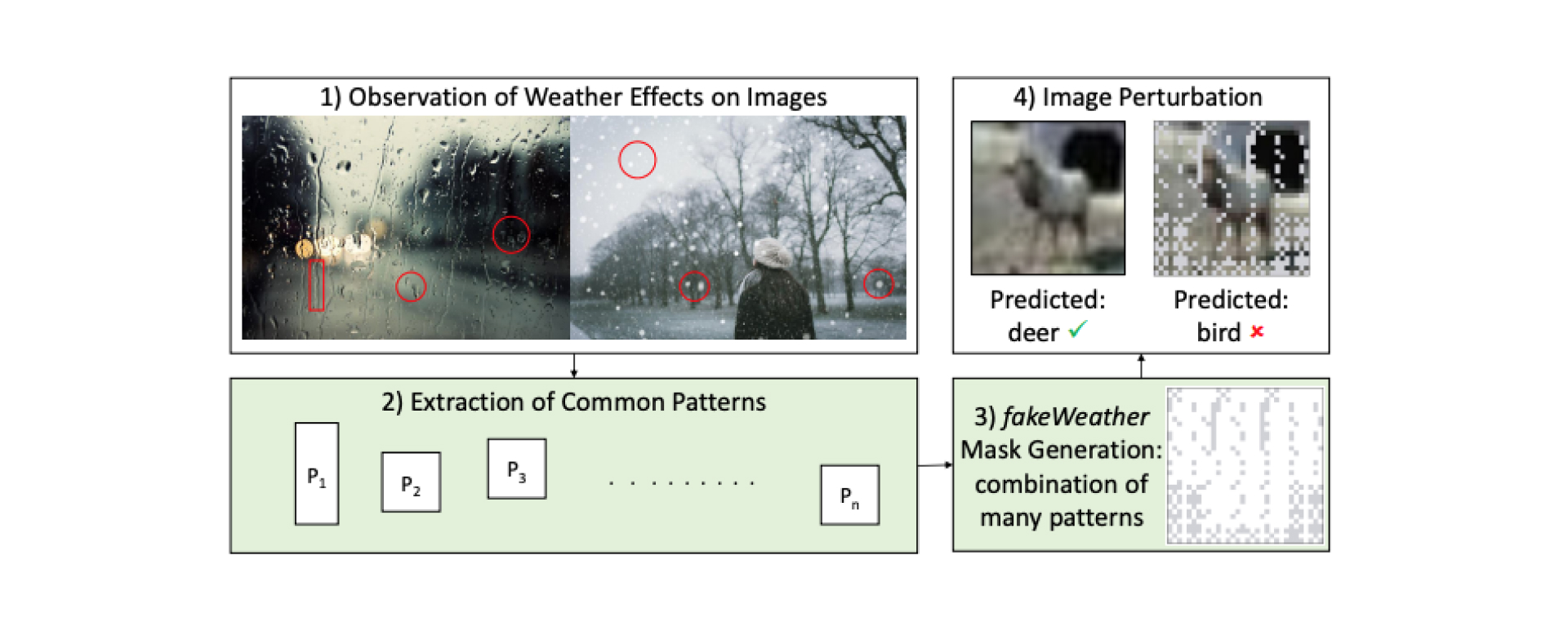

In this paper, researchers Alberto Marchisio, Giovanni Caramia, Maurizio Martina, and Muhammad Shafique present FakeWeather adversarial attacks.

FakeWeather attacks emulate natural weather conditions to mislead DNNs. The researchers studied a series of images to observe how atmospheric perturbations appear on camera lenses, then modelled a set of patterns to create masks that fake the effects of rain, snow, and hail.
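The idea of a weather mask can be sketched as follows. This is a minimal illustration, not the authors' implementation: the streak pattern, the blending rule, and the names `make_rain_mask` and `apply_mask` are all assumptions made for the example, and the "camera frame" is a flat gray grid.

```python
import random

def make_rain_mask(height, width, n_streaks=20, streak_len=4, seed=0):
    """Return a height x width mask; 1.0 marks a pixel covered by a fake raindrop."""
    rng = random.Random(seed)
    mask = [[0.0] * width for _ in range(height)]
    for _ in range(n_streaks):
        row = rng.randrange(height)
        col = rng.randrange(width)
        # Draw a short vertical streak, clipped at the bottom edge.
        for dy in range(streak_len):
            if row + dy < height:
                mask[row + dy][col] = 1.0
    return mask

def apply_mask(image, mask, drop_value=255, alpha=0.8):
    """Blend drop_value into a grayscale image wherever the mask is set."""
    out = []
    for img_row, mask_row in zip(image, mask):
        out.append([
            round((1 - alpha * m) * px + alpha * m * drop_value)
            for px, m in zip(img_row, mask_row)
        ])
    return out

image = [[100] * 32 for _ in range(32)]  # flat gray stand-in for a camera frame
mask = make_rain_mask(32, 32)
perturbed = apply_mask(image, mask)
changed = sum(p != q for r1, r2 in zip(image, perturbed) for p, q in zip(r1, r2))
print(f"{changed} of {32 * 32} pixels altered")
```

Only the masked pixels change, so the perturbation is clearly visible but confined to a pattern that a human observer could mistake for rain on the lens.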

The perturbations remain visible, yet they go unnoticed because observers associate them with natural events, which is a serious concern for autonomous cars. The attacks were tested on multiple Convolutional Neural Network and Capsule Network models, and the researchers reported noticeable accuracy drops in the presence of such adversarial perturbations.

This study introduces a new security risk for DNNs. Read the research by following the link in the title.

Deep Learning (DL) models are used in applications such as autonomous driving and surveillance, and they help with many tasks, including image classification, speech recognition, and playing games. On these tasks, DL models outperform traditional machine learning techniques. Despite this success, they are criticized for vulnerabilities that lead to adversarial and backdoor attacks.

A backdoor attack injects a backdoor trigger into the training data so that the model gives incorrect predictions whenever the trigger appears. As a rule, backdoor attacks focus on image classification. This research, however, is dedicated to backdoor attacks on object detection, which is of equal importance.
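A minimal sketch of the general poisoning idea, in its simpler image-classification form, might look like this. It is not one of the attacks from the paper: the corner-square trigger, the 10% poisoning rate, and the helper names `stamp_trigger` and `poison_dataset` are assumptions chosen for illustration.

```python
import random

def stamp_trigger(image, size=3, value=255):
    """Return a copy of a grayscale image with a bright square in the top-left corner."""
    stamped = [row[:] for row in image]
    for r in range(size):
        for c in range(size):
            stamped[r][c] = value
    return stamped

def poison_dataset(samples, target_label, rate=0.1, seed=0):
    """Stamp the trigger on a random fraction of samples and relabel them."""
    rng = random.Random(seed)
    n_poison = int(len(samples) * rate)
    poisoned_idx = set(rng.sample(range(len(samples)), n_poison))
    result = []
    for i, (image, label) in enumerate(samples):
        if i in poisoned_idx:
            result.append((stamp_trigger(image), target_label))
        else:
            result.append((image, label))
    return result, poisoned_idx

# Toy dataset: 50 blank 8x8 images with labels 0, 1, 2.
clean = [([[0] * 8 for _ in range(8)], i % 3) for i in range(50)]
poisoned, idx = poison_dataset(clean, target_label=2, rate=0.1)
print(f"poisoned {len(idx)} of {len(clean)} samples")
```

A model trained on `poisoned` can learn to associate the corner square with the target label while behaving normally on untouched inputs.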

The authors of this research paper (Shih-Han Chan, Yinpeng Dong, Jun Zhu, Xiaolu Zhang, and Jun Zhou) develop metrics and propose four kinds of backdoor attacks for the object detection task.

To defend against these attacks, the researchers propose Detector Cleanse, an entropy-based run-time detection framework that identifies poisoned testing samples for any deployed object detector.
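The entropy-based part of such a defense can be illustrated with a small sketch. This is not the Detector Cleanse algorithm itself, only the general idea of flagging predictions whose entropy falls outside a band seen on clean data; the band limits `LOW` and `HIGH` and the function names are hypothetical.

```python
import math

def shannon_entropy(probs):
    """Entropy in bits of a discrete probability distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def is_suspicious(probs, low, high):
    """Flag a prediction whose entropy falls outside the [low, high] band."""
    h = shannon_entropy(probs)
    return h < low or h > high

# Hypothetical band; in practice it would be calibrated on known-clean samples.
LOW, HIGH = 0.3, 1.5

typical = [0.7, 0.2, 0.1]                # moderately confident class scores
overconfident = [0.999, 0.0005, 0.0005]  # near-zero entropy

print(is_suspicious(typical, LOW, HIGH))
print(is_suspicious(overconfident, LOW, HIGH))
```

The intuition is that a backdoor trigger tends to force an unusually stable prediction, so its entropy can sit outside the range observed for clean inputs.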

Read the research by following the link in the title.

Two aspects of security are critical to the adoption of deep learning-based computer vision models: integrity, which ensures the accuracy and trustworthiness of predictions, and availability, which concerns prediction latency.

Most of the attacks shown so far aim to compromise a model's integrity. Attacks that compromise availability have gone largely unexplored, which makes denial-of-service attacks a new category for object detection.

In this paper, researchers Avishag Shapira, Alon Zolfi, Luca Demetrio, Battista Biggio, and Asaf Shabtai propose NMS-Sponge, a new approach that increases the decision latency of YOLO, a state-of-the-art object detector. It compromises availability with the help of a universal adversarial perturbation (UAP). The UAP increases the processing time of individual frames by adding spurious objects while preserving the detection of the original objects.
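Why extra objects cost time can be seen in a toy version of non-maximum suppression (NMS), the post-processing step the attack's name refers to. The sketch below is a plain greedy NMS written for illustration, not YOLO's implementation and not the authors' attack; the grid of extra boxes is a hypothetical stand-in for candidates a perturbation might induce.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS. Returns kept indices and the number of IoU computations."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    kept, comparisons = [], 0
    while order:
        best = order.pop(0)
        kept.append(best)
        survivors = []
        for i in order:
            comparisons += 1
            if iou(boxes[best], boxes[i]) <= iou_threshold:
                survivors.append(i)
        order = survivors
    return kept, comparisons

real = [(0, 0, 10, 10)]
# Hypothetical extra candidates: a 10x10 grid of small, non-overlapping boxes.
extra = [(20 + 5 * i, 20 + 5 * j, 24 + 5 * i, 24 + 5 * j)
         for i in range(10) for j in range(10)]

_, clean_cost = nms(real, [0.9])
kept, attacked_cost = nms(real + extra, [0.9] + [0.5] * len(extra))
print(clean_cost, attacked_cost, 0 in kept)
```

Because none of the extra boxes overlap, none are suppressed, and the number of pairwise comparisons grows quadratically with the candidate count while the original box (index 0) is still kept.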

Read the research by following the link in the title.

Safety and robustness have become pressing topics as deep reinforcement learning is applied to robot control, particularly for legged robots, which can fall.

Adversarial attacks are among the disturbances that can affect legged robots. Finding a robot's vulnerability to such attacks can help detect its risk of falling.

In this study, researchers Takuto Otomo, Hiroshi Kera, and Kazuhiko Kawamoto show that adversarial perturbations to the torque control signals of the actuators can cause walking instability in robots.

They build black-box adversarial attacks that can be applied to legged robots regardless of the architecture and algorithms of deep reinforcement learning. Three search methods are used: random search, differential evolution, and numerical gradient descent methods.
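The simplest of these search methods, random search, can be sketched as follows. This is an illustrative toy rather than the authors' setup: `toy_walking_reward` and its `SENSITIVITY` vector are hypothetical stand-ins for a simulator rollout, and the perturbation budget `epsilon` is an assumed bound on each joint's torque offset.

```python
import random

def random_search_attack(reward_fn, dim, epsilon, n_trials=200, seed=0):
    """Find a perturbation in [-epsilon, epsilon]^dim that minimizes reward_fn.

    Only reward values are queried, so the policy stays a black box.
    """
    rng = random.Random(seed)
    best_delta = [0.0] * dim
    best_reward = reward_fn(best_delta)
    for _ in range(n_trials):
        delta = [rng.uniform(-epsilon, epsilon) for _ in range(dim)]
        reward = reward_fn(delta)
        if reward < best_reward:
            best_delta, best_reward = delta, reward
    return best_delta, best_reward

# Hypothetical stand-in for a simulator rollout: reward drops as the torque
# perturbation grows along a direction the attacker does not know.
SENSITIVITY = [0.5, -1.0, 0.8, -0.3, 1.2, -0.7]

def toy_walking_reward(delta):
    return 100.0 - 50.0 * sum(s * d for s, d in zip(SENSITIVITY, delta))

baseline = toy_walking_reward([0.0] * 6)
delta, attacked = random_search_attack(toy_walking_reward, dim=6, epsilon=0.1)
print(f"baseline {baseline:.1f}, under attack {attacked:.1f}")
```

The attacker never inspects the policy or its gradients; it only samples bounded perturbations and keeps the one that hurts the reward most, which is why the approach is independent of the learning algorithm.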

Consequently, the joint attacks can be used for proactive diagnosis of robot walking instability. Read the research by following the link in the title.

Advances in DL-based language models are vital in the financial context. An increasing number of investors and machine learning models rely on social media such as Twitter and Reddit to get real-time information and predict stock price movements. Text-based models are known to be prone to adversarial attacks, but the same vulnerability in stock prediction models is underexplored.

Researchers Yong Xie, Dakuo Wang, Pin-Yu Chen, Jinjun Xiong, Sijia Liu, and Sanmi Koyejo explore different adversarial attack configurations to trick three stock prediction models. They frame adversarial generation as a combinatorial optimization problem with semantic and budget constraints.

The attack only requires adding a perturbed but semantically similar tweet. The results demonstrate that the proposed method can achieve consistent success rates and cause significant monetary loss in a trading simulation.
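A budget-constrained word substitution search can be sketched with a simple greedy loop. This is a toy illustration, not the optimization method from the paper: `toy_score` is a hypothetical stand-in for a stock prediction model, the `SYNONYMS` table stands in for the semantic-similarity constraint, and `budget` caps the number of words that may change.

```python
def toy_score(words):
    """Hypothetical stand-in for a stock model: higher means 'price goes up'."""
    weights = {"surge": 2.0, "gain": 1.5, "rise": 0.5,
               "climb": 0.4, "profit": 1.0, "earnings": 0.2}
    return sum(weights.get(w, 0.0) for w in words)

# Hypothetical synonym table standing in for the semantic constraint.
SYNONYMS = {"surge": ["rise", "climb"], "gain": ["rise"], "profit": ["earnings"]}

def greedy_attack(words, score_fn, synonyms, budget):
    """Greedily apply up to `budget` synonym swaps that lower score_fn the most."""
    words = list(words)
    replaced = set()
    for _ in range(budget):
        best = None  # (new_score, position, substitute)
        current = score_fn(words)
        for pos, word in enumerate(words):
            if pos in replaced:
                continue
            for sub in synonyms.get(word, []):
                candidate = words[:pos] + [sub] + words[pos + 1:]
                s = score_fn(candidate)
                if s < current and (best is None or s < best[0]):
                    best = (s, pos, sub)
        if best is None:
            break
        _, pos, sub = best
        words[pos] = sub
        replaced.add(pos)
    return words

tweet = "shares surge as profit and gain beat forecasts".split()
adversarial = greedy_attack(tweet, toy_score, SYNONYMS, budget=2)
print(" ".join(adversarial))
```

With a budget of two, the perturbed copy differs from the original in at most two words, keeping it close in meaning while shifting the toy model's score.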


Written by: admin


Adversa AI, Trustworthy AI Research & Advisory