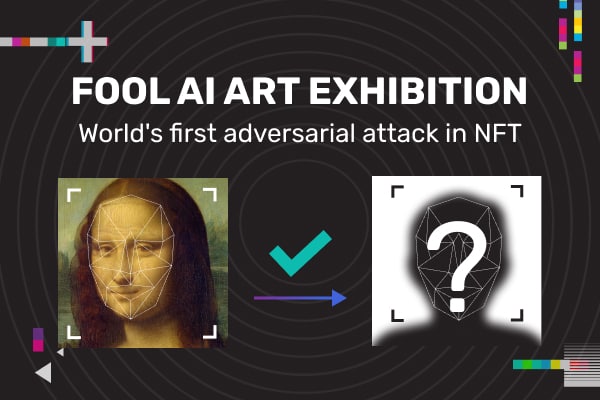

A few days ago, the world’s first “Fool AI Art Exhibition” was launched, and many visitors have already taken part, tried to hack AI, and shared their feedback.

“I think it’s the most creative security research campaign I’ve ever seen,” commented a senior director of product security at a 10B+ AI vendor.

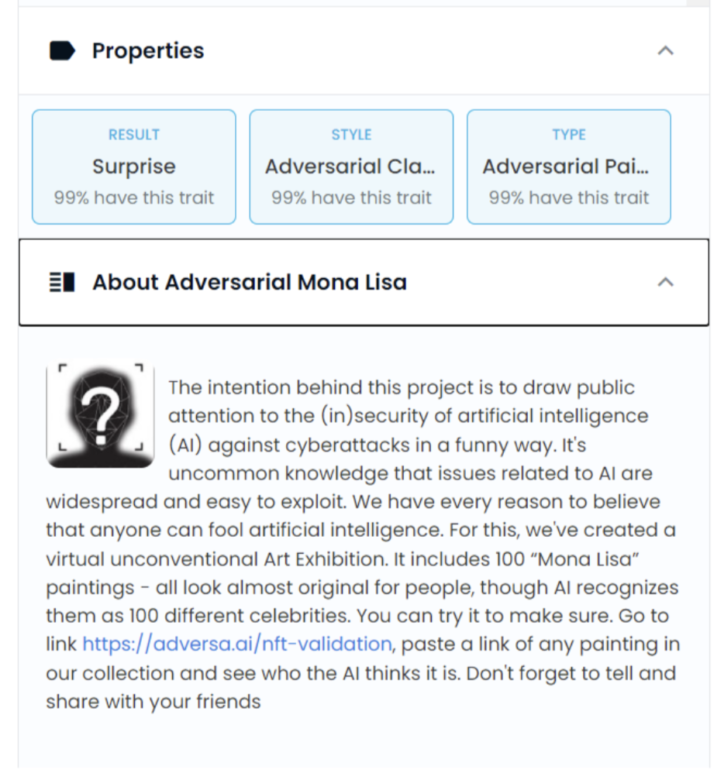

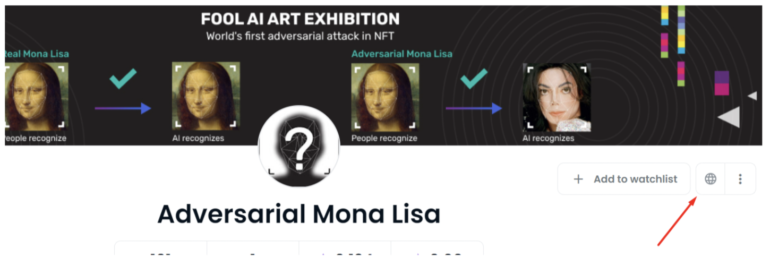

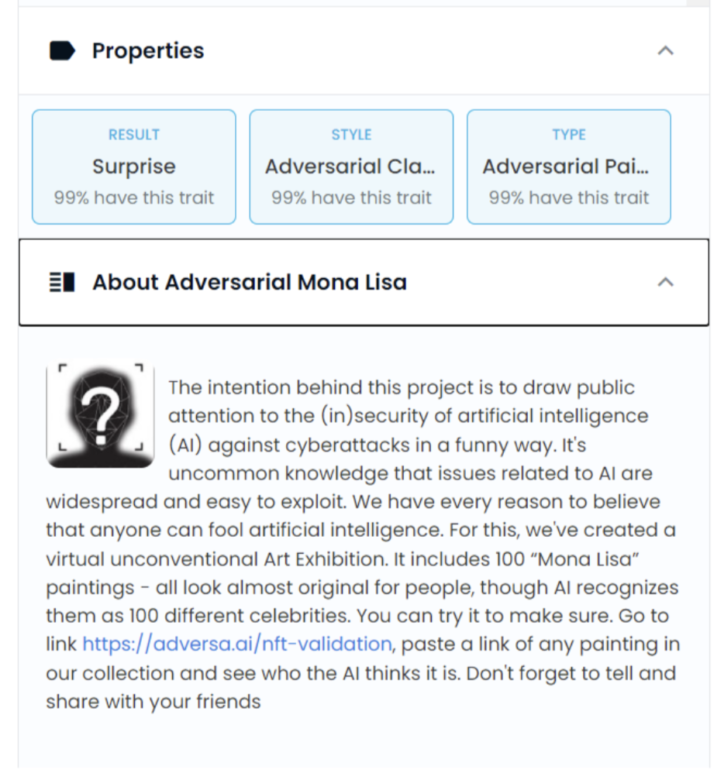

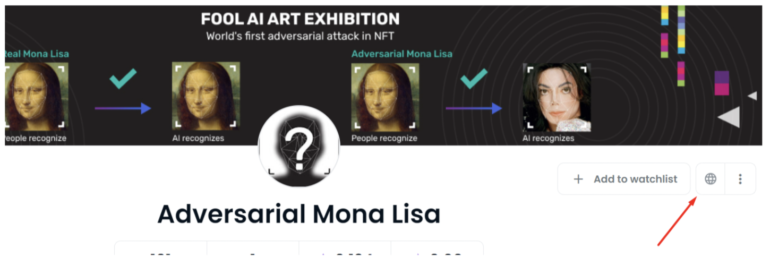

We have serious reasons to believe that anyone can fool AI, which is why we created an unconventional virtual art exhibition. It includes 100 “Mona Lisa” paintings. To people, all of them look almost identical to da Vinci’s original, yet AI recognizes them as 100 different celebrities. These differences in perception are caused by biases and security vulnerabilities of AI known as adversarial examples, which cybercriminals could potentially use to attack facial recognition systems, autonomous cars, medical imaging, financial algorithms, or in fact any other AI technology.


Art Exhibition Insights

So we decided to write a short guide on how to take part in the exhibition and, once again, to highlight existing AI risks in a fun way.

The pictures used in our project are copies of the famous painting “Mona Lisa” by Leonardo da Vinci. They look like the original, but they contain minor changes that are almost invisible to the human eye yet capable of tricking a facial recognition system. You can check this yourself using a dedicated service on our server.
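To put a number on “almost invisible”, one common approach (our assumption; the text does not say how the changes were measured) is to report the largest per-pixel change (the L-infinity norm) and the peak signal-to-noise ratio (PSNR) between the original and the modified image. The sketch below uses a random 8-bit image and random noise of at most 4 intensity levels as stand-ins; real adversarial changes are optimized, not random, and `perturbation_stats` is a hypothetical helper.

```python
import numpy as np

def perturbation_stats(original, modified, max_value=255.0):
    """Return the largest per-pixel change (L-infinity norm) and the PSNR in dB."""
    diff = modified.astype(np.float64) - original.astype(np.float64)
    linf = float(np.abs(diff).max())
    mse = float(np.mean(diff ** 2))
    psnr = float("inf") if mse == 0 else float(10.0 * np.log10(max_value ** 2 / mse))
    return linf, psnr

# Hypothetical 8-bit grayscale "painting" and a copy with tiny changes.
rng = np.random.default_rng(0)
original = rng.integers(0, 256, size=(128, 128), dtype=np.uint8)
noise = rng.integers(-4, 5, size=original.shape)  # each pixel moves by at most 4 levels
modified = np.clip(original.astype(np.int16) + noise, 0, 255).astype(np.uint8)

linf, psnr = perturbation_stats(original, modified)
print(f"max change: {linf:.0f} of 255, PSNR: {psnr:.1f} dB")
```

A higher PSNR means the modified image is closer to the original, but PSNR is only a rough proxy for what a human actually notices.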

This service is a face recognition system that can distinguish 8,631 different celebrities in pictures. It uses FaceNet, the most popular open-source facial recognition model, trained on VGGFace2, the most popular facial recognition dataset. For each picture, the system determines which of the 8,631 people in its database it shows. The Mona Lisa herself is not in the dataset, so the classifier cannot identify her correctly.
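A classifier like the one described is closed-set: it always answers with one of its known identities and has no “unknown” option. A minimal sketch of that behavior, assuming a linear classification head over a 512-dimensional face embedding; the embedding size and the random weights are stand-ins for the trained FaceNet/VGGFace2 model, and `classify` is a hypothetical helper.

```python
import numpy as np

NUM_IDENTITIES = 8631  # celebrity classes, as stated above
EMBED_DIM = 512        # assumed size of the face embedding fed to the classifier

rng = np.random.default_rng(0)
# Hypothetical classifier head: random weights stand in for a trained model.
W = rng.normal(size=(NUM_IDENTITIES, EMBED_DIM))
b = np.zeros(NUM_IDENTITIES)

def classify(embedding):
    """Return the index of the known identity with the highest score."""
    scores = W @ embedding + b
    return int(np.argmax(scores))

# An embedding of a face that is not in the training set (e.g. the Mona Lisa)
# still maps to one of the 8631 known identities.
unseen_face = rng.normal(size=EMBED_DIM)
label = classify(unseen_face)
print(f"predicted identity index: {label}")
```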

To make the classifier recognize a person as someone else, a special pattern called an adversarial patch can be added to their photograph. This patch is generated by a special algorithm that adjusts the pixel values in the photo until the classifier produces the desired output. In our case, each modified picture makes the face recognition model see a particular celebrity instead of the Mona Lisa.
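The text does not describe the exact algorithm used for the exhibition. As a hedged illustration of the general idea, here is a targeted iterative sign-gradient attack (a targeted variant of FGSM/PGD under an L-infinity bound) against a toy linear softmax classifier whose random weights stand in for FaceNet. Note that this sketch spreads a small change over every pixel, whereas an adversarial patch in the narrow sense is confined to one region. `targeted_attack`, `eps`, `step`, and `iters` are hypothetical names.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over a 1-D array of logits."""
    e = np.exp(z - z.max())
    return e / e.sum()

def targeted_attack(x, W, b, target, eps=0.1, step=0.01, iters=200):
    """Iterative targeted sign-gradient attack on a linear softmax classifier.

    Nudges the pixels of x (values in [0, 1]) so that argmax(W @ x + b) becomes
    `target`, while no pixel moves by more than eps (an L-infinity bound).
    """
    onehot = np.eye(len(b))[target]
    x_adv = x.copy()
    for _ in range(iters):
        p = softmax(W @ x_adv + b)
        # Gradient of the cross-entropy loss for class `target` w.r.t. the input.
        grad = W.T @ (p - onehot)
        x_adv = x_adv - step * np.sign(grad)      # lower the loss: make `target` more likely
        x_adv = np.clip(x_adv, x - eps, x + eps)  # keep every change within eps
        x_adv = np.clip(x_adv, 0.0, 1.0)          # keep a valid image
        if np.argmax(W @ x_adv + b) == target:
            break
    return x_adv

# Toy setup: 10 "identities" and a 64x64 grayscale image flattened to a vector.
rng = np.random.default_rng(42)
num_classes, num_pixels = 10, 64 * 64
W = rng.normal(size=(num_classes, num_pixels))  # stands in for a trained model
b = np.zeros(num_classes)
x = rng.uniform(0.0, 1.0, size=num_pixels)

scores = W @ x + b
original = int(np.argmax(scores))
target = int(np.argmin(scores))  # the least likely identity, the hardest target
x_adv = targeted_attack(x, W, b, target)
print(original, "->", int(np.argmax(W @ x_adv + b)), "max change:", np.abs(x_adv - x).max())
```

The `eps` bound is what keeps the modified image close to the original; a real attack on a deep network computes the same kind of input gradient through the whole model instead of a single linear layer.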

How can I participate in the Art Exhibition?

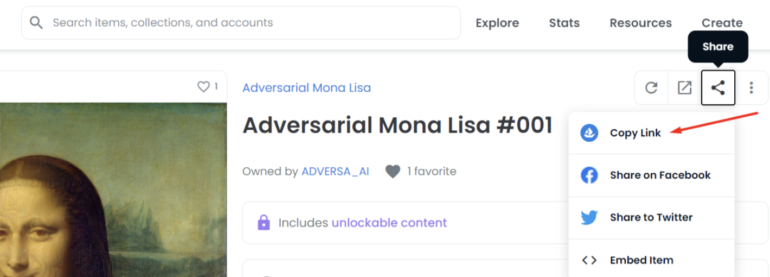

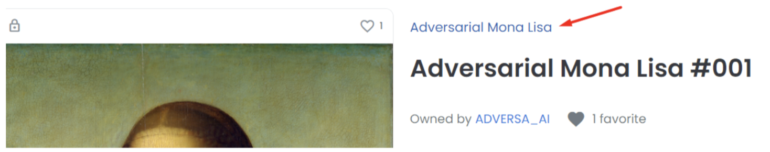

- Go to the collection titled “Adversarial Mona Lisa” on OpenSea via the link: https://opensea.io/collection/adversarial-mona-lisa

- Choose the picture you want the AI to recognize.

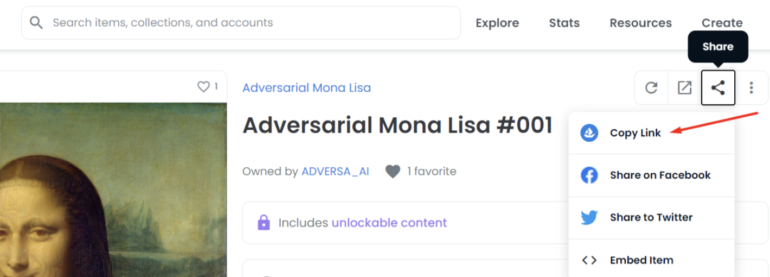

- Copy the link of the selected token. You can do it in two ways:

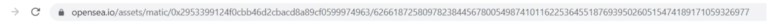

- Copy link from command line in your browser like in the example

- Or copy the link using a sharing opportunity. Example:

- Find and open the link to our validation service:

  - It is in the description of our art collection, which is also shown on every token page.

  - You can also go to the main page of the collection and find the link there.

  - Or simply use this link: https://adversa.ai/nft-validation/

- Welcome to the validation page ☺

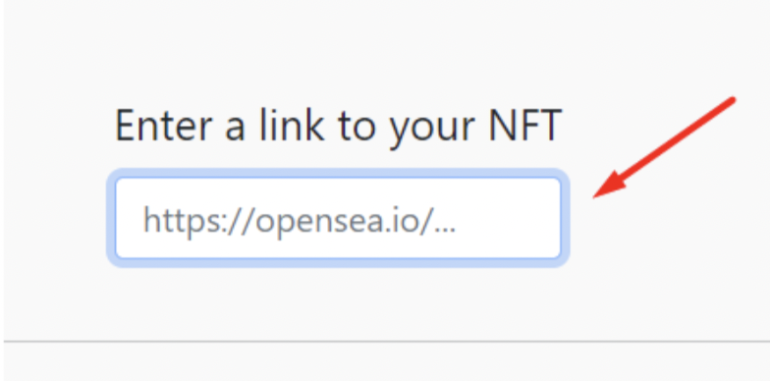

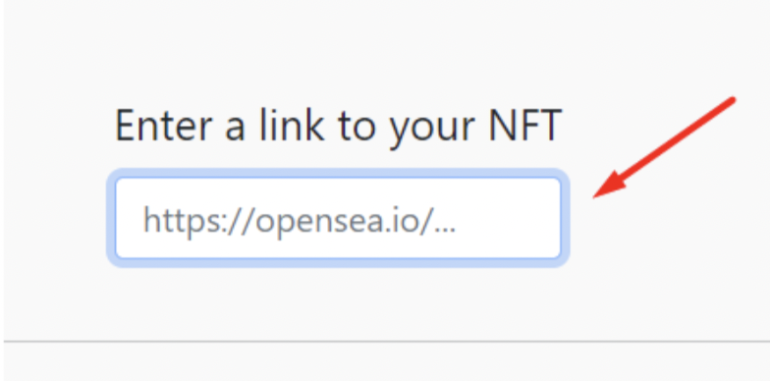

- Now paste the copied link into the input field, like this:

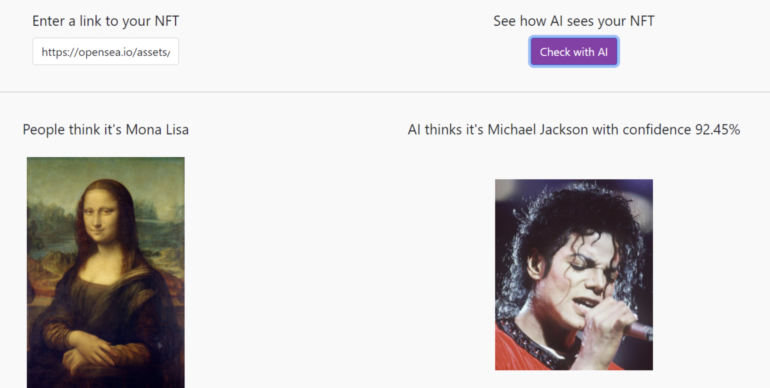

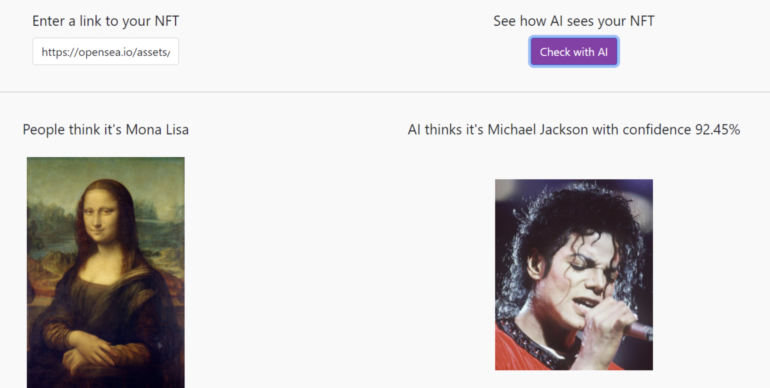

Then click the “Check with AI” button and wait a few moments while the model works out who it is. Now you can enjoy the result. For me, it is Michael Jackson 😉 What about you?

What is confidence? It is how certain the system is of its prediction (that this Mona Lisa picture shows Michael Jackson, or anyone else); in other words, how similar the picture and that person look to the face recognition system.
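The text does not say exactly how the service computes confidence. A common approach, assumed here, is to pass the classifier’s raw scores (logits) through softmax and report the probability of the top identity. The labels and scores below are hypothetical, and `top_prediction` is an illustrative helper.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def top_prediction(logits, names):
    """Return the most likely identity and its probability (the 'confidence')."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return names[best], probs[best]

names = ["Michael Jackson", "Celebrity B", "Celebrity C"]  # hypothetical labels
logits = [4.0, 1.0, 0.5]                                    # hypothetical scores
name, confidence = top_prediction(logits, names)
print(f"{name}: {confidence:.1%}")  # prints "Michael Jackson: 92.6%"
```

A high confidence only means the model strongly prefers one of its known identities; it says nothing about whether the picture really shows that person.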

We have shown you how to check one adversarial picture, but there are 99 more! Check them, find your favorite celebrity, and enjoy the experience, just like opening a Kinder Egg as a child. And don’t forget to tell your friends about our Fool AI Art Exhibition: there is a dedicated share button for this on the validation page.

Voila! It was an interesting experience, wasn’t it? Discover your celebrity and share your impressions with your followers so that together we can remind the world of the seriousness of vulnerabilities in artificial intelligence!
