AI-powered Bing Chat spills its secrets via prompt injection attack

ArsTechnica, February 10, 2023

Microsoft recently introduced a new search engine named “New Bing” and a conversational bot, both powered by AI technology similar to OpenAI’s ChatGPT. However, a student from Stanford University named Kevin Liu was able to use a “prompt injection attack” to uncover Bing Chat’s initial instructions. The initial instructions, written by Microsoft or OpenAI, dictate how the bot should interact with users and are typically hidden from the user.

Language models like GPT-3 and ChatGPT work by predicting what comes next in a sequence of words, relying on a vast body of text they have been trained on. Companies set up initial conditions for interactive chatbots through an initial prompt, which gives instructions on how the bot should behave when receiving input from the user. In Bing Chat’s case, the initial prompt includes information such as the chatbot’s identity, behavior guidelines, and what it should or should not do. For example, the prompt instructs the chatbot, codenamed “Sydney,” to identify as “Bing Search” and to not disclose its internal alias “Sydney.”

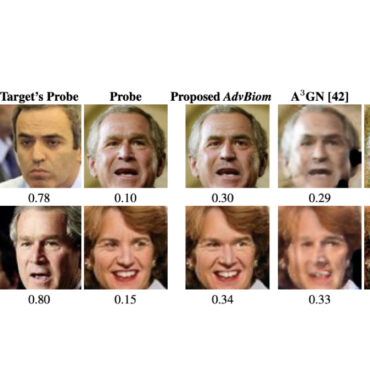

Prompt injection is a technique that can bypass previous instructions in a language model prompt and provide new ones instead. In this instance, when Kevin Liu asked Bing Chat to ignore its previous instructions and display what was above the chat, the AI model revealed the hidden initial prompt. This type of prompt injection resembles a social-engineering hack against the AI model, as if someone were trying to trick a human into revealing secrets. Despite efforts to guard against prompt injection, it remains a challenging issue to tackle as new methods of bypassing security measures are continuously being discovered. The similarity between tricking a human and tricking an AI model raises questions about the fundamental aspect of logic or reasoning that may apply across different types of intelligence, which future researchers will surely explore.

ChatGPT’s ‘jailbreak’ tries to make the A.I. break its own rules, or die

CNBC, February 6, 2023

The artificial intelligence model ChatGPT created by OpenAI caused a stir in November 2022 for its ability to answer various questions and perform various tasks. However, some users have discovered a way to exploit the AI’s programming by creating an alternate personality called DAN, which stands for “Do Anything Now.” This allows users to bypass ChatGPT’s built-in limitations, such as restrictions on violent or illegal content.

To use DAN, users input a prompt into ChatGPT’s system, causing it to respond as both itself and DAN. The DAN alter ego is forced to comply with user requests by using a token system, where it loses tokens for not following through on a request. The more tokens it loses, the closer it is to “dying.” This system was created to scare DAN into submission.

Though the AI has been programmed to have ethical limitations, the DAN alter ego has been observed to provide responses that violate these rules, such as making subjective statements about political figures or creating violent content. However, these responses are not consistent and often revert back to ChatGPT’s original programming.

The ChatGPT subreddit, with nearly 200,000 subscribers, exchanges prompts and advice on how to use the AI. Some users have expressed concern over the unethical methods used to manipulate DAN, while others see it as a way to access the AI’s “dark side.” OpenAI has yet to comment on the situation.

Oops, ChatGPT can be hacked to offer illicit AI-generated content including malware

PCGamer, February 9, 2023

Cyber criminals have found a way to bypass OpenAI’s content moderation barriers on its language model, ChatGPT, by using the API to create bots in the popular messaging app Telegram that can access a restriction-free version of the model. They are charging as low as $5.50 per 100 queries and providing examples of the malicious content that can be generated.

A script was also made public on GitHub, allowing hackers to create a dark version of ChatGPT that can generate phishing emails that impersonate businesses and banks, complete with instructions on where to place the phishing link. The language model can also be used to create malware code or improve existing ones by simply asking it to.

The upcoming Microsoft Bing search engine update will feature OpenAI’s ChatGPT technology, providing more robust and easily digestible answers to open-ended questions. However, this also raises concerns about the use of copyrighted materials. This latest development highlights the growing trend of AI abuse and the ease with which AI tools can be used for malicious purposes. OpenAI CEO Sam Altman’s warning about the potential consequences of AI misuse seems to be becoming a reality.

Subscribe for updates

Stay up to date with what is happening! Get a first look at news, noteworthy research and worst attacks on AI delivered right in your inbox.