The Adversa team brings you a weekly selection of the best research in the field of artificial intelligence security.

Smart healthcare systems are gaining popularity thanks to IoT and wireless connectivity. However, adversarial attacks remain a serious problem for them. A variety of machine learning approaches attempt to counter such attacks, but attacks can still cause misclassifications that lead to poor decisions such as false diagnoses. In this article, Arawinkumaar Selvakkumar, Shantanu Pal, and Zahra Jadidi discuss the different types of adversarial attacks and their impact on smart healthcare systems. The paper proposes a model for studying how adversarial attacks affect machine learning classifiers.

The evaluation uses a medical imaging dataset, on which the proposed model classifies images with high accuracy. The model is then attacked with the Fast Gradient Sign Method (FGSM) to induce misclassifications. A VGG-19 model is trained on the medical dataset, and FGSM is then applied to this convolutional neural network (CNN) to measure the impact. According to the study, the attack causes images to be misclassified, and model accuracy drops from 88% to 11%.
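FGSM takes a single step of size ε in the direction of the sign of the loss gradient with respect to the input: x_adv = x + ε · sign(∇x L(x, y)). The following is a minimal sketch of that update. It uses a toy logistic-regression classifier in plain Python instead of the VGG-19 network used in the study. The weights, input, and ε are made-up values, and clipping to [0, 1] assumes normalized pixel intensities.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def input_gradient(w, b, x, y):
    """Gradient of the binary cross-entropy loss with respect to the input x.

    For logistic regression this has the closed form (p - y) * w.
    """
    p = predict_proba(w, b, x)
    return [(p - y) * wi for wi in w]


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """One-step FGSM: x_adv = clip(x + eps * sign(grad_x L), 0, 1)."""
    grad = input_gradient(w, b, x, y)
    return [min(1.0, max(0.0, xi + eps * sign(g))) for xi, g in zip(x, grad)]
```

With w = [2.0, -3.0, 1.5], b = -0.5 and x = [0.6, 0.2, 0.5], the clean input is scored as class 1 (p ≈ 0.70). `fgsm(w, b, x, 1, 0.3)` returns [0.3, 0.5, 0.2], which the model scores as class 0 (p ≈ 0.25). For a deep network such as VGG-19, the input gradient would come from backpropagation rather than this closed form, but the update step is the same.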

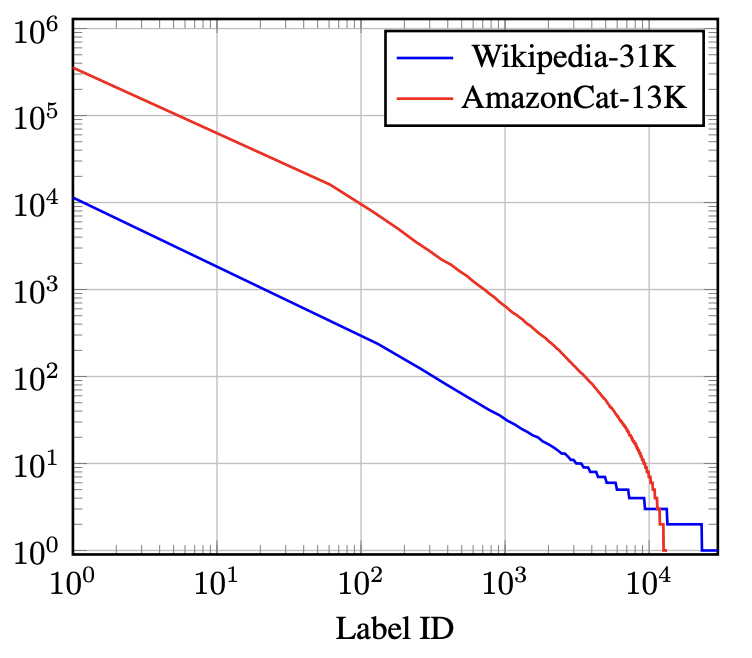

Extreme multi-label text classification (XMTC) is a text classification problem in which the output space is extremely large, each data point can have several positive labels, and the data follow a highly imbalanced distribution. Driven by applications such as recommendation engines and automatic web-scale document tagging, XMTC research has focused on improving prediction accuracy and handling imbalanced data. However, the robustness of XMTC models to adversarial examples has been largely overlooked.

This study examines how XMTC models behave under adversarial attacks, starting from a definition of adversarial attacks on multi-label text classifiers. Attacks fall into two types. In positive-targeted attacks, a target positive label should fall out of the top-k predicted labels. In negative-targeted attacks, a target negative label should be pushed into the top-k predicted labels. Experiments with APLC-XLNet and AttentionXML show that XMTC models are highly vulnerable to positive-targeted attacks but more robust to negative-targeted ones.
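The two attack goals differ only in the condition checked on the model's top-k predictions for the adversarial input. The sketch below encodes just those success criteria and assumes the classifier outputs one score per label. It does not generate adversarial text, and the function names and example scores are hypothetical.

```python
from typing import Sequence, Set


def top_k_labels(scores: Sequence[float], k: int) -> Set[int]:
    """Indices of the k highest-scoring labels (ties favor the lower index)."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return set(ranked[:k])


def positive_targeted_success(adv_scores: Sequence[float], target: int, k: int) -> bool:
    """Succeeds if the target (a true positive label) is no longer in the top-k."""
    return target not in top_k_labels(adv_scores, k)


def negative_targeted_success(adv_scores: Sequence[float], target: int, k: int) -> bool:
    """Succeeds if the target (a negative label) has been pushed into the top-k."""
    return target in top_k_labels(adv_scores, k)
```

For instance, take k = 3 and adversarial scores [0.9, 0.04, 0.1, 0.05, 0.7]. A positive-targeted attack on label 1 has succeeded, because label 1 has dropped out of the top 3. Breaking ties in favor of the lower label index is an arbitrary choice made for this sketch.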

The success rate of positive-targeted attacks is imbalanced across labels. Tail classes are highly vulnerable, and adversarial examples that closely resemble the actual data points can be generated for them. The researchers also study the effect of rebalanced loss functions in XMTC and find that such losses increase the robustness of these tail classes against attacks.
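The exact rebalanced loss used in the paper is not described here, so the following is only an illustrative sketch of one common rebalancing scheme, assumed for this example. Each label gets a binary cross-entropy term whose positive part is weighted by that label's ratio of negatives to positives. As a result, rare tail labels contribute more to the loss when the model misses them. The function names are hypothetical.

```python
import math


def positive_weights(label_counts, num_samples):
    """Per-label weight = #negatives / #positives (assumed rebalancing scheme).

    Rare (tail) labels get larger weights on their positive term.
    """
    return [(num_samples - c) / c for c in label_counts]


def rebalanced_bce(probs, targets, pos_weights, eps=1e-12):
    """Mean over labels of weighted binary cross-entropy for one example."""
    total = 0.0
    for p, y, w in zip(probs, targets, pos_weights):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        total += -(w * y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(probs)
```

With 100 training samples and label counts [50, 2], `positive_weights` returns [1.0, 49.0]. Missing the tail label's positive therefore costs 49 times more than missing the head label's.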

Automatic speech recognition (ASR) systems are used in many applications, but they also enable systematic eavesdropping. The researchers demonstrate a new way to camouflage a person's voice over the air from these systems without disrupting conversation between the people in the room. They introduce predictive attacks, which achieve real-time performance by forecasting the attack that will be most effective in the future.

Even under real-time constraints, the proposed method degrades the established DeepSpeech speech recognition system 4.17 times more than baselines as measured by word error rate, and 7.27 times more as measured by character error rate. The approach is also effective in realistic conditions, over physical distances.
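Word error rate (WER) and character error rate (CER) are both edit distances between the recognized transcript and the reference, normalized by the reference length. WER is computed over words and CER over characters. The following is a minimal sketch. It assumes a non-empty reference, whitespace tokenization for words, and counting every character (including spaces) for CER. Evaluation toolkits may normalize text differently.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance: substitutions, insertions and deletions each cost 1."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(prev[j] + 1,             # delete r
                            curr[j - 1] + 1,         # insert h
                            prev[j - 1] + (r != h))) # substitute (free if equal)
        prev = curr
    return prev[-1]


def wer(reference: str, hypothesis: str) -> float:
    """Word error rate over whitespace-separated tokens."""
    ref_words = reference.split()
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference: str, hypothesis: str) -> float:
    """Character error rate over raw characters, spaces included."""
    return edit_distance(reference, hypothesis) / len(reference)
```

For example, `wer("the cat sat", "the bat sat")` is 1/3 and `cer("kitten", "sitting")` is 0.5. A higher error rate under attack means the camouflage is disrupting transcription more.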