Adversa at HITBSecConf 2021

Adversa participated onsite with an exhibitor booth in the HITBSecConf 2021 on 21-25 November. HITB, known for its cutting-edge technical talks and training in computer security, returned to Abu Dhabi, ...

Adversarial ML | admin | December 2, 2021

Bokeh is the natural blur that a shallow depth of field produces in the out-of-focus areas of a photograph, and viewers are used to seeing it. Because the effect is so popular, this work examines it from an adversarial point of view. AdvBokeh, an adversarial attack based on this effect, embeds calculated deceptive information into bokeh generation to produce a natural-looking adversarial example that does not arouse suspicion in a human observer.

Researchers Yihao Huang, Felix Juefei-Xu, Qing Guo, Weikai Miao, Yang Liu, and Geguang Pu first propose the Depth Controlled Bokeh Synthesis Network (DebsNet), which can flexibly synthesize, refocus, and adjust the bokeh level of an image with a one-step learning procedure. With DebsNet, an attacker can hook into the bokeh generation process and attack the depth map that is needed to render realistic bokeh, tuning it adversarially against the subsequent visual task.

In addition, a depth-based gradient attack is proposed to regularize the gradient. The adversarial examples from AdvBokeh also show a high level of transferability in black-box settings, and the maliciously crafted defocus-blurred images can be used to improve the performance of the SOTA defocus deblurring system, i.e. IFAN.
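To make the idea of attacking a depth map with gradients more concrete, here is a minimal sketch of one generic signed-gradient update on a normalized depth map. It is not the depth-based regularization from the paper, whose details are not given here. The function name `signed_gradient_step`, the flat-list depth representation, the `[0, 1]` depth range, and the `step_size` and `epsilon` parameters are all assumptions made for illustration, and the gradient is supplied by hand rather than computed from a real model.

```python
def sign(x):
    """Return -1, 0, or 1 depending on the sign of x."""
    return (x > 0) - (x < 0)


def signed_gradient_step(depth, grad, original, step_size, epsilon):
    """One generic signed-gradient update on a flattened depth map.

    depth:    current (possibly already perturbed) depth values in [0, 1]
    grad:     gradient of the downstream task loss w.r.t. each depth value
              (hypothetical input; a real attack would backpropagate it)
    original: the clean depth values, used to bound the total perturbation
    """
    updated = []
    for d, g, d0 in zip(depth, grad, original):
        candidate = d + step_size * sign(g)                        # ascend the loss
        candidate = max(d0 - epsilon, min(d0 + epsilon, candidate))  # stay near clean map
        updated.append(max(0.0, min(1.0, candidate)))              # keep a valid depth
    return updated


clean_depth = [0.2, 0.5, 0.99]
toy_gradient = [1.0, -2.0, 0.5]  # made-up values for the example
adv_depth = signed_gradient_step(clean_depth, toy_gradient, clean_depth,
                                 step_size=0.05, epsilon=0.03)
```

Bounding the change by `epsilon` keeps the perturbed depth map close to the clean one, which fits the goal of a bokeh effect that still looks natural.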

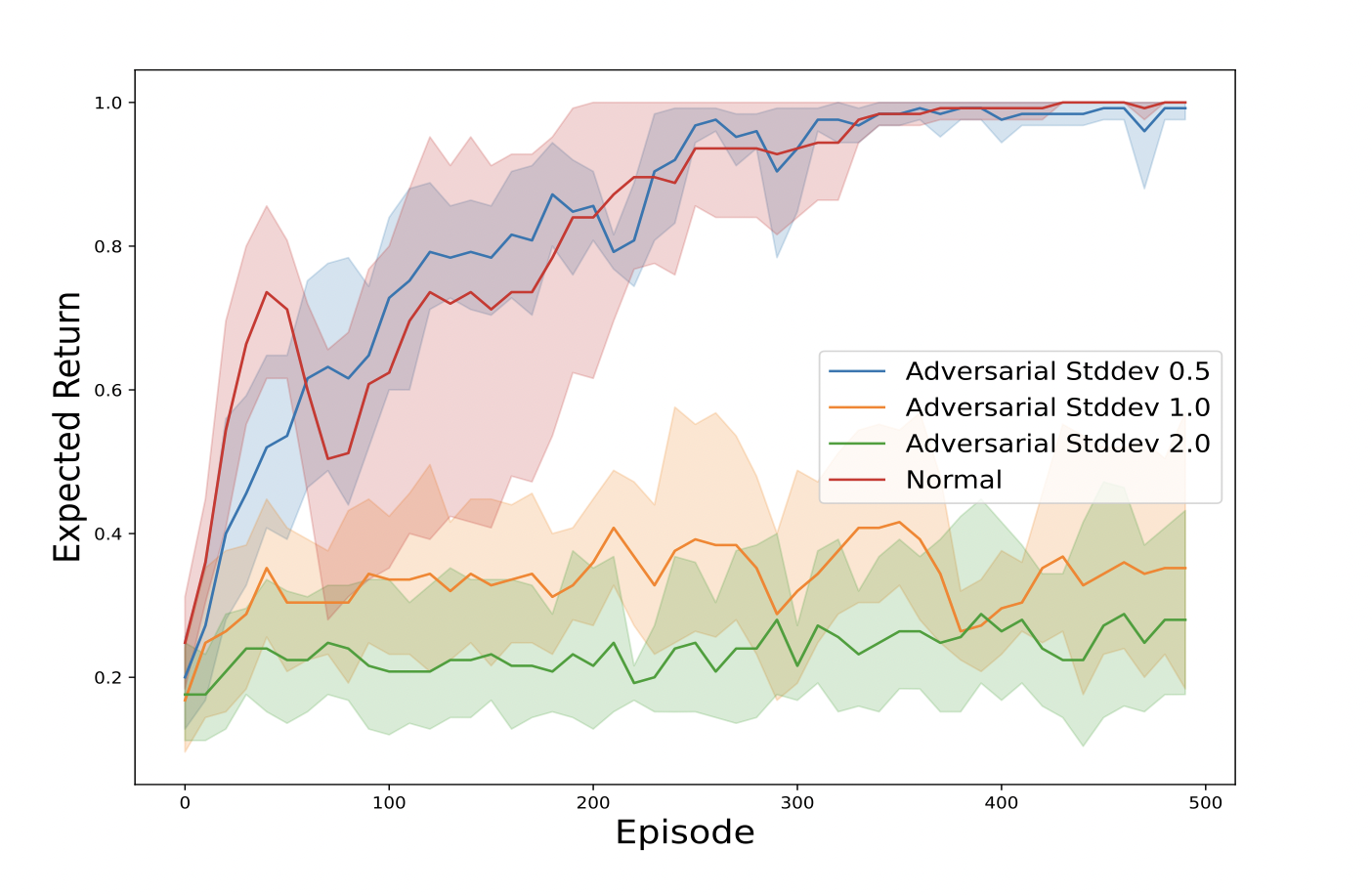

Single-agent reinforcement learning algorithms are ill-suited to fostering collaboration in a multi-agent environment. Techniques that counteract non-cooperative behavior are necessary to facilitate the training of several agents, and this is precisely the purpose of cooperative AI.

This work by Ted Fujimoto and Arthur Paul Pedersen focuses on two main things. First, it argues that three algorithms inspired by human-like social intelligence introduce new vulnerabilities, unique to cooperative AI, that attackers can exploit. Second, the study presents an experiment showing that simple adversarial perturbations of the agents' beliefs can negatively affect performance. In turn, this demonstrates that formal representations of social behavior can be vulnerable to adversarial attacks.
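The effect of a belief perturbation can be illustrated with a toy example. The sketch below is not the experiment from the paper: it assumes a single agent that holds a probability distribution over two hidden partner states and picks the action with the highest expected payoff. The helper names `perturb_belief` and `best_action`, the payoff table, and the noise values are all hypothetical.

```python
def perturb_belief(belief, noise, epsilon):
    """Shift a belief distribution by epsilon * noise, then renormalize."""
    shifted = [max(b + epsilon * n, 0.0) for b, n in zip(belief, noise)]
    total = sum(shifted)
    if total == 0.0:
        return list(belief)  # degenerate case: fall back to the clean belief
    return [s / total for s in shifted]


def best_action(belief, payoff):
    """Pick the action with the highest expected payoff under `belief`.

    payoff[a][s] is the reward for action a when the hidden state is s.
    """
    expected = [sum(b * row[s] for s, b in enumerate(belief)) for row in payoff]
    return max(range(len(payoff)), key=lambda a: expected[a])


# Toy setup (assumed): action 0 pays off in state 0, action 1 in state 1.
payoff = [[1.0, 0.0],
          [0.0, 1.0]]
clean_belief = [0.6, 0.4]
attacked_belief = perturb_belief(clean_belief, noise=[-1.0, 1.0], epsilon=0.15)

clean_choice = best_action(clean_belief, payoff)        # state 0 looks more likely
attacked_choice = best_action(attacked_belief, payoff)  # the small shift flips the choice
```

In this toy setting a shift of 0.15 in the belief is enough to change the chosen action, which is the kind of sensitivity the study points to.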

Much effort has gone in recent years into attacking and defending 2D image models, but few methods investigate the vulnerability of 3D models. Existing 3D attacks usually perturb individual points of a point cloud, which results in deformed structures or outliers that humans can perceive easily. Their adversarial examples are also generated in a white-box setting and often suffer from low transfer success rates when used against remote black-box models. In this paper, Daizong Liu and Wei Hu look at 3D point cloud attacks from two new and challenging perspectives and present the new Imperceptible Transfer Attack (ITA).

The researchers focus on two characteristics of the attack. The first is imperceptibility: the perturbation of each point is constrained to the direction of its normal vector on the neighborhood surface, so the generated examples keep similar geometric properties and are harder to notice. The second is transferability: a new adversarial transformation model is designed to generate the most harmful distortions, and the adversarial examples are forced to resist them, which improves their transferability to unknown black-box models. In addition, more robust black-box 3D models are trained to defend against such ITA attacks by learning more discriminative point cloud representations. Extensive evaluations demonstrate that the ITA attack is more imperceptible and more transferable than existing state-of-the-art attacks.
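The normal-direction constraint can be sketched in a few lines. The code below is an illustration under stated assumptions, not the authors' implementation: it assumes the surface normals are already known for every point, and the names `project_along_normal`, `perturb_points`, and the `max_shift` bound are hypothetical. Each raw perturbation is reduced to its component along the point's normal and clipped to a small magnitude.

```python
import math


def project_along_normal(delta, normal, max_shift):
    """Keep only the component of `delta` along `normal`, clipped to max_shift."""
    length = math.sqrt(sum(n * n for n in normal))
    if length == 0.0:
        return (0.0, 0.0, 0.0)  # no usable normal: leave the point unmoved
    unit = tuple(n / length for n in normal)
    step = sum(d * u for d, u in zip(delta, unit))  # signed length along the normal
    step = max(-max_shift, min(max_shift, step))    # bound the displacement
    return tuple(step * u for u in unit)


def perturb_points(points, normals, deltas, max_shift=0.05):
    """Move each 3D point along its own normal only."""
    moved = []
    for p, n, d in zip(points, normals, deltas):
        shift = project_along_normal(d, n, max_shift)
        moved.append(tuple(pi + si for pi, si in zip(p, shift)))
    return moved


# One point on a flat patch whose normal points along +z (toy data).
points = [(0.0, 0.0, 0.0)]
normals = [(0.0, 0.0, 2.0)]  # not unit length on purpose; it is normalized inside
raw_deltas = [(1.0, 1.0, 0.2)]
shifted = perturb_points(points, normals, raw_deltas, max_shift=0.05)
```

Because the tangential part of the perturbation is discarded, points cannot slide across the surface or drift away as outliers; they only move slightly in or out along the local normal.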

Written by: admin

Adversa AI, Trustworthy AI Research & Advisory