Each week, the Adversa team selects the best research in the field of artificial intelligence security for you.

Deep neural networks have driven much of the recent progress in Multi-Object Tracking (MOT), and trackers based on Joint Detection and Tracking (JDT) have drawn particular attention from specialists. Attacking MOT is difficult because its mature association algorithms are designed to be robust against tracking errors, and as a result its security has received very little study.

To address this gap, researchers Delv Lin, Qi Chen, Chengyu Zhou, and Kun He examine the vulnerabilities of JDT trackers and present a new adversarial attack, Tracklet-Switch (TraSw), against the full MOT tracking pipeline. They design a push-pull loss and a center leaping optimization to craft adversarial examples that target both the re-ID features and the object detection. By attacking only a few frames, TraSw can make the tracker fail to track the targets in subsequent frames. The method was evaluated on advanced deep trackers (e.g., FairMOT, JDE, ByteTrack) using the MOTChallenge datasets (2DMOT15, MOT17, and MOT20), and extensive experiments confirm its effectiveness.
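To make the push-pull idea more concrete, here is a minimal Python sketch that assumes re-ID embeddings are compared with cosine similarity. The function names and the exact form of the loss are illustrative assumptions, not the formulation from the TraSw paper, and the center leaping optimization is not shown.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def push_pull_loss(adv_feat, own_tracklet_feat, other_tracklet_feat):
    """Hypothetical push-pull objective (illustrative, not the paper's formula).

    Minimizing this value pushes the attacked detection's re-ID feature away
    from its own tracklet and pulls it toward another tracklet, which makes
    the association step more likely to swap the two identities.
    """
    push = cosine(adv_feat, own_tracklet_feat)    # similarity to reduce
    pull = cosine(adv_feat, other_tracklet_feat)  # similarity to increase
    return push - pull


own = [1.0, 0.0]
other = [0.0, 1.0]
print(push_pull_loss([1.0, 0.0], own, other))  # feature still matches its own tracklet
print(push_pull_loss([0.0, 1.0], own, other))  # feature now matches the other tracklet
```

A lower value means the perturbed feature looks more like the wrong tracklet; an attacker would minimize it with gradient steps on the input frame rather than on the feature directly.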

Deep learning-based facial recognition (FR) models have made significant strides in recent years, even since wearing medical face masks became commonplace during the global pandemic. Not surprisingly, researchers have paid close attention to the security of such systems. Physical-world attacks are a particular stumbling block, because they require a physical object that can look suspicious to people nearby.

In this paper, researchers Alon Zolfi, Shai Avidan, Yuval Elovici, and Asaf Shabtai propose Adversarial Mask, a physical universal adversarial perturbation (UAP) against modern FR models that is applied to face masks as a carefully crafted pattern. The experiments examine how well the adversarial mask transfers to a wide range of FR model architectures and datasets, and real-world experiments, in which the adversarial pattern was printed onto a fabric medical face mask, validate its effectiveness.
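The "universal" part of a UAP means that one pattern is shared across every face it is applied to. A minimal Python sketch of that application step, assuming flat lists of pixel intensities in [0, 1] and a binary mask region, could look like this; the function name and data layout are hypothetical, and the optimization of the pattern itself (and fitting it to each face's pose and shape) is not shown.

```python
def apply_universal_pattern(face, mask_region, pattern):
    """Paste one shared pattern into the mask region of a face image.

    face, pattern: flat lists of pixel intensities in [0, 1], same length.
    mask_region: flat list of 0/1 values, 1 where the face mask covers the face.
    Returns face * (1 - M) + pattern * M, clipped to [0, 1].
    """
    if not (len(face) == len(mask_region) == len(pattern)):
        raise ValueError("face, mask_region and pattern must have the same length")
    out = []
    for x, m, p in zip(face, mask_region, pattern):
        value = x * (1 - m) + p * m  # keep the face outside the mask, pattern inside
        out.append(min(1.0, max(0.0, value)))
    return out


# The same ("universal") pattern is reused for every face.
pattern = [0.9, 0.1, 0.9, 0.1]
faces = [[0.5, 0.5, 0.5, 0.5], [0.2, 0.3, 0.4, 0.6]]
masks = [[0, 0, 1, 1], [0, 1, 1, 1]]
for face, mask in zip(faces, masks):
    print(apply_universal_pattern(face, mask, pattern))
```

In a real attack, the pattern would be optimized over many faces so that the FR model's embeddings of masked faces no longer match the wearer's identity.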

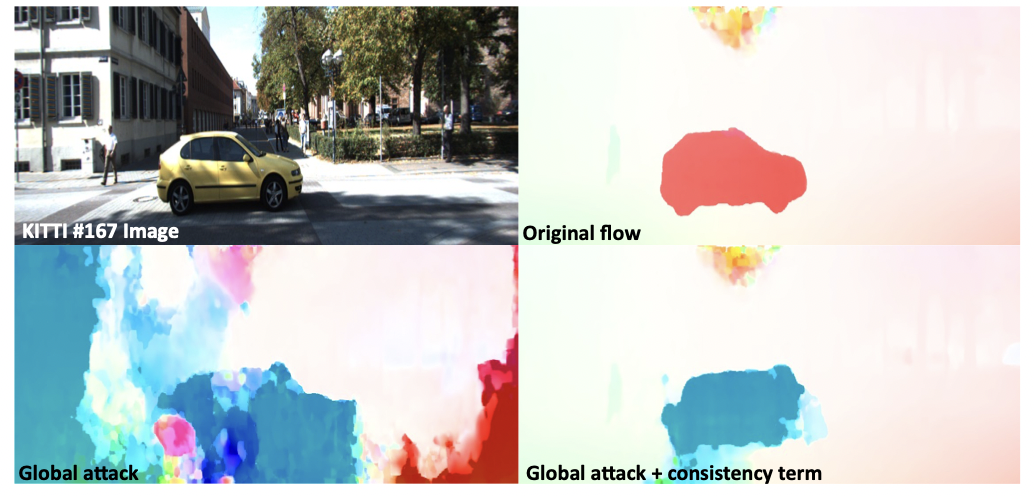

Researchers Tom Koren, Lior Talker, Michael Dinerstein, and Roy J Jevnisek present a new approach to semantically targeted adversarial attacks against optical flow. Such attacks aim to corrupt the flow predictions for a specific object category or instance. Attackers usually try to hide the adversarial perturbation in the input, but a quick scan of the output often reveals the attack. The method presented in this paper also hides the attacker's intent in the output, using a regularization term that encourages consistency in off-target regions.
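The role of the off-target regularization term can be illustrated with a minimal Python sketch, assuming the attacker wants a specific flow (for example, zero motion) on the target object and uses squared Euclidean distances between flow vectors. The function name, the `target_flow` input, and the weight `lam` are hypothetical; the paper's actual loss may use different distances and weighting.

```python
def consistent_semantic_attack_loss(adv_flow, clean_flow, target_flow, target_mask, lam=1.0):
    """Hypothetical objective for a semantically targeted optical flow attack.

    adv_flow, clean_flow, target_flow: lists of (u, v) flow vectors, one per pixel.
    target_mask: list of 0/1 values, 1 on pixels of the attacked object.
    The first term drives the flow on the target toward the attacker's desired
    flow; the second term (weighted by lam) keeps off-target flow close to the
    clean prediction, so the output does not reveal the attack.
    """
    def sq_dist(a, b):
        return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2

    on_terms = [sq_dist(a, t) for a, t, m in zip(adv_flow, target_flow, target_mask) if m]
    off_terms = [sq_dist(a, c) for a, c, m in zip(adv_flow, clean_flow, target_mask) if not m]
    target_term = sum(on_terms) / len(on_terms) if on_terms else 0.0
    consistency_term = sum(off_terms) / len(off_terms) if off_terms else 0.0
    return target_term + lam * consistency_term


clean = [(1.0, 0.0), (1.0, 0.0)]   # pixel 0 is on the object, pixel 1 is background
target = [(0.0, 0.0), (0.0, 0.0)]  # attacker wants the object to appear static
mask = [1, 0]
print(consistent_semantic_attack_loss([(0.0, 0.0), (1.0, 0.0)], clean, target, mask))  # ideal attack
print(consistent_semantic_attack_loss([(0.0, 0.0), (0.0, 0.0)], clean, target, mask))  # background also changed
```

Without the second term, an attack that also changes the background flow would score as well as one that changes only the object, which is exactly the kind of visible side effect the regularization is meant to penalize.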

Extensive experiments on leading optical flow models show the benefits of this approach in both white-box and black-box settings. The authors also demonstrate that the attack is effective against downstream tasks that depend on optical flow.