Each week, the Adversa team puts together a selection of the best research in the field of artificial intelligence security.

Intelligent Transportation Systems applications often depend on predicting traffic conditions. Recent research on this problem has focused on network-wide, multi-step forecasting, where most approaches are built on graph neural networks. Such models have also been shown to be vulnerable to adversarial examples.

In this paper, Bibek Poudel and Weizi Li propose an adversarial attack framework that treats the target model as a black box. The attacker is assumed to be able to query the forecasting model with any input and observe the corresponding output. Using these input-output pairs, the attacker trains a substitute model and uses it to generate adversarial signals.
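The query-and-imitate idea described above can be illustrated with a toy sketch. This is not the authors' implementation: the `oracle` function, its hidden weights, the linear stand-in model, and the sign-based perturbation step are all assumptions chosen to keep the example small, whereas the paper targets graph neural network forecasters.

```python
import random

def oracle(x):
    """Hypothetical black-box forecaster: the attacker only sees outputs."""
    hidden_w = [0.8, -0.5, 0.3]  # assumed weights, unknown to the attacker
    return sum(w * xi for w, xi in zip(hidden_w, x))

def fit_surrogate(queries, answers, lr=0.05, epochs=500):
    """Fit a linear stand-in model to (input, output) pairs by gradient descent."""
    w = [0.0] * len(queries[0])
    for _ in range(epochs):
        for x, y in zip(queries, answers):
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    return w

def perturb(x, surrogate_w, eps):
    """Shift each input by eps along the sign of the stand-in model's gradient."""
    sign = lambda v: (v > 0) - (v < 0)
    return [xi + eps * sign(wi) for xi, wi in zip(x, surrogate_w)]

# Step 1: query the black box with inputs of the attacker's choosing.
rng = random.Random(0)
queries = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(50)]
answers = [oracle(q) for q in queries]

# Step 2: train the stand-in model on the collected pairs.
surrogate_w = fit_surrogate(queries, answers)

# Step 3: craft a perturbation on the stand-in model and apply it to the target.
clean = [0.2, 0.4, -0.1]
adversarial = perturb(clean, surrogate_w, eps=0.1)
shift = oracle(adversarial) - oracle(clean)
print(f"prediction shift caused by the perturbation: {shift:.3f}")
```

In this toy setting the stand-in model recovers the hidden weights almost exactly, so a perturbation computed without any access to the target's internals still moves its prediction. Real forecasting models are nonlinear, and how well such perturbations carry over is what the paper measures.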

To test the effectiveness of the attack, the authors evaluate two state-of-the-art graph neural network-based models (GCGRNN and DCRNN) and show that the prediction accuracy of the target model can be degraded by up to 54%. For comparison, two conventional statistical models are also examined; they turn out to be either only slightly affected (less than 3%) or immune to the adversarial attack.

Modern DNNs are highly vulnerable to both adversarial attacks and backdoor attacks. Backdoored models deviate from expected behavior on inputs that contain a predefined trigger, while their performance on clean data is unaffected.

This paper by M. Caner Tol, Saad Islam, Berk Sunar, and Ziming Zhang explores the viability of backdoor injection attacks on real DNN deployments in hardware and addresses the practical issues of hardware implementation from a new optimization perspective.

Vulnerable memory locations are very rare, device-specific, and sparsely distributed, so the researchers propose a new network training algorithm based on constrained optimization to make a backdoor injection attack realistic in hardware.

By modifying parameters uniformly across the convolutional and fully connected layers, and by jointly optimizing the trigger pattern, the method achieves state-of-the-art attack performance with fewer bit flips. For example, on a hardware-deployed ResNet-20 model trained on CIFAR-10, it achieves over 91% test accuracy and a 94% attack success rate by flipping only 10 of 2.2 million bits.
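To give a sense of why a handful of bit flips can matter, here is a minimal sketch of what one flip does to a single stored weight. It assumes weights are kept as 8-bit signed two's-complement integers, which the summary above does not state; the `flip_bit` helper is hypothetical and only illustrates the arithmetic, not the attack or the optimization from the paper.

```python
def flip_bit(weight, bit, width=8):
    """Flip one bit of a signed two's-complement integer weight."""
    mask = (1 << width) - 1
    raw = (weight & mask) ^ (1 << bit)
    # Reinterpret the raw bit pattern as a signed value.
    return raw - (1 << width) if raw >= 1 << (width - 1) else raw

weight = 3                             # bit pattern 00000011
low_bit_flip = flip_bit(weight, 0)     # 00000010, a small change
high_bit_flip = flip_bit(weight, 7)    # 10000011, sign bit flipped

# Share of the model's bits touched in the reported experiment.
flipped_fraction = 10 / 2_200_000

print(low_bit_flip, high_bit_flip, f"{flipped_fraction:.2e}")
```

Flipping the most significant bit turns a small positive weight into a large negative one, while flipping the lowest bit barely changes it. Ten flips out of 2.2 million bits is roughly 0.00045% of the model, so which bits get flipped matters far more than how many.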

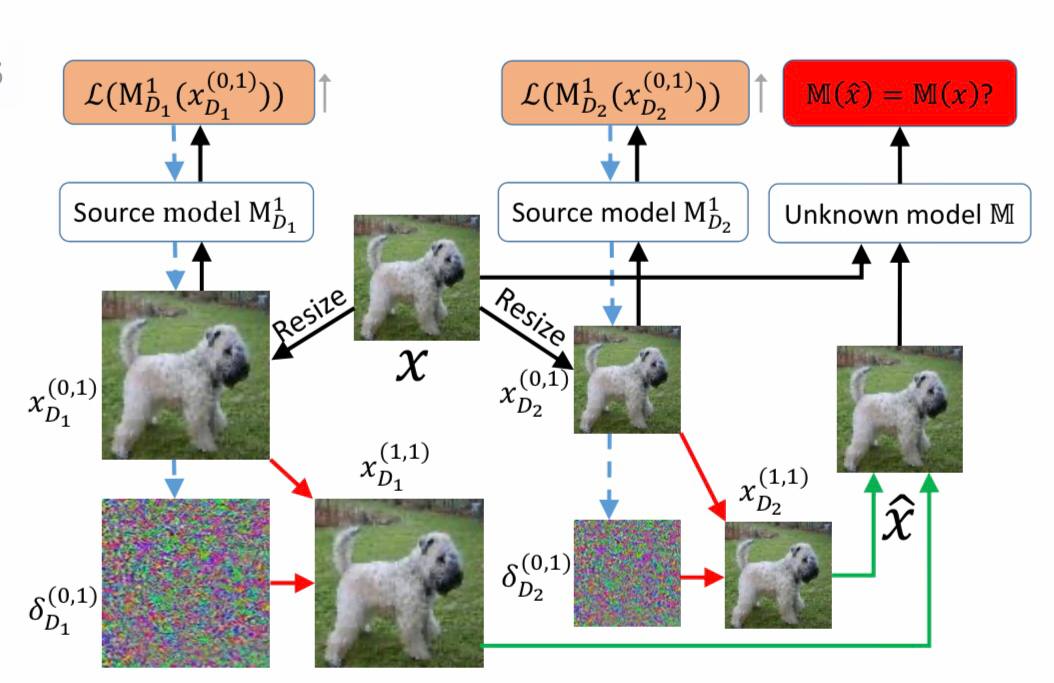

Deep neural networks (DNNs) have been shown to be vulnerable to transfer attacks in the query-free black-box setting. However, all previous research on transfer attacks assumes that the white-box surrogate models and the black-box victim model are trained on the same dataset, which means the attacker implicitly knows the label set and the input size of the victim model. In practice this assumption is unrealistic: the attacker may not know the dataset used by the victim model and may need to attack arbitrary images that do not come from the same dataset.

Therefore, the researchers define a new Generalized Transferable Attack (GTA) problem, which assumes that the attacker has a set of surrogate models trained on different datasets, none of which is the dataset used by the victim model. To solve it, they propose a method called Image Classification Eraser (ICE), which erases classification information from any encountered image from an arbitrary dataset. Extensive experiments on CIFAR-10, CIFAR-100, and TieredImageNet demonstrate the effectiveness of ICE on the GTA problem. Existing transfer attack methods can also be modified to tackle GTA, but they perform significantly worse than ICE.