Each week, the Adversa team selects the best research in the field of artificial intelligence security.

Many readers have probably already heard of the unethical applications DeepFake and DeepNude. They were made possible by high-quality image-to-image (Img2Img) translation with GANs. This unethical use of the technology poses a threat to society.

In their research, Chin-Yuan Yeh, Hsi-Wen Chen, Hong-Han Shuai, De-Nian Yang, and Ming-Syan Chen address the problem with the Limit-Aware Self-Guiding Gradient Sliding Attack (LaS-GSA), which follows the Nullifying Attack to cancel the img2img translation process in the black-box setting. The researchers also introduce limit-aware random gradient-free estimation and a gradient sliding mechanism to estimate a gradient that respects the adversarial limit, that is, the constraints on the pixel values of the adversarial example. Extensive experiments show that LaS-GSA needs fewer queries to nullify the image translation process, and achieves higher success rates, than four existing black-box methods.
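To make the idea of query-only gradient estimation under a pixel-value limit more concrete, here is a minimal sketch in plain Python. It is not the authors' LaS-GSA: it uses generic random gradient-free estimation followed by a simple projection, and it omits the limit-aware estimation, gradient sliding, and self-guiding prior. The names `toy_translate`, `make_nullify_loss`, `rgf_gradient`, `project`, and `attack`, as well as all parameter values, are assumptions made for illustration.

```python
import random


def toy_translate(x):
    """Hypothetical stand-in for a black-box img2img model (not a real GAN)."""
    return [0.5 * v + 0.3 for v in x]


def make_nullify_loss(x_orig):
    """Nullifying objective: the model output should stay close to the original image."""
    def loss(x):
        return sum((y - o) ** 2 for y, o in zip(toy_translate(x), x_orig))
    return loss


def rgf_gradient(loss, x, num_queries=20, sigma=1e-3, rng=None):
    """Estimate the gradient of `loss` at `x` from loss queries only."""
    rng = rng or random.Random(0)
    base = loss(x)
    grad = [0.0] * len(x)
    for _ in range(num_queries):
        # Random unit direction.
        u = [rng.gauss(0.0, 1.0) for _ in x]
        norm = sum(v * v for v in u) ** 0.5
        u = [v / norm for v in u]
        # Finite-difference slope along u. The toy loss accepts any real input,
        # so the probe is not clipped to the valid pixel range here.
        probe = [xi + sigma * ui for xi, ui in zip(x, u)]
        slope = (loss(probe) - base) / sigma
        grad = [g + slope * ui / num_queries for g, ui in zip(grad, u)]
    return grad


def project(x_adv, x_orig, eps):
    """Enforce the adversarial limit: eps-ball around x_orig and pixel range [0, 1]."""
    out = []
    for a, o in zip(x_adv, x_orig):
        a = min(max(a, o - eps), o + eps)
        out.append(min(max(a, 0.0), 1.0))
    return out


def sign(v):
    return 1.0 if v > 0 else -1.0 if v < 0 else 0.0


def attack(loss, x_orig, eps=0.1, step=0.02, iters=50, seed=0):
    """Signed descent on the estimated gradient, projected after every step."""
    rng = random.Random(seed)
    x = list(x_orig)
    for _ in range(iters):
        g = rgf_gradient(loss, x, rng=rng)
        x = [xi - step * sign(gi) for xi, gi in zip(x, g)]
        x = project(x, x_orig, eps)
    return x


if __name__ == "__main__":
    x0 = [0.2, 0.5, 0.9, 1.0]
    nullify_loss = make_nullify_loss(x0)
    x_adv = attack(nullify_loss, x0)
    print(nullify_loss(x0), nullify_loss(x_adv))
```

In this sketch the projection is applied only after each step, so part of every step can be wasted when a pixel is already at its limit. The paper's abstract says the limit-aware techniques are meant to estimate a gradient that already respects that limit.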

It’s no secret that modern deep networks are susceptible to adversarial attacks. However, most common methods fail to generate human-interpretable adversarial perturbations and therefore pose only a limited threat in the physical world.

For this reason, Stephen Casper, Max Nadeau, and Gabriel Kreiman have developed feature-level adversarial perturbations using deep image generators and a new optimization objective, which they call feature-fool attacks. Their aim was to study the feature-class associations that networks learn and to better understand the real-world threats these networks face. The study shows that these attacks are versatile and uses them to create targeted feature-level attacks at the ImageNet scale that are simultaneously interpretable, universal to any source image, and physically realizable.

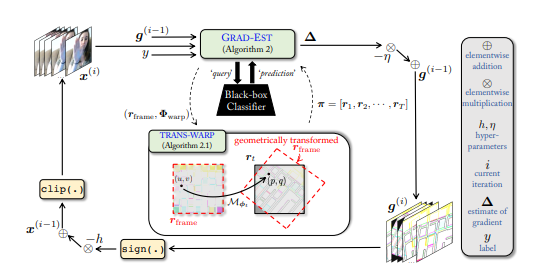

Compared to attacks on image classification models, which have attracted a lot of research attention, black-box adversarial attacks on video classification models have been little studied to date. This may be because the temporal dimension of video creates significant additional challenges for gradient estimation. Query-efficient black-box attacks rely on effectively estimated gradients to maximize the probability of misclassifying the target video.

In this work, researchers Shasha Li, Abhishek Aich, Shitong Zhu, M. Salman Asif, Chengyu Song, Amit K. Roy-Chowdhury, and Srikanth Krishnamurthy show that such effective gradients can be found by parametrizing the temporal structure of the search space with geometric transformations. More specifically, the authors design a new iterative algorithm, Geometric TRAnsformed Perturbations (GEO-TRAP), to attack video classification models. GEO-TRAP uses standard geometric transformation operations to reduce the search for effective gradients to a search over the small group of parameters that define these operations. As a result, the algorithm produces successful perturbations with surprisingly few queries. It exposes vulnerabilities in a range of video classification models and achieves new state-of-the-art results in the black-box setting on two large datasets.
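The search-space reduction described above can be illustrated with a small sketch in plain Python. It is not the GEO-TRAP algorithm itself. It assumes a translation-only transformation, uses a simple accept-if-better random search rather than the authors' gradient estimation, and runs against a toy score function. The names `shift_frame`, `warped_direction`, `apply_step`, `geo_search_attack`, and `toy_score` are hypothetical.

```python
import random


def shift_frame(frame, dx, dy):
    """Cyclically translate a 2D frame by (dx, dy) pixels."""
    h, w = len(frame), len(frame[0])
    return [[frame[(r - dy) % h][(c - dx) % w] for c in range(w)] for r in range(h)]


def warped_direction(base, num_frames, dx, dy):
    """Video-shaped direction: frame t is `base` translated by t * (dx, dy)."""
    return [shift_frame(base, t * dx, t * dy) for t in range(num_frames)]


def apply_step(adv, direction, scale, orig, eps):
    """Move along `direction`, then clip to the eps-ball around `orig` and to [0, 1]."""
    out = []
    for af, df, of in zip(adv, direction, orig):
        frame = []
        for ar, dr, orow in zip(af, df, of):
            row = []
            for a, d, o in zip(ar, dr, orow):
                v = min(max(a + scale * d, o - eps), o + eps)
                row.append(min(max(v, 0.0), 1.0))
            frame.append(row)
        out.append(frame)
    return out


def geo_search_attack(score, video, eps=0.1, step=0.05, iters=30, seed=0):
    """Lower a black-box `score` using temporally structured search directions."""
    rng = random.Random(seed)
    t, h, w = len(video), len(video[0]), len(video[0][0])
    adv, best, queries = video, score(video), 1
    for _ in range(iters):
        # One noise frame plus two translation parameters define the whole
        # direction: h*w + 2 numbers instead of t*h*w.
        base = [[rng.choice((-1.0, 1.0)) for _ in range(w)] for _ in range(h)]
        dx, dy = rng.randint(-1, 1), rng.randint(-1, 1)
        direction = warped_direction(base, t, dx, dy)
        for sgn in (1.0, -1.0):
            cand = apply_step(adv, direction, sgn * step, video, eps)
            val = score(cand)
            queries += 1
            if val < best:
                adv, best = cand, val
                break
    return adv, best, queries


def toy_score(video):
    """Hypothetical true-class score: mean brightness of the clip."""
    pixels = [p for f in video for row in f for p in row]
    return sum(pixels) / len(pixels)


if __name__ == "__main__":
    clip = [[[0.5] * 4 for _ in range(4)] for _ in range(4)]  # 4 frames of 4x4
    adv, best, queries = geo_search_attack(toy_score, clip)
    print(toy_score(clip), best, queries)
```

The point of the sketch is only the parametrization: each candidate direction for the whole clip is generated from a single frame of noise and a pair of shift parameters, so every query explores a temporally coherent perturbation instead of independent noise in every frame.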