Each week, the Adversa team selects the best research in the field of artificial intelligence security.

Machine learning models are easily fooled by attacks that exploit their blind spots. Digital “stickers,” also called adversarial patches, can interfere with facial recognition, surveillance systems, and self-driving cars, although most of these patches can be neutralized fairly easily with a classification network known as an adversarial patch detector, which distinguishes adversarial patches from original images.

An object detector, in turn, identifies the types of objects in an image, for example distinguishing one object from another, and marks their boundaries by drawing so-called bounding boxes around them. However, such detectors have to be trained to work well. Against this background, the researchers Zijian Zhu, Hang Su, Chang Liu, Wenzhao Xiang, and Shibao Zheng have developed a new approach, the Low-Detectable Adversarial Patch (LDAP), which attacks an object detector with small, texture-matched adversarial patches, making the patches themselves less likely to be recognized.

The method uses several geometric primitives to model the shapes and positions of the patches. To improve attack performance, the researchers also assign different weights to the bounding boxes in the loss function. Experiments on the common COCO detection dataset and the D2-City driving video dataset show that LDAP is highly effective.
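As a rough illustration of the two ideas above, the following Python sketch builds patch masks from simple geometric primitives and combines per-box detection scores with unequal weights. It is a minimal sketch under stated assumptions, not the authors' implementation: the function names, the choice of circle and rectangle primitives, and the weighting scheme are all hypothetical.

```python
import numpy as np

def circle_mask(h, w, cy, cx, r):
    """Boolean mask of a filled circle (one hypothetical geometric primitive)."""
    yy, xx = np.mgrid[0:h, 0:w]
    return (yy - cy) ** 2 + (xx - cx) ** 2 <= r ** 2

def rect_mask(h, w, top, left, height, width):
    """Boolean mask of a filled axis-aligned rectangle."""
    mask = np.zeros((h, w), dtype=bool)
    mask[top:top + height, left:left + width] = True
    return mask

def apply_patch(image, mask, texture):
    """Replace the masked pixels of an HxWx3 image with the patch texture."""
    return np.where(mask[..., None], texture, image)

def weighted_box_loss(scores, weights):
    """Weighted mean of per-box confidence scores.

    Assumption: an attacker would minimize this value, and boxes with a
    larger weight contribute more to the loss. How the weights are chosen
    is not specified here.
    """
    scores = np.asarray(scores, dtype=float)
    weights = np.asarray(weights, dtype=float)
    return float(np.sum(weights * scores) / np.sum(weights))
```

In a real attack the mask parameters and the texture would be optimized against the detector. Here they are fixed inputs, so the sketch shows only the data flow.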

Adversarial examples are a major threat to modern object detectors and jeopardize every application that relies on them.

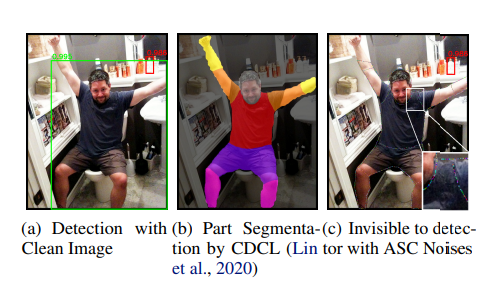

Among attacks regularized by the ℓp norm, the ℓ0 attack aims to change as few pixels as possible. The problem is harder than it seems, because the shape and the texture of the perturbation usually have to be optimized at the same time, which is an NP-hard task. To solve this problem, the researchers Yichi Zhang, Zijian Zhu, Xiao Yang, and Jun Zhu propose a new method, Adversarial Semantic Contour (ASC), which is guided by the contour of the object. This reduces the search space, which speeds up the ℓ0 optimization, and introduces more semantic information, which should affect the detectors more.

The method alternates between optimizing the selection of the modified pixels by sampling and optimizing their colors by gradient descent. According to the experiments, it outperforms the most common handcrafted patterns. ASC can successfully mislead mainstream object detectors, including SSD512, YOLOv4, Mask R-CNN, and Faster R-CNN, among others.
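The alternating scheme can be sketched on a toy problem. The code below is a minimal illustration under loud assumptions, not the ASC algorithm itself: the "detector" is a hypothetical linear score over a flattened grayscale image, the contour is a given list of pixel indices, and the sampling step is a simple random swap that is kept only when it lowers the score.

```python
import numpy as np

def score(x, w):
    """Hypothetical stand-in for detector confidence (lower means the attack works)."""
    return float(w @ x)

def optimize_colors(x, w, selected, steps=20, lr=0.5):
    """Gradient descent on the colors of the selected pixels only."""
    x = x.copy()
    for _ in range(steps):
        grad = w  # gradient of the linear score with respect to x
        x[selected] = np.clip(x[selected] - lr * grad[selected], 0.0, 1.0)
    return x

def contour_attack(x0, w, contour, budget, rounds=50, seed=0):
    """Alternate between sampling which contour pixels to modify and tuning their colors.

    `budget` caps the number of modified pixels (the l0 constraint).
    """
    rng = np.random.default_rng(seed)
    selected = rng.choice(contour, size=budget, replace=False)
    best_x = optimize_colors(x0, w, selected)
    best = score(best_x, w)
    for _ in range(rounds):
        # Sampling step: swap one selected pixel for an unused contour pixel.
        unused = np.setdiff1d(contour, selected)
        if unused.size == 0:
            break
        candidate = selected.copy()
        candidate[rng.integers(budget)] = rng.choice(unused)
        # Color step: re-optimize the colors for the candidate selection.
        cand_x = optimize_colors(x0, w, candidate)
        cand = score(cand_x, w)
        if cand < best:
            selected, best_x, best = candidate, cand_x, cand
    return best_x, selected
```

Restricting candidates to the contour is what shrinks the search space: the sampler only ever chooses among `len(contour)` pixels instead of every pixel in the image.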

In this paper, researchers Inderjeet Singh, Satoru Momiyama, Kazuya Kakizaki, and Toshinori Araki present a new method for creating adversarial examples against face recognition systems (FRS).

An adversarial example (AX) is an image with deliberately crafted noise that is meant to deceive the attacked system into making incorrect predictions. The AXs produced by the proposed method remain robust under real-world changes in brightness. The method applies non-linear brightness transformations and draws on the concept of curriculum learning during the attack generation procedure. In comprehensive experiments in both the digital and the physical world, it outperformed conventional techniques, and it also enables a practical assessment of the risk that brightness-agnostic AXs pose to FRS.
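To make the combination of non-linear brightness changes and curriculum learning more concrete, here is a minimal Python sketch. It rests on assumptions that do not come from the paper: gamma correction is used as the non-linear brightness transformation, and the curriculum is a schedule that starts with no brightness change and gradually widens the range of gamma values. All function names and parameters are hypothetical.

```python
import numpy as np

def gamma_brightness(image, gamma):
    """Non-linear brightness change on an image in [0, 1]; gamma < 1 brightens."""
    return np.clip(image, 0.0, 1.0) ** gamma

def curriculum_gamma_range(step, total_steps, max_log_gamma=1.0):
    """Gamma range at a given attack step: easy (no change) first, wider later."""
    progress = min(max(step / max(total_steps - 1, 1), 0.0), 1.0)
    half_width = progress * max_log_gamma
    return float(np.exp(-half_width)), float(np.exp(half_width))

def sample_transformed(image, step, total_steps, rng):
    """Draw a gamma from the current curriculum range and apply it."""
    lo, hi = curriculum_gamma_range(step, total_steps)
    gamma = np.exp(rng.uniform(np.log(lo), np.log(hi)))
    return gamma_brightness(image, gamma), gamma
```

An attack loop would call `sample_transformed` at every iteration and compute its loss on the transformed image, so that the resulting perturbation is tuned against progressively stronger brightness changes.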