The Adversa team makes for you a weekly selection of the best research in the field of artificial intelligence security

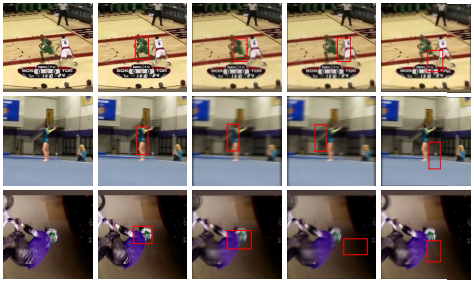

Visual object tracking can be greatly complicated by moving of the object or camera which causes so-called motion blur. In this paper, researchers Qing Guo, Ziyi Cheng, Felix Juefei-Xu, Lei Ma, Xiaofei Xie, and others examine the robustness of visual object trackers against motion blur in the context of an adversarial blur attack (ABA) aiming to online transfer input frames to their motion-blurred counterparts and to fool trackers at the same time.

As part of the attack,adversarial motion blur perturbations are generated on the images or video frames, which misleads the object detection systems and allows a moving object to stay undetected.

Adversarial training has been shown to be effective in increasing the resistance of image classifiers to white box attacks. However, in the case of effectiveness against black box attacks, things are not so obvious.

In this paper, Ali Rahmati, Seyed-Mohsen Moosavi-Dezfooli, and Huaiyu Dai demonstrate that a number of geometric consequences of adversarial training on the decision boundary of DNs can, on the contrary, facilitate some types of black box attacks. Researchers define a robustness metric and show that it may not translate into such a good robustness gain against the decision-based black-box attacks. In addition, the authors draw attention to the fact that in comparison with the regular ones, even white box attacks converge faster against adversarially-trained NNs.

Deep Neural Networks have proven themselves to be highly effective in a wide variety of ML applications. However, it is also known that DNNs are still highly vulnerable to simple adversarial perturbations that affect performance.

In this study, Gihyuk Ko and Gyumin Lim propose an innovative method for identifying adversarial examples using methods developed to explain the model’s behavior. According to the researchers, it was noticed that indignations imperceptible to the human eye can lead to significant changes in the model explanations, which in turn leads to uncommon forms of explanations. Based on this finding, the researchers propose unsupervised detection of adversarial examples implementing reconstructor networks trained only on model explanations of harmless examples. According to the researchers, the method has demonstrated high efficiency in detecting adversarial examples generated by the supreme algorithms and is the first study of its kind with an unsupervised defense method using model explanations.