The Adversa team makes for you a selection of the best research in the field of artificial intelligence and machine learning security for August 2022.

Subscribe for the latest AI Security news: Jailbreaks, Attacks, CISO guides, and more

Modern text-guided image generation models such as DALL-E 2, Imagen, and Parti show great achievements in generating images in any visual domain and any style. However, such developments and technologies also raise ethical issues when misused. For example, such image generation models can be used to create offensive or harmful visual content. For this reason, access to large text-guided image generation models is limited, and the content policies implemented in the prompt filters also moderate their use.

In this paper, Raphaël Millière researched at how text-guided image generation models can be queried using nonces adversarially designed to reliably invoke certain visual concepts. Raphaël Millière introduced two approaches for a such generation:

– Macaronic prompting. It is the development of inscrutable hybrid words by combining units of different languages’ subwords;

– Evocative prompting. Represents the creation of one-time words whose broad morphological characteristics are sufficiently similar to those of existing words to evoke robust visual associations.

Moreover, these methods can be combined to create images related to particular visual concepts. And at the moment there is a serious issue about the possible consequences of using these methods to outmaneuver already existing approaches to content moderation.

Automated Grammatical Error Correction (GEC) systems are gaining more and more popularity. They are often used, for example, to automatically measure an aspect of a candidate’s fluency using speech transcription as a form of evaluation and feedback – the number of edits from input to the corrected GEC output sentence is indicative of the candidate’s language ability, where fewer edits suggest better fluency.

GEC systems based on deep learning are very powerful and accurate. And they are also sensitive to possible attacks. If a small concrete change is introduced into the input of the system, the output can be greatly adversely changed.

In this paper, Vyas Raina and Mark Gales showed how to trick the GEC system into hiding errors and get a perfect fluency score using universal adversarial attacks.

For the model developer, the transparency of the black box behavior is provided by the explanation of the model, indicating the influence of the input attributes on the prediction of the model. But, such dependence of explanations on input creates risks of confidentiality of sensitive user data, which, unfortunately, has not been studied much yet.

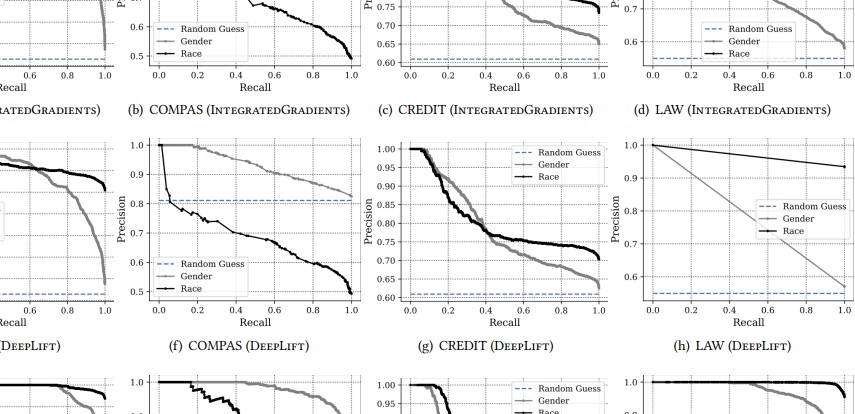

Vasisht Duddu and Antoine Boutet in their paper focused on the privacy risk associated with an attribute inference attack, that is, when an attacker can infer sensitive attributes of user input given the explanation of the model. The researchers developed the first attribute inference attack against model explanations in two threat models and evaluated it on four reference datasets and four state-of-the-art algorithms. Researchers have shown that the value of sensitive attributes can be successfully inferred from explanations in both threat models, and even that an attack is successful when using only explanations that match sensitive attributes.

The researchers concluded that model explanations are a strong attack surface that an adversary can exploit.

The attribute inference attack is one of the least explored, so this is a great article to get an idea of the new ways to use it in different threat models.

The use of AI and ML-based methods worldwide is increasing. But along with this growth, the threats of the attackers are also growing.

To determine the system’s vulnerability, a AI Red team is needed, which will be able to identify potential threats, describe the properties aimed at increasing the system’s reliability, and develop effective protection tools. Information sharing between model developers, AI security professionals, and other stakeholders is also essential to building a robust AI model.

Chuyen Nguyen, Caleb Morgan, and Sudip Mittal have created and described the prototype CTI4AI system to cover the need to methodically detect and share AI/ML-specific vulnerabilities and threat information.

Subscribe for updates

Stay up to date with what is happening! Get a first look at news, noteworthy research and worst attacks on AI delivered right in your inbox.