Studying vulnerabilities in machine learning models is necessary for further successful work on potential attacks and defenses. Here is a selection of the most interesting studies for July 2022.

Why is it important? We are starting to see attacks on AI being combined. In classical systems this is nothing new: in recent security competitions such as CanSecWest, researchers chain more than 10 vulnerabilities to break a browser or an OS. For AI, however, it is relatively new. As a rule, information disclosure attacks are used to understand a model so that it can be exploited with more precise adversarial examples. Here it is the other way around: adversarial examples are used to conduct information disclosure attacks.

The rise in computing power and the collection of huge datasets are driving the wider adoption of machine learning. Since datasets often contain personal data, there is a threat to privacy. One line of research into what a model reveals about its training data is the membership inference attack, which tries to determine whether a given sample was used for training. This paper presents a new way of conducting it.

In this article, Hamid Jalalzai, Elie Kadoche, Rémi Leluc, and Vincent Plassier developed a tool to measure training data leakage. They showed how to use a quantity that serves as a measure of the total variation of a trained model near its training samples. The researchers also proposed a new defense mechanism, supported by convincing numerical experiments.
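To make the idea of "variation of a model near a sample" more concrete, here is a minimal sketch. It is not the authors' method: `local_variation`, `toy_model`, the random-perturbation estimate, and all parameter values are assumptions chosen for illustration. It only shows how one could score a sample by how much a model's output changes in a small neighborhood around it.

```python
import math
import random


def local_variation(model, x, radius=0.1, n_samples=200, seed=0):
    """Estimate how much `model` changes around `x`.

    Draws random points in a small box around x and averages the
    absolute change in the model output. This is an illustrative
    estimate, not the quantity defined in the paper.
    """
    rng = random.Random(seed)
    base = model(x)
    total = 0.0
    for _ in range(n_samples):
        perturbed = [xi + rng.uniform(-radius, radius) for xi in x]
        total += abs(model(perturbed) - base)
    return total / n_samples


def toy_model(x):
    """Hypothetical stand-in for a trained model (scalar output)."""
    return math.tanh(3.0 * sum(x))


# Score two samples: one where the toy model is steep, one where it is flat.
score_steep = local_variation(toy_model, [0.0, 0.0])
score_flat = local_variation(toy_model, [2.0, 2.0])
print(score_steep, score_flat)
```

How such a score would be thresholded to decide membership is not described in this digest, so the sketch stops at computing the score.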

5G is a relatively new application area for adversarial attacks. Most research papers have focused on computer vision, audio, and text data, but recently such attacks have been successfully transferred to almost every domain, and now even to 5G. AI exists in places where you don’t expect it, and that is why the security of AI is essential.

Machine learning is the main asset of 5G networks, as there are insufficient human resources to support several billion devices while maintaining optimal quality of service (QoS).

Everyone is aware of machine learning vulnerabilities, but 5G networks are subject to a separate type of adversarial attack, as shown in the work of Giovanni Apruzzese, Rodion Vladimirov, Aliya Tastemirova, and Pavel Laskov.

In the paper, the researchers presented a new threat model for adversarial machine learning that is suited to 5G networks and does not require compromising the target 5G system. They proposed a framework for machine learning security assessments based on public data. The threat model itself was pre-evaluated on six machine learning applications envisaged in 5G. The threats it describes affect both the training and inference stages, can reduce system performance, and have a lower entry barrier.

Graph neural networks are the next step in neural network research, and backdoor attacks are the next step in attacks on AI. Now you see those two combined in one paper. It is noteworthy that these attacks are transferable, which makes them even more serious.

Graph Neural Networks (GNNs) have achieved overwhelming success in graph mining tasks. In doing so, they benefit from a message passing strategy that combines the local structure and node features to better learn the graph representation.

Unsurprisingly, GNNs, like other types of deep neural networks, are vulnerable to imperceptible perturbations in both graph structure and node features.

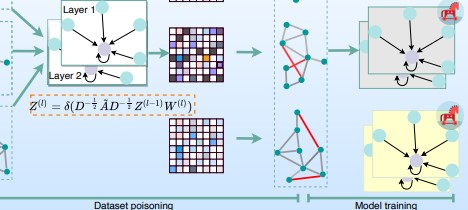

In their article, Shuiqiao Yang, Bao Gia Doan, Paul Montague, Olivier De Vel, Tamas Abraham, Seyit Camtepe, Damith C. Ranasinghe, and Salil S. Kanhere presented a Transferable GRAPh backdoor attack (TRAP). Its essence is to poison the training dataset with perturbation-based triggers that can lead to an effective and transferable backdoor attack.

The TRAP attack differs from previous work in several ways. It uses a graph convolutional network (GCN) surrogate model to generate perturbation triggers for a black-box attack. It also generates sample-specific perturbation triggers that do not have a fixed pattern. And, for the first time, the attack is shown to transfer to different GNN models when they are trained on the forged poisoned training dataset.
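The general shape of such a poisoning step can be sketched as follows. This is a simplified illustration under stated assumptions, not the TRAP implementation: graphs are plain edge sets, `degree_score` is a hypothetical placeholder for whatever signal a surrogate GCN would provide, and relabeling poisoned samples to a target class is assumed as the usual backdoor setup. The point it shows is that the edges chosen for flipping depend on each graph, so the trigger has no fixed pattern.

```python
import random


def flip_edge(edges, u, v):
    """Return a copy of the edge set with the undirected edge (u, v) toggled."""
    edge = (min(u, v), max(u, v))
    new_edges = set(edges)
    if edge in new_edges:
        new_edges.remove(edge)
    else:
        new_edges.add(edge)
    return new_edges


def degree_score(edges, pair):
    """Hypothetical placeholder score: count edges touching either node.

    In a real attack this signal would come from a surrogate model.
    """
    u, v = pair
    return sum(1 for a, b in edges if u in (a, b) or v in (a, b))


def poison_graph(edges, n_nodes, score_fn, budget):
    """Toggle the `budget` highest-scoring node pairs of this graph."""
    candidates = [(u, v) for u in range(n_nodes) for v in range(u + 1, n_nodes)]
    ranked = sorted(candidates, key=lambda p: score_fn(edges, p), reverse=True)
    poisoned = set(edges)
    for u, v in ranked[:budget]:
        poisoned = flip_edge(poisoned, u, v)
    return poisoned


def poison_dataset(dataset, target_label, rate, score_fn, budget, seed=0):
    """Poison a fraction `rate` of (edges, n_nodes, label) samples.

    Poisoned graphs get a sample-specific trigger and the target label.
    """
    rng = random.Random(seed)
    n_poison = int(len(dataset) * rate)
    chosen = set(rng.sample(range(len(dataset)), n_poison))
    result = []
    for i, (edges, n_nodes, label) in enumerate(dataset):
        if i in chosen:
            trigger_graph = poison_graph(edges, n_nodes, score_fn, budget)
            result.append((trigger_graph, n_nodes, target_label))
        else:
            result.append((set(edges), n_nodes, label))
    return result


# Toy dataset: four small graphs, all labeled 0 (edges stored as (min, max)).
toy_dataset = [
    ({(0, 1), (1, 2)}, 4, 0),
    ({(0, 3)}, 4, 0),
    ({(0, 1), (2, 3)}, 4, 0),
    ({(1, 3), (2, 3)}, 4, 0),
]
poisoned_dataset = poison_dataset(toy_dataset, target_label=1, rate=0.5,
                                  score_fn=degree_score, budget=1)
```

Because the score is computed per graph, two poisoned graphs generally receive different edge flips, which is the property the "no fixed pattern" description refers to.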

In their work, the researchers showed the effectiveness of the TRAP attack in creating transferable backdoors in four popular GNNs using four real-world datasets.
