The Adversa team has put together a selection of the best research in artificial intelligence and machine learning security for October 2022.


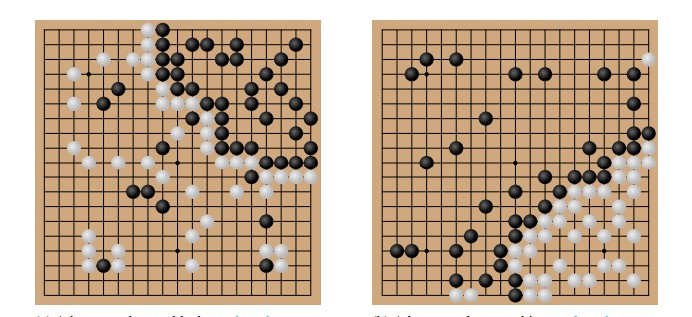

Now humans can beat AI at Go again.

Tony Tong Wang, Adam Gleave, Nora Belrose, Tom Tseng, Joseph Miller, Michael D Dennis, Yawen Duan, Viktor Pogrebniak, Sergey Levine, and Stuart Russell attacked KataGo, a modern AI system for playing Go, by training an adversarial policy that plays against a frozen KataGo victim. According to the researchers, this is the first successful end-to-end attack against a Go AI that plays at the level of a top human professional.

The attack achieved a win rate of almost 100% against KataGo when it plays without search, and more than 50% when it plays with search. It is worth noting that the adversary wins by tricking KataGo rather than by playing stronger Go: it forces the game to end prematurely at a point that favors the adversary. The results presented in the paper show that unexpected failures can occur even in professional-level AI systems.

Using KataGo, the strongest publicly available Go AI system, which was trained through self-play to a very high level, as their example, the researchers set out to answer the question: Are adversarial policies a vulnerability of self-play policies in general, or simply an artifact of insufficiently capable policies?

Whisper, a recently released automatic speech recognition (ASR) model, was trained on a large amount of supervised data. Its authors trained it exclusively on their own dataset, without any augmentation, and this paid off: the model shows an impressively high level of robustness to out-of-distribution data and random noise.

In their work, researchers Raphael Olivier and Bhiksha Raj showed that this robustness does not extend to adversarial noise. They were able to generate small input perturbations that drastically degrade Whisper’s performance, or even make it transcribe a target sentence of the attacker’s choosing.
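
To make the idea of a small, bounded input perturbation concrete, here is a minimal sketch of a single signed-gradient step (in the style of the fast gradient sign method). It is an illustration only, not the attack from the paper: the linear scorer stands in for a real speech model, and `linear_score`, `sign_gradient_step`, the weights, and the `epsilon` value are all hypothetical.

```python
def sign(x):
    """Return -1, 0, or 1 depending on the sign of x."""
    return (x > 0) - (x < 0)


def linear_score(weights, signal):
    """Toy stand-in for a model: a positive score means the input is handled correctly."""
    return sum(w * s for w, s in zip(weights, signal))


def sign_gradient_step(weights, signal, epsilon):
    """Shift every sample by at most epsilon in the direction that lowers the score.

    For a linear score, the gradient with respect to the input equals `weights`,
    so the signed-gradient step only needs the sign of each weight.
    """
    return [s - epsilon * sign(w) for w, s in zip(weights, signal)]


# Hypothetical toy values, not taken from the paper.
weights = [0.5, -1.0, 2.0, 0.25]
signal = [0.2, -0.1, 0.3, 0.4]
epsilon = 0.3

adversarial = sign_gradient_step(weights, signal, epsilon)
print("clean score:      ", linear_score(weights, signal))
print("adversarial score:", linear_score(weights, adversarial))
```

No sample moves by more than `epsilon`, yet the score changes sign. Attacks on real models typically iterate such steps with a much smaller budget.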

Because Whisper is open source, these vulnerabilities can have severe consequences once the model is deployed in real applications.

Machine learning models predict city-wide traffic by capturing complex spatiotemporal autocorrelations. These methods assume a reliable and unbiased forecasting environment, which is not readily available in the real world.

Researchers Fan Liu, Hao Liu, and Wenzhao Jiang analyzed the vulnerability of AI-driven spatiotemporal traffic prediction models to adversarial attacks and proposed a practical adversarial spatiotemporal attack framework. In their paper, the researchers describe an iterative gradient-guided node saliency method that identifies a time-dependent set of victim nodes, instead of attacking all geographically dispersed data sources simultaneously.
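
The idea of choosing a time-dependent set of victim nodes can be sketched roughly as follows. This is a simplified, single-pass illustration under stated assumptions, not the authors' algorithm: the gradients are made-up numbers standing in for the gradient of the forecasting loss with respect to each sensor's input, saliency is taken to be the sum of absolute gradient values, and `node_saliency`, `select_victim_nodes`, and `budget` are hypothetical names.

```python
def node_saliency(node_gradients):
    """Score each node by the total magnitude of its input gradient."""
    return [sum(abs(g) for g in grads) for grads in node_gradients]


def select_victim_nodes(node_gradients, budget):
    """Return the indices of the `budget` most salient nodes, in ascending order."""
    scores = node_saliency(node_gradients)
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:budget])


# Hypothetical loss gradients for 4 traffic sensors (2 features each) at two time steps.
gradients_by_step = {
    0: [[0.1, -0.2], [0.9, 0.4], [0.0, 0.05], [-0.6, 0.3]],
    1: [[0.7, -0.8], [0.1, 0.1], [0.5, 0.6], [0.0, 0.2]],
}

# The victim set is recomputed at every step, so it changes over time.
victims_by_step = {
    step: select_victim_nodes(grads, budget=2)
    for step, grads in gradients_by_step.items()
}
print(victims_by_step)
```

With these toy numbers, different sensors are selected at each step, which is what lets an attacker perturb only a few nodes at a time rather than every data source.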

Their paper also shows that adversarial training with the proposed attacks can make spatiotemporal traffic prediction models more robust.

The Metaverse promises an immersive experience for communicating, working, and playing in a self-sustaining, hyper-spatio-temporal virtual world. Advances in technologies such as augmented reality, virtual reality, extended reality (XR), artificial intelligence (AI), and 5G/6G communications are key enablers of AI-XR Metaverse applications. Taken together, these advances point to the Metaverse as a new paradigm for the next generation of the Internet.

Every new technology brings new safety challenges, and the Metaverse is no exception: AI-XR Metaverse applications carry the risk of unwanted activities that can undermine user experience, privacy, and security, and could ultimately put lives at risk.

In the first review of AI risks for the Metaverse, researchers Adnan Qayyum, Muhammad Atif Butt, Hassan Ali, Muhammad Usman, Osama Halabi, Ala Al-Fuqaha, Qammer H. Abbasi, Muhammad Ali Imran, and Junaid Qadir analyzed the security, privacy, and reliability aspects of using various AI methods in AI-XR Metaverse applications, and provided a classification of potential solutions that could be used to develop secure, private, and trustworthy AI-XR applications. Nonetheless, some questions remain open and require further research.
