Studying attacks on machine learning models is a necessary step toward understanding and fixing their vulnerabilities. Here is a selection of the most interesting studies from January 2022.

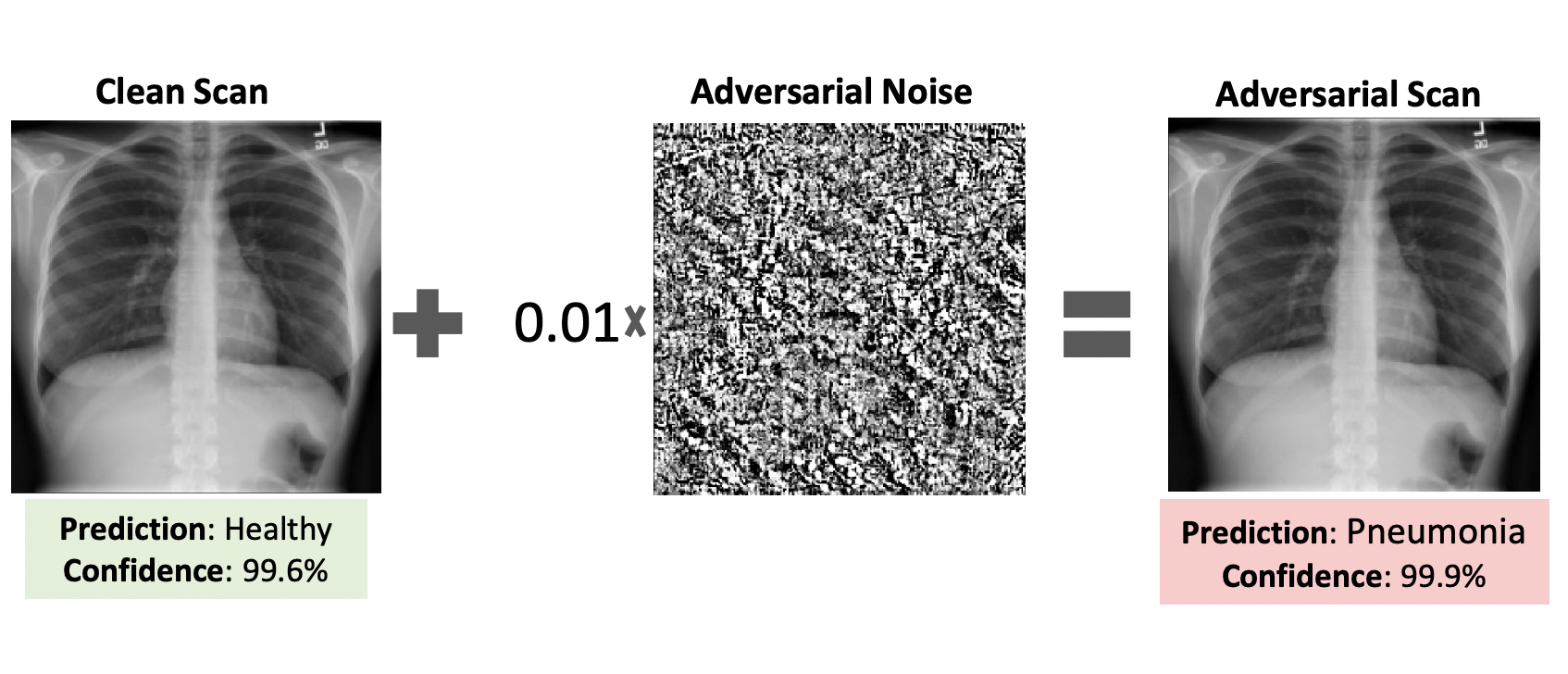

Deep learning is now widely applied to medical image analysis by medical professionals and healthcare providers. Such systems are built on deep neural networks (DNNs). DNNs have been shown to be vulnerable to adversarial samples: images with subtle changes to a few pixels can alter the model’s prediction. Researchers have already proposed several defenses, which either make the DNN more robust or detect adversarial samples before they reach the system.
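To make the idea of a small pixel change flipping a prediction concrete, here is a minimal sketch using the well-known fast gradient sign method on a toy logistic-regression "model". Everything in it is an assumption for illustration: the weights, the four-pixel "image", and the function names are hypothetical, and this is not the attack used in the study discussed here.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, pixels):
    """Positive-class probability of a toy logistic-regression model."""
    z = sum(w * x for w, x in zip(weights, pixels)) + bias
    return sigmoid(z)

def fgsm_perturb(weights, bias, pixels, label, epsilon):
    """Shift every pixel by +/- epsilon in the direction that increases the loss."""
    p = predict(weights, bias, pixels)
    # For binary cross-entropy, the gradient w.r.t. input pixel i is (p - y) * w_i.
    grads = [(p - label) * w for w in weights]
    signs = [(g > 0) - (g < 0) for g in grads]
    # Clip so the result stays a valid image with intensities in [0, 1].
    return [min(1.0, max(0.0, x + epsilon * s)) for x, s in zip(pixels, signs)]

# Hypothetical model and input, chosen only for illustration.
weights = [0.9, -0.6, 0.4, -0.8]
bias = 0.0
image = [0.55, 0.45, 0.60, 0.40]
label = 1  # true class

adversarial = fgsm_perturb(weights, bias, image, label, epsilon=0.1)
clean_score = predict(weights, bias, image)
adversarial_score = predict(weights, bias, adversarial)
print(f"clean: {clean_score:.3f}, adversarial: {adversarial_score:.3f}")
```

In this toy setup no pixel moves by more than 0.1, yet the score crosses the 0.5 decision threshold. Real attacks on medical DNNs work on far larger inputs and models, but the principle is the same.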

However, none of these works consider an informed attacker who can adapt to the defense mechanism. A new study by Moshe Levy, Guy Amit, Yuval Elovici, and Yisroel Mirsky demonstrates that such an attacker can bypass five modern defenses while still fooling the victim’s deep learning model, rendering these defenses useless. The researchers also suggest better alternatives for protecting medical DNNs from such attacks, for example hardening the security of the system and using digital signatures.
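The integrity-check idea can be sketched in a few lines. Note the substitution: the example below uses an HMAC from Python's standard library as a stand-in, which is a symmetric message authentication code rather than a true digital signature. A real deployment would more likely use asymmetric signatures so that the verifier cannot forge tags. The key, the byte strings, and the function names are hypothetical.

```python
import hashlib
import hmac

def sign_image(image_bytes: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over the raw image bytes."""
    return hmac.new(key, image_bytes, hashlib.sha256).hexdigest()

def verify_image(image_bytes: bytes, key: bytes, tag: str) -> bool:
    """Return True only if the image still matches the tag issued at acquisition."""
    expected = sign_image(image_bytes, key)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, tag)

# Hypothetical key and image contents, for illustration only.
key = b"scanner-secret-key"
original = b"\x00\x10\x20\x30 raw scan data"
tag = sign_image(original, key)

tampered = b"\x00\x10\x21\x30 raw scan data"  # one byte changed
original_ok = verify_image(original, key, tag)
tampered_ok = verify_image(tampered, key, tag)
print(original_ok, tampered_ok)
```

The point of the design is that the check does not depend on the model at all: an image modified after acquisition fails verification before it is ever passed to the DNN.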

The Open Radio Access Network (O-RAN) is a new, open, adaptive, and intelligent RAN architecture. Inspired by the success of artificial intelligence in other areas, it uses machine learning to automatically and efficiently manage network resources for use cases such as traffic management, QoS prediction, and anomaly detection. Such systems carry their own class of logical vulnerabilities, which arise from the inherent limitations of learning algorithms and which an attacker can exploit using adversarial machine learning (AML).

A new study by Ron Bitton, Dan Avraham, Eitan Klevansky, Dudu Mimran, Oleg Brodt, Heiko Lehmann, Yuval Elovici, and Asaf Shabtai presents a systematic analysis of AML threats to O-RAN. The researchers considered relevant machine learning use cases, analyzed various scenarios for deploying machine learning workflows in O-RAN, and then defined a threat model: they identified potential adversaries, listed their adversarial capabilities, and analyzed their main goals. They also investigated various AML threats in O-RAN and discussed a large number of attacks that can be performed to realize these threats. Finally, the study demonstrates an AML attack on a machine learning model used for traffic management.

End-to-end neural machine translation (NMT) has become very popular. Noisy input usually makes these models unstable, but using generated adversarial examples as augmented training data has proven effective against this problem. Existing adversarial example generation (AEG) methods operate at the word or character level. In a new study, Juncheng Wan, Jian Yang, Shuming Ma, Dongdong Zhang, Weinan Zhang, Yong Yu, and Furu Wei propose a phrase-level adversarial example generation (PAEG) method to improve the robustness of the model. The method uses a gradient-based strategy to replace phrases at vulnerable positions in the original input.
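As a rough illustration of gradient-guided phrase replacement, the sketch below picks the phrase whose tokens have the largest mean gradient norm and swaps it for a candidate from a phrase table. This is a simplified toy under stated assumptions, not the authors' implementation: the gradient norms are hard-coded instead of coming from an NMT model, and the scoring rule, the phrase table, and all names are hypothetical.

```python
def most_vulnerable_phrase(grad_norms):
    """Index of the phrase whose tokens have the largest mean gradient norm."""
    scores = [sum(norms) / len(norms) for norms in grad_norms]
    return max(range(len(scores)), key=scores.__getitem__)

def replace_phrase(phrases, grad_norms, phrase_table):
    """Return a copy of the input with the most vulnerable phrase substituted."""
    idx = most_vulnerable_phrase(grad_norms)
    candidates = phrase_table.get(phrases[idx], [])
    perturbed = list(phrases)
    if candidates:
        # Take the first candidate; a real system would rank the candidates.
        perturbed[idx] = candidates[0]
    return perturbed

# Hypothetical segmented source sentence and per-token gradient norms.
phrases = ["the committee", "turned down", "the proposal"]
grad_norms = [[0.1, 0.2], [0.9, 0.7], [0.2, 0.3]]
phrase_table = {"turned down": ["rejected"]}

augmented = replace_phrase(phrases, grad_norms, phrase_table)
print(" ".join(augmented))
```

The perturbed sentence would then be added to the training data alongside the original, which is the augmentation step the paragraph above describes.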

The method was evaluated on three benchmarks: Chinese-English LDC, German-English IWSLT14, and English-German WMT14. According to the experimental results, the approach significantly improves performance compared to previous methods.
