Deepfakes are posing a real threat to the ethical side of AI

What Happens When AI Falls Into The Wrong Hands?

Forbes, November 18, 2021

AI has spread to almost all areas of human life, and its potential is truly enormous. However, as is the case with any other technology, AI can become a dangerous weapon once it falls into the hands of malicious actors.

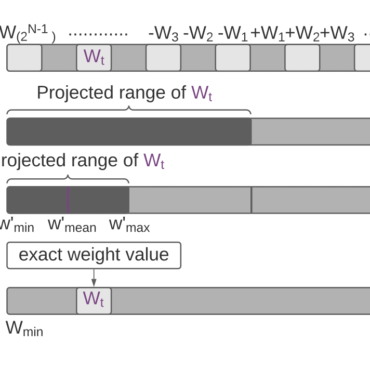

Today, artificial intelligence can be turned against the very defense systems that were created with its help. To avoid detection, adversarial AI attacks leverage the analytic and decision-making capabilities of established machine learning-based security tools; in doing so, they outsmart less advanced machine learning technology by convincing these security tools that the AI-based malware is harmless.

Adversarial AI attacks can be divided into three main types: AI-based cyberattacks, AI-facilitated cyberattacks and adversarial learning.

Although adversarial AI has not yet become widespread, isolated experimental cases are already occurring, and advanced technologies can fall into the hands of cybercriminals at any time. According to experts, the situation could significantly worsen in the next year and a half, at which point unprepared organizations will face attacks that undermine traditional security solutions.

Healthcare IT News, October 28, 2021

The principles define the areas in which international standards organizations and other collaborative groups can promote what is called good practice in machine learning.

The U.S. Food and Drug Administration presented a list of “guiding principles” aimed at promoting the safe and effective development of AI-based medical devices. Both the FDA and its U.K. and Canadian counterparts commented that the principles were intended to form the foundation for Good Machine Learning Practice. “As the AI/ML medical device field evolves, so too must GMLP best practice and consensus standards,” explained the agency.

The FDA emphasizes that artificial intelligence and machine learning technologies can radically transform healthcare, but that using them poses a number of challenges. The ten guiding principles identify the main areas in which international standards organizations and other collaborative bodies can work to promote GMLP. Stakeholders can use these principles to adopt and adapt best practices from other sectors for use in health technology, and to develop new practices based on them.

Gizmodo, November 19, 2021

The study involved 7,000 people and showed that this nascent technology may have its limits.

Deepfakes have already been actively used in pornography, e-commerce and literal bank robberies. Experts have long feared that the same technology could be used to interfere in future elections, but according to one recent study, such an outcome is much harder to achieve than it might seem. Researchers at the Massachusetts Institute of Technology (MIT) have released a new report on whether political video clips can be compelling enough to sway viewers, and found that it's not so simple.

“Overall, we find that individuals are more likely to believe an event occurred when it is presented in video versus textual form,” the study said. “[J]ust because video is more believable doesn’t mean that it can change people’s minds.” The researchers concluded that a single deepfake video featuring a particular politician is unlikely to affect people's political views any more than a fake text message about the same politician, of the kind already encountered today. The only advantages a video may have are the number of views it can potentially collect and the percentage of people who believe what they see with their own eyes.