The Adversa team makes for you a weekly selection of the best research in the field of artificial intelligence security

Deep neural networks (DNNs) provide significant assistance in processing of aerial imagery taken with the help of earth-observing satellite platforms. However, since DNNs are generally susceptible to adversarial attacks, it is believed that this may affect aerial imagery as well.

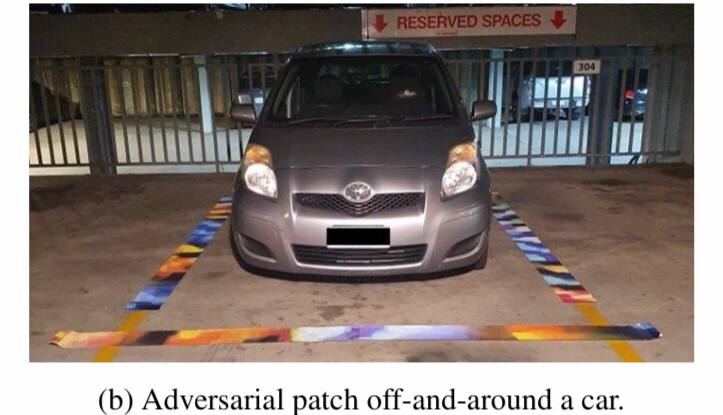

Researchers Andrew Du, Bo Chen, Tat-Jun Chin, Yee Wei Law, Michele Sasdelli and others present one of the first attempts to defend against physical attacks on aerial photographs. To this end, adversarial patches have been optimized, manufactured and installed on target objects, in this case cars in order to reduce the affect of an object detector. In particular, physical adversarial attacks on aerial images, especially those taken from satellite platforms, are challenged by atmospheric factors such as lighting or weather, and the large distance. With these complicating parameters in mind, researchers developed novel metrics to measure the effectiveness of physical adversarial attacks against object detectors in aerial scenes. According to the study, adversarial attacks pose a real threat to DNNs in aerial imagery.

Reinforcement learning policies are based on deep neural networks and are subject to subtle hostile influences on their inputs similar to network image classifiers. Therefore new attacks on reinforcement learning are here to come.

In this study, Ezgi Korkmaz examines the effect of adversarial learning on neural politics learned by an agent.

Two parallel approaches to the study of the outcomes of adversarial learning of deep neural policies are investigated. Approaches are based on worst case distributional bias and feature sensitivity; Some of the experiments are carried out on the OpenAI Atari environments. According to the researcher, the results may lay the foundation for understanding the relationship between adversarial learning and different beliefs about the reliability of neural policies.

Recent published attacks on deep neural networks (DNN) have highlighted the importance of methodologies and tools for assessing security risks when using this technology in mission-critical systems, as it can help establish trust and accelerate deep learning adoption in sensitive and security systems.

In this study, Doha Al Bared and Mohamed Nassar present a new method for protecting deep neural network classifiers and, in particular, convolutional ones. New Defense Method requires less computation power despite a small cost and refers to a novel technique called ML-LOO: the expensive pixel by pixel leave-one-out approach of the technique was replaced with the coarse-grained leave-one-out. According to the study, large gains in efficiency are possible even with a slight decrease in detection accuracy.