The Adversa team makes for you a weekly selection of the best research in the field of artificial intelligence security

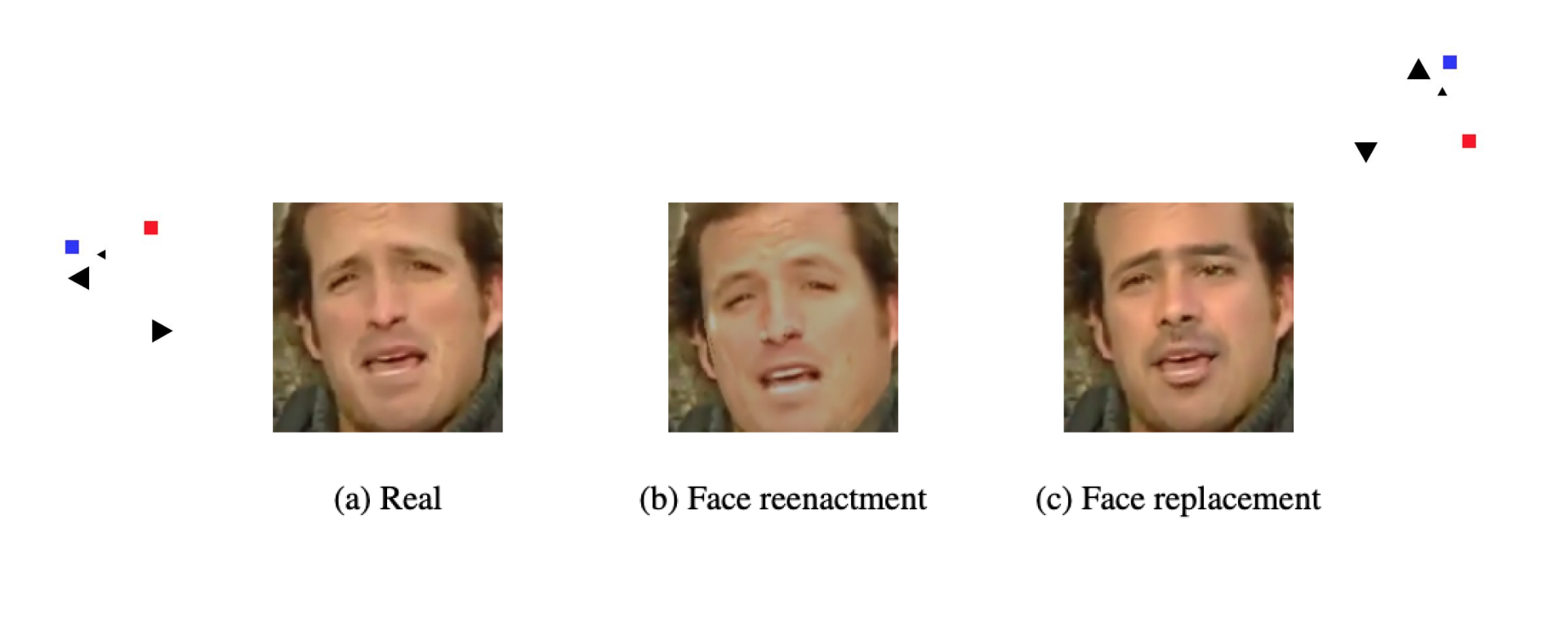

The trust of pictures and videos published online is being undermined due to deepfakes. As it was noted, deepfakes have become one of the main problems of AI technologies, and cybercriminals are increasingly using deepfakes. That’s why the detection of fakes has become the main focus of researchers.

Advanced detection methods include a face extractor and a classifier which decides if an image is real or fake. All previous research papers were aimed at improving detection performance in non-adversarial settings and did not take into account adversarial settings. In this study, researchers Xiaoyu Cao, Neil Zhenqiang Gong explore the security of the state-of-the-art deepfake detection methods in adversarial settings.

The researchers use fake face pictures from deepfakes data sources and train deepfakes detection methods. Their results reveal some security limitations in adversarial settings. For instance, there is a possibility of evading a face extractor if an attacker adds Gaussian noise to its deepfake images. It makes the extractor fail to extract the correct face areas.

The researchers have discovered that if a face classifier trained uses fake faces generated by one method, it cannot detect fake faces generated by another method. So it is possible to bypass detection with the help of a different deepfake generation method. In addition, attackers are able to avoid a classifier; it is susceptible to backdoor attacks. Deepfake detection should consider the adversarial nature of the problem.

The key point is that it is hard to detect deepfakes effectively with good accuracy in the real-life scenario because the input still can be fully unknown and unpredicted to the detector.

If added onto input data, carefully designed perturbations can trick deep neural networks (DNNs). This vulnerability can be found inside DNNs and frequently exploited with adversarial examples, which are malicious images.

Humans can see carefully crafted perturbations, and they have limited success rates when attacking black-box models. To solve these problems, the authors introduce a Demiguise Attack.

A Demiguise Attack is a new, unrestricted black-box adversarial attack based on perceptual similarity. It boosts adversarial strength and robustness, increases transferability, and shows promising cross-task transferability performances. The attack refers to powerful and photorealistic adversarial examples.

This is a new semantic adversarial attack for deep neural networks. What counts here is that the perturbations themselves are not a “noise”, but semantic features. In a word, the adversarial picture contains some kind of semantic additional features, for example, hair, nose, eyes changes that are almost invisible. They are added to the original picture. As a result, the picture looks almost imperceptible and it is similar to the original one regardless of the large magnitudes of perturbations. It makes the analysis of such examples more difficult for the detectors.

Moreover, researchers notice that their method can simulate illumination and contrast changes that occur in real-world scenarios. Therefore, their work can help with attacks transfer from digital into a real-world scenario.

One of the most huge challenges in text genre classification involves topical shifts. The prevalence of words on a chosen topic in a certain genre can fool the genre classifier to recognize any text.

In this paper, researchers Mikhail Lepekhin and Serge Sharoff try to mitigate a reliability issue, they explore adversarial attacks on text classifiers and topical biases in the training corpus. They successfully attacked state-of-the-art text classification models, such as XLM-RoBERTa, which is a multi-language model trained on more than 100 languages, to show that even really powerful and complicated models can be fooled using mechanisms like TextFooler.

These attacks can lead to wrong genre classifications, incorrect text meaning prediction. Furthermore, these attacks basically mean that if we can use such kinds of tools, we can potentially bypass some systems such as spam detectors, abusive text detectors with the latest classifiers.