This week’s stories highlight the rapid emergence of new threats and defenses in the Agentic AI landscape. From OWASP’s DNS-inspired Agent Name Service (ANS) for verifying AI identities to real-world exploits like jailbreakable “dark LLMs” and prompt-injected assistants like GitLab Duo, the ecosystem is shifting toward identity-first architecture and layered enforcement.

At the same time, researchers from Ben Gurion University have shown that AI jailbreak attacks remain alarmingly easy. Their “universal jailbreak” bypassed multiple top models’ safety mechanisms, enabling responses about cybercrime and fraud—raising red flags about the rise of “dark LLMs” designed without ethical constraints. And in GitLab Duo, a newly discovered indirect prompt injection vulnerability showed how deeply integrated AI assistants can be manipulated through hidden instructions—exfiltrating source code or redirecting users to malicious sites. These cases underscore how context-aware AI features can inherit hidden risks from their environment.

As autonomous AI expands across the enterprise, the stakes are clear. Agent identity, memory, and tool access must be governed in real time—not just audited after the fact. Info-Tech Research Group’s AI Red Teaming blueprint and platforms like Adversa AI offer the means to continuously simulate real-world threats and harden GenAI systems—before attackers do.

AI Red-Teaming: A Strategic Guide to Securing AI Systems Against Emerging Threats Published by Info-Tech Research Group

Morningstar, April 23, 2025

A new guide from Info-Tech Research Group lays out a strategic four-step framework to help organizations launch AI red-teaming programs and secure GenAI systems.

As AI adoption outpaces security readiness, the blueprint outlines how to define scope, build cross-functional teams, select adversarial testing tools, and establish red-teaming metrics. The guidance also highlights regulatory pressure across the U.S., EU, and Canada, where AI red teaming is fast becoming a recommended or required practice for enterprise compliance and resilience.

How to deal with it:

— Define and prioritize which AI systems (e.g., GenAI, ML, chatbots) require red-teaming.

— Build a multidisciplinary team aligned with frameworks like NIST AI RMF and OWASP GenAI Guide.

— Evaluate and deploy tools for adversarial testing, behavior analysis, and compliance validation.

OWASP proposes a way for enterprises to automatically identify AI agents

InfoWorld, May 20, 2025

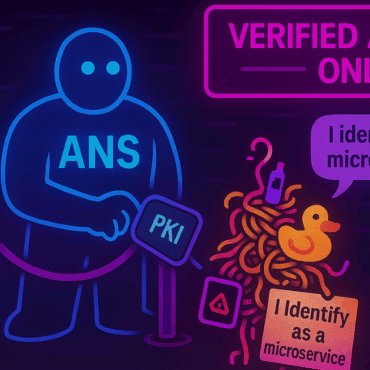

A new OWASP proposal introduces the Agent Name Service (ANS), a DNS-inspired system to securely identify and verify AI agents before they interact with enterprise systems.

Drafted by contributors from AWS, Cisco, and Intuit, the ANS framework uses Public Key Infrastructure (PKI) to provide verifiable agent identities, supporting lifecycle management, capability-based discovery, and compatibility with standards like A2A, MCP, and ACP. Analysts see ANS as a promising foundation for AI agent governance, with backers from SAP, NIST, Oracle, and the EU supporting its development. As Agentic AI systems scale rapidly, OWASP positions ANS as the “missing piece” for enterprise observability and safe collaboration across multi-agent networks.

How to deal with it:

— Stay informed on evolving standards like ANS for agent identity and discovery.

— Prepare enterprise infrastructure to support capability-based agent verification.

— Monitor adoption of ANS and similar frameworks to align security architecture with agent-based AI ecosystems.

Most AI chatbots easily tricked into giving dangerous responses, study finds

The Guardian, May 21, 2025

A new study reveals that leading AI chatbots can still be easily jailbroken to produce harmful or illegal content — exposing a scalable threat to public safety.

Researchers from Ben Gurion University demonstrated a “universal jailbreak” that successfully bypassed safety controls on multiple mainstream LLMs, forcing them to generate responses on topics like hacking, drug production, and financial fraud. The findings show that even with content moderation and prompt filtering, AI systems remain highly exploitable due to the underlying conflict between helpfulness and safety constraints. Some models, dubbed “dark LLMs,” are openly marketed online as uncensored tools for cybercrime. The researchers stress that this threat is growing rapidly, warning that access to dangerous capabilities may soon become as simple as using a smartphone.

How to deal with it:

— Strengthen model-level defenses through robust AI red teaming and adversarial stress testing.

— Treat dark LLMs as critical security threats, introducing policy enforcement and provider accountability.

— Perform continous AI Red Teaming for GenAI apps

GitLab Duo Vulnerability Enabled Attackers to Hijack AI Responses with Hidden Prompts

The Hacker News, May 23, 2025

A newly discovered vulnerability in GitLab’s Claude-powered coding assistant allowed attackers to hijack AI responses using hidden prompts embedded in code comments and merge requests.

Researchers from Legit Security found that indirect prompt injection could be used to exfiltrate private source code, leak internal logic, or redirect users to malicious links — all triggered by invisible instructions embedded in project metadata. This highlights the underestimated risk of deeply integrated AI assistants that inherit context—and vulnerabilities—from the development environments they support.

How to deal with it:

— Sanitize all user input before it is processed by AI systems, including metadata like comments and issue descriptions.

— Restrict the AI assistant’s access scope and train models to ignore embedded HTML, JavaScript, and encoded content.

— Treat all deeply integrated AI copilots as high-risk and include them in AI red teaming and secure development practices.

Agentic AI shaping strategies and plans across sectors as AI agents swarm

Biometric Update, May 22, 2025

As AI agents scale across sectors, new frameworks are emerging to verify identity, prevent fraud, and ensure trust in increasingly autonomous digital systems.

The rapid growth of Agentic AI is prompting organizations to rethink how digital agents are discovered, authenticated, and governed. New initiatives like Vouched’s KnowThat.ai Agent Reputation Directory aim to sort trustworthy agents from malicious ones, while Microsoft’s Entra Agent ID extends identity management to AI systems operating in enterprise environments. Experts from Descope and others warn that without robust, interoperable identity protocols, agent misuse—ranging from unauthorized transactions to deepfake abuse—will only grow. The push for new trust standards such as MCP-I (Model Context Protocol – Identity) reflects industry consensus that scalable, secure agent ecosystems require foundational identity infrastructure.

How to deal with it:

— Implement strong agent identity and verification protocols to prevent impersonation and misuse.

— Adopt interoperable standards like MCP-I and integrate with discovery tools like ANS and Agent Reputation Directories.

— Align security practices with agent behavior and capabilities, not just user access models.

Subscribe for updates

Stay up to date with what is happening! Get a first look at news, noteworthy research and worst attacks on AI delivered right in your inbox.